Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 23-35 |
| Gender | Female, 54.8% |
| Disgusted | 45.1% |
| Calm | 53.6% |
| Angry | 45.1% |
| Confused | 45.1% |
| Fear | 45.1% |
| Sad | 45.4% |
| Surprised | 45.3% |
| Happy | 45.3% |

Feature analysis

Amazon

| Feature | Confidence |
|---|---|
| Person | 97.8% |

Categories

Imagga

| Category | Score |
|---|---|
| pets animals | 73.8% |
| paintings art | 16.2% |
| streetview architecture | 3.6% |
| people portraits | 3.1% |
| interior objects | 1.4% |

Captions

Clarifai

Created by general-english-image-caption-blip on 2025-05-17

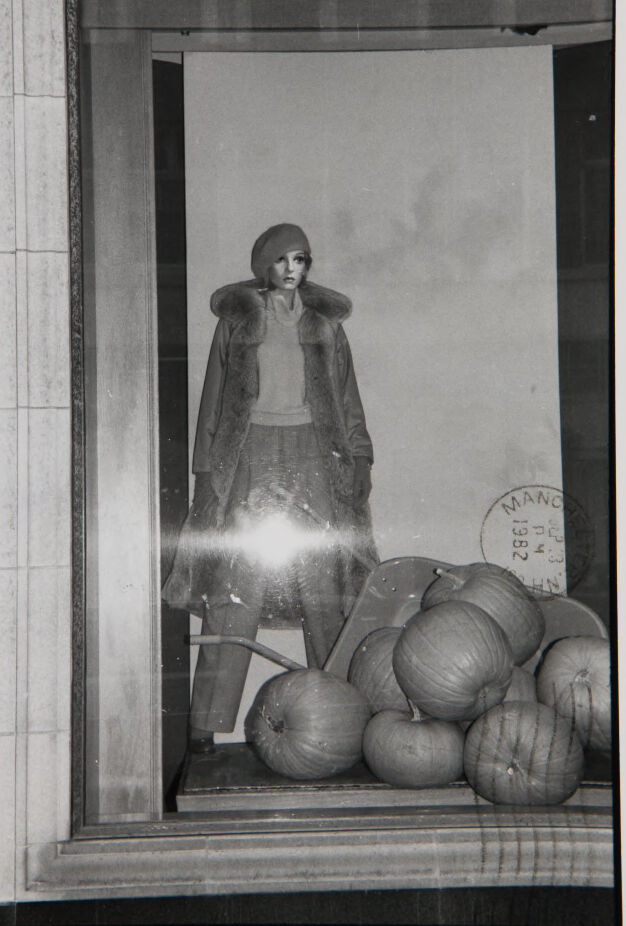

| Caption | Score |
|---|---|
| a photograph of a woman standing in front of a window with a bunch of pumpkins | -100% |

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-11

Here is a description of the image:

The image captures a grayscale photograph of a storefront window display. A mannequin dressed in vintage attire stands prominently in the window. The mannequin sports a beret, a light-colored sweater, a long coat with fur trim, and pants. The reflection of a bright light obscures parts of the mannequin, particularly around the legs.

The window display features a fall theme. Several pumpkins, of varying sizes, are arranged in front of the mannequin, and there is a small garden cart. In the background, a circular stamp with text is partially visible. The window is framed by a border, and the building's exterior is visible on the left side, with stone tiles. The overall composition suggests a vintage aesthetic, likely from the late 20th century.

Created by gemini-2.0-flash on 2025-05-11

Here is a description of the image:

The black and white photo is of a window display. It features a female mannequin dressed in what appears to be 1980s style clothing - a long fur-trimmed coat, sweater, pants, and a beret. At the bottom of the display are several pumpkins and a wheelbarrow. On the right of the mannequin is a partially visible circular mark with lettering and numbers that appear to read "Manchester 1982". The window is framed by what looks like a building's exterior. There is a light glare in the center of the display.