Machine Generated Data

Tags

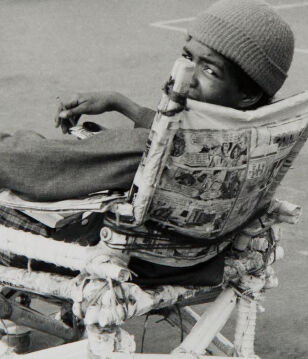

Amazon

created on 2019-11-15

Clarifai

created on 2019-11-15

| Tag | Confidence |
| --- | --- |
| people | 98.2 |
| monochrome | 96.9 |
| street | 96 |
| transportation system | 90.9 |
| man | 89.9 |
| adult | 88.9 |
| one | 88.6 |
| chair | 88.5 |
| vehicle | 86.3 |
| two | 85.6 |
| woman | 80.8 |
| bench | 80.7 |
| cart | 76.9 |
| child | 76.6 |
| black and white | 75.6 |
| snow | 73.1 |
| group together | 72.8 |
| winter | 70.1 |
| sport | 69.5 |
| recreation | 69.1 |

Imagga

created on 2019-11-15

| Tag | Confidence |
| --- | --- |
| rocking chair | 100 |
| chair | 100 |
| seat | 86.1 |
| furniture | 52.5 |
| bench | 28.3 |
| handcart | 25.8 |
| furnishing | 25.1 |
| shopping cart | 23.6 |
| beach | 22.8 |
| sea | 20.3 |
| wheeled vehicle | 20 |
| wood | 20 |
| sand | 19.2 |
| outdoors | 16.4 |
| ocean | 15.8 |
| park | 15.6 |
| summer | 15.4 |
| old | 14.6 |
| season | 14 |
| water | 14 |
| vacation | 13.9 |
| relax | 13.5 |
| travel | 13.4 |
| outdoor | 13 |
| sky | 12.7 |
| park bench | 12.3 |
| sun | 12.1 |
| winter | 11.9 |
| container | 11.5 |
| wooden | 11.4 |
| day | 11 |
| landscape | 10.4 |
| scene | 10.4 |
| cold | 10.3 |
| shore | 10.2 |
| man | 10.1 |
| people | 10 |
| holiday | 10 |
| snow | 10 |
| leisure | 10 |
| road | 9.9 |
| coast | 9.9 |
| fun | 9.7 |
| barrow | 9.6 |
| rest | 9.6 |
| cloud | 9.5 |
| sitting | 9.4 |
| lifestyle | 9.4 |
| tree | 9.2 |
| relaxation | 9.2 |
| metal | 8.8 |
| frozen | 8.6 |
| holidays | 8.4 |
| conveyance | 8.3 |
| vehicle | 8 |
| autumn | 7.9 |
| benches | 7.9 |
| person | 7.8 |
| black | 7.8 |
| nobody | 7.8 |
| outside | 7.7 |
| sit | 7.6 |
| bay | 7.5 |
| support | 7.5 |
| tool | 7.5 |
| boat | 7.4 |
| tourism | 7.4 |
| relaxing | 7.3 |
| tranquil | 7.2 |
| grass | 7.1 |
| male | 7.1 |
| to | 7.1 |

Google

created on 2019-11-15

| Tag | Confidence |
| --- | --- |
| Photograph | 96.1 |
| Black | 94.5 |
| Black-and-white | 88.5 |
| Snapshot | 86 |
| Photography | 75.7 |
| Monochrome | 74.2 |
| Sitting | 72 |
| Stock photography | 69.8 |
| Furniture | 61.5 |
| Monochrome photography | 57.8 |
| Child | 52 |
| Chair | 51.3 |
| Street | 51.1 |

Microsoft

created on 2019-11-15

| Tag | Confidence |
| --- | --- |
| text | 99.1 |
| black and white | 95 |
| cart | 85.4 |
| monochrome | 82.9 |
| wheel | 80.7 |
| vehicle | 60.7 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 45-63 |
| Gender | Male, 90.9% |
| Confused | 0.2% |
| Surprised | 0.1% |
| Calm | 0.3% |
| Fear | 0.1% |
| Disgusted | 0.2% |
| Happy | 0.1% |
| Sad | 0.2% |
| Angry | 98.9% |

Feature analysis

Amazon

Person

| Feature | Confidence |
| --- | --- |
| Person | 90.7% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 69.7% |
| cars vehicles | 17.2% |
| food drinks | 7.7% |
| beaches seaside | 2.4% |