Machine Generated Data

Tags

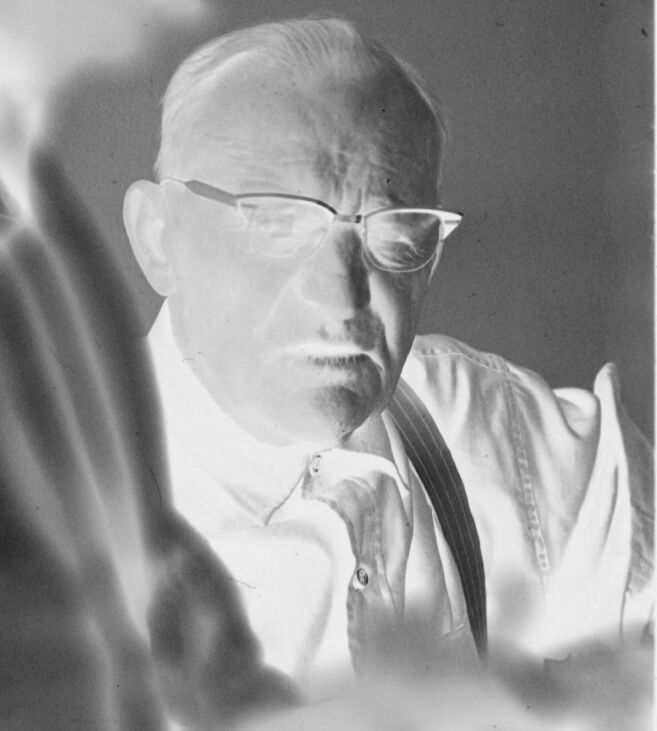

Amazon

created on 2019-08-09

Clarifai

created on 2019-08-09

| Tag | Confidence |
| --- | --- |
| people | 99.7 |
| portrait | 99 |
| one | 98.9 |
| man | 98.9 |
| adult | 98.2 |
| monochrome | 97.9 |
| leader | 94 |
| elderly | 89.6 |
| facial expression | 89.2 |
| bald | 88.2 |
| administration | 87.4 |
| wear | 85.7 |
| indoors | 84.8 |
| eyewear | 84.5 |
| profile | 84.3 |
| face | 81 |
| black and white | 80.6 |
| old | 80.6 |
| music | 79.3 |
| concentration | 78.2 |

Imagga

created on 2019-08-09

| Tag | Confidence |
| --- | --- |
| portrait | 35.6 |
| person | 32.1 |
| man | 30.9 |
| people | 28.5 |
| face | 27 |
| child | 26 |
| male | 24.7 |
| adult | 24 |
| black | 19.9 |
| happy | 18.2 |
| head | 17.7 |
| looking | 17.6 |
| hair | 17.5 |
| love | 17.4 |
| baby | 17.3 |
| smile | 15.7 |
| attractive | 15.4 |
| human | 15 |
| hand | 13.7 |
| expression | 13.7 |
| neonate | 13.7 |
| one | 13.5 |
| blond | 13.1 |
| smiling | 13 |
| lab coat | 12.8 |
| pretty | 12.6 |
| senior | 12.2 |
| eyes | 12.1 |
| elderly | 11.5 |
| negative | 11.5 |
| mature | 11.2 |
| coat | 10.9 |
| model | 10.9 |
| cute | 10.8 |
| family | 10.7 |
| men | 10.3 |
| youth | 10.2 |
| parent | 10.2 |
| lifestyle | 10.1 |
| girls | 10 |
| handsome | 9.8 |
| close | 9.7 |
| boy | 9.6 |
| mother | 9.5 |
| emotion | 9.2 |
| film | 9.1 |
| leisure | 9.1 |
| teenager | 9.1 |
| old | 9.1 |
| grandfather | 8.7 |
| mask | 8.7 |
| women | 8.7 |
| serious | 8.6 |
| hand glass | 8.6 |
| relax | 8.4 |
| lips | 8.3 |
| clothing | 8.2 |
| couple | 7.8 |
| happiness | 7.8 |
| professional | 7.8 |
| retired | 7.8 |
| sad | 7.7 |
| life | 7.7 |
| health | 7.6 |
| casual | 7.6 |
| fashion | 7.5 |
| finger | 7.5 |
| fun | 7.5 |
| holding | 7.4 |
| phone | 7.4 |
| lady | 7.3 |
| garment | 7.3 |
| student | 7.3 |
| sexy | 7.2 |
| microscope | 7.2 |
| body | 7.2 |
| eye | 7.2 |
| work | 7.1 |
| businessman | 7.1 |
| photographic paper | 7.1 |
| covering | 7 |

Google

created on 2019-08-09

| Tag | Confidence |
| --- | --- |
| Photograph | 97.3 |
| White | 95.5 |
| Black-and-white | 89.5 |
| Snapshot | 85.9 |
| Photography | 78 |
| Stock photography | 77.7 |
| Monochrome photography | 76.2 |
| Portrait | 72.2 |
| Monochrome | 70.2 |
| Self-portrait | 58.3 |
| Smoking | 50.7 |
| Art | 50.2 |

Microsoft

created on 2019-08-09

| Tag | Confidence |
| --- | --- |
| man | 99.5 |
| human face | 98.9 |
| person | 95.6 |
| glasses | 92.4 |
| portrait | 90 |
| text | 88.7 |
| black and white | 85.5 |
| clothing | 68.2 |
| human beard | 63.6 |
| wrinkle | 56 |
| staring | 23 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 35-51 |
| Gender | Male, 78.6% |
| Happy | 0.1% |
| Sad | 94.5% |
| Angry | 1.6% |
| Fear | 1.4% |
| Confused | 1.2% |
| Calm | 0.7% |
| Disgusted | 0.4% |
| Surprised | 0.1% |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 98.8% |

Captions

Microsoft

created on 2019-08-09

| Caption | Confidence |
| --- | --- |
| a man in glasses looking at the camera | 91.7% |
| a man wearing glasses and looking at the camera | 89.5% |
| a man wearing glasses | 89.4% |

Text analysis

Amazon

Satrr