Machine Generated Data

Tags

Amazon

created on 2019-08-09

Clarifai

created on 2019-08-09

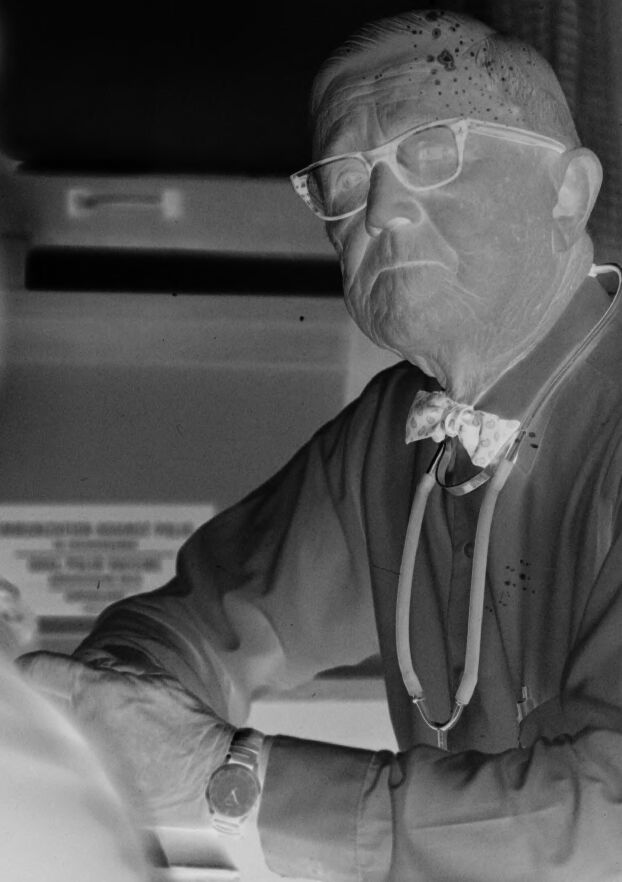

| Tag | Confidence (%) |
| --- | --- |
| people | 99.3 |
| portrait | 98.6 |
| monochrome | 98.4 |
| man | 98.4 |
| adult | 98.2 |
| one | 97.4 |
| indoors | 92.1 |
| facial expression | 87.8 |
| old | 85.3 |
| elderly | 84.7 |
| wear | 83.3 |
| elder | 82.7 |
| black and white | 82.3 |
| business | 81.3 |
| leader | 79.4 |
| concentration | 79 |
| actor | 78.6 |
| face | 78 |
| street | 76.4 |
| lid | 75.6 |

Imagga

created on 2019-08-09

| Tag | Confidence (%) |
| --- | --- |
| bow tie | 60.9 |
| necktie | 48.7 |
| man | 45.1 |
| male | 36.3 |
| portrait | 33.7 |
| person | 33.1 |
| face | 32 |
| people | 29 |
| garment | 28 |
| adult | 24 |
| businessman | 23.8 |
| looking | 21.6 |
| black | 20.4 |
| clothing | 20.4 |
| business | 20.1 |
| professional | 17.9 |
| happy | 17.6 |
| men | 17.2 |
| tie | 17.1 |
| head | 16.8 |
| look | 16.7 |
| expression | 16.2 |
| suit | 15.4 |
| attractive | 15.4 |
| guy | 15.4 |
| smiling | 15.2 |
| handsome | 15.2 |
| mask | 14.3 |
| serious | 14.3 |
| senior | 14.1 |
| glasses | 13.9 |
| hair | 13.5 |
| work | 13.4 |
| eyes | 12.9 |
| smile | 12.8 |
| surgeon | 12 |
| old | 11.9 |
| fashion | 11.3 |
| human | 11.3 |
| office | 11.2 |
| one | 11.2 |
| mature | 11.2 |
| close | 10.8 |
| eye | 10.7 |
| hand | 10.6 |
| coat | 10.6 |
| success | 10.5 |
| corporate | 10.3 |
| hat | 10.2 |
| covering | 10.2 |
| medical | 9.7 |
| mustache | 9.7 |
| happiness | 9.4 |
| model | 9.3 |
| casual | 9.3 |
| student | 9.3 |
| phone | 9.2 |
| confident | 9.1 |
| dark | 8.4 |
| technology | 8.2 |
| telephone | 8.1 |
| sitting | 7.7 |
| studio | 7.6 |
| executive | 7.6 |
| doctor | 7.5 |
| worker | 7.5 |
| jacket | 7.3 |
| successful | 7.3 |
| cheerful | 7.3 |
| teenager | 7.3 |
| stylish | 7.2 |
| sexy | 7.2 |
| call | 7.1 |

Google

created on 2019-08-09

| Tag | Confidence (%) |
| --- | --- |
| Photograph | 96.3 |
| Black | 94.5 |
| Black-and-white | 84.2 |
| Stock photography | 79.6 |
| Photography | 74.8 |
| Monochrome | 67.6 |
| Monochrome photography | 64.7 |
| Gentleman | 54.5 |
| Style | 52.5 |

Microsoft

created on 2019-08-09

| Tag | Confidence (%) |
| --- | --- |
| person | 99.9 |
| man | 99 |
| human face | 97.4 |
| indoor | 88.6 |
| glasses | 84.9 |
| black and white | 81.2 |
| statue | 74.6 |
| clothing | 67 |
| text | 65 |
| portrait | 58 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 49-67 |
| Gender | Male, 53.1% |
| Fear | 1% |
| Happy | 10.4% |
| Calm | 74.1% |
| Surprised | 5.9% |
| Angry | 2% |
| Disgusted | 2% |
| Confused | 2.3% |
| Sad | 2.2% |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| interior objects | 63.1% |
| food drinks | 18.1% |
| paintings art | 16.2% |

Captions

Microsoft

created on 2019-08-09

| Caption | Confidence |
| --- | --- |
| a man wearing a suit and tie | 96.8% |
| a man wearing a suit and tie looking at the camera | 95.7% |
| a man wearing glasses | 82.2% |

Text analysis

Amazon

1

mi

00l

40010 00l

40010