Machine Generated Data

Tags

Amazon

created on 2019-08-09

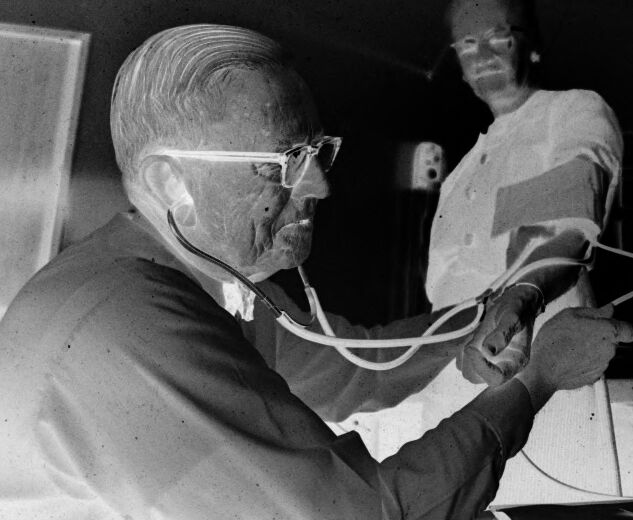

| Tag | Confidence (%) |
| --- | --- |
| Human | 99.5 |
| Person | 99.5 |
| Clinic | 97.2 |
| Person | 79.8 |
| Hospital | 77.1 |
| Operating Theatre | 77.1 |
| Face | 66 |
| Doctor | 65.8 |
| Display | 63.6 |
| Screen | 63.6 |
| Monitor | 63.6 |
| LCD Screen | 63.6 |
| Electronics | 63.6 |
| Indoors | 56.8 |
| Finger | 55.2 |

Clarifai

created on 2019-08-09

Imagga

created on 2019-08-09

| Tag | Confidence (%) |
| --- | --- |
| man | 49 |
| person | 34.3 |
| male | 32 |
| people | 28.4 |
| hairdresser | 23.7 |
| senior | 22.5 |
| adult | 22.4 |
| home | 19.9 |
| smiling | 19.5 |
| indoors | 18.4 |
| happy | 17.5 |
| room | 16.8 |
| sitting | 16.3 |
| working | 15.9 |
| work | 15.7 |
| couple | 15.7 |
| computer | 15.5 |
| men | 15.4 |
| grandfather | 15.2 |
| patient | 14.3 |
| chair | 13.9 |
| business | 13.4 |
| lifestyle | 13 |
| worker | 12.9 |
| barbershop | 12.8 |
| professional | 12.5 |
| shop | 12.5 |
| elderly | 12.4 |
| office | 12.3 |
| together | 12.3 |
| mature | 12.1 |
| occupation | 11.9 |
| laptop | 11.9 |
| portrait | 11.6 |
| smile | 11.4 |
| family | 10.7 |
| retired | 10.7 |
| job | 10.6 |
| cheerful | 10.6 |
| old | 10.4 |
| adults | 10.4 |
| love | 10.3 |
| happiness | 10.2 |
| device | 10 |
| interior | 9.7 |
| couch | 9.7 |
| retirement | 9.6 |
| sick person | 9.3 |
| case | 9.2 |
| attractive | 9.1 |
| black | 9 |
| 40s | 8.8 |
| married | 8.6 |
| talking | 8.5 |
| face | 8.5 |
| casual | 8.5 |
| two | 8.5 |
| glasses | 8.3 |
| disk jockey | 8.3 |
| holding | 8.3 |
| 20s | 8.2 |
| fun | 8.2 |
| industrial | 8.2 |
| teacher | 8.1 |
| aged | 8.1 |
| suit | 8.1 |
| women | 7.9 |
| table | 7.8 |
| mercantile establishment | 7.7 |
| repair | 7.7 |
| husband | 7.6 |
| safety | 7.4 |
| inside | 7.4 |
| light | 7.3 |
| looking | 7.2 |

Google

created on 2019-08-09

| Tag | Confidence (%) |
| --- | --- |
| Photograph | 95.8 |
| Black-and-white | 78.1 |
| Stock photography | 73.9 |
| Photography | 72.5 |
| Monochrome | 64.3 |
| Monochrome photography | 57.8 |

Microsoft

created on 2019-08-09

| Tag | Confidence (%) |
| --- | --- |
| person | 99.2 |
| man | 99.1 |
| indoor | 92.3 |
| black and white | 91.1 |
| human face | 87.2 |
| text | 84.4 |
| clothing | 82.4 |
| glasses | 58.6 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 39-57 |
| Gender | Male, 75% |
| Surprised | 0.7% |
| Calm | 11.4% |
| Disgusted | 0.4% |
| Happy | 0.7% |
| Sad | 81.1% |
| Angry | 1.9% |
| Fear | 1.5% |
| Confused | 2.4% |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| food drinks | 90.6% |
| people portraits | 5.9% |
| events parties | 2.9% |

Captions

Microsoft

created on 2019-08-09

| Caption | Confidence |
| --- | --- |
| a man sitting in a chair | 79.5% |
| a man sitting in a room | 79.4% |
| a man holding a laptop | 59.9% |