Machine Generated Data

Tags

Amazon

created on 2023-10-25

| Electronics | 99.4 |
| Screen | 99.4 |
| Computer Hardware | 98.8 |
| Hardware | 98.8 |
| Person | 97.3 |
| Person | 97.3 |
| Person | 97 |
| Person | 96.8 |
| Person | 96.5 |
| Person | 96.4 |
| Person | 96.4 |
| Person | 95.4 |
| Person | 95.3 |
| Person | 95.1 |
| Person | 95.1 |
| Person | 94.9 |
| Person | 94.9 |
| Person | 94.7 |
| Person | 94.3 |
| Person | 93.4 |
| Person | 92.7 |
| Person | 92.6 |
| Person | 92.5 |
| Person | 92.2 |
| Person | 91.1 |
| Adult | 91.1 |
| Male | 91.1 |
| Man | 91.1 |
| Person | 90.9 |
| Person | 90.8 |
| Person | 89.4 |
| Person | 89.2 |
| Person | 88.2 |
| Person | 88.1 |
| Baby | 88.1 |
| Clothing | 87 |
| Formal Wear | 87 |
| Suit | 87 |
| Person | 86.9 |
| Baby | 86.9 |
| Person | 86.8 |
| Person | 86.2 |
| Person | 85.7 |
| Person | 85.7 |
| Person | 83.5 |
| Baby | 83.5 |
| Person | 83.2 |
| Person | 83 |
| Person | 82 |
| Person | 80.4 |
| TV | 80.2 |
| Suit | 79.4 |
| Person | 78 |
| Person | 75.1 |
| Face | 74.6 |
| Head | 74.6 |
| Person | 70.2 |
| Art | 70.2 |
| Collage | 70.2 |
| Person | 67.5 |
| Animal | 66.3 |
| Canine | 66.3 |
| Dog | 66.3 |
| Mammal | 66.3 |
| Pet | 66.3 |
| Monitor | 64.2 |
| Indoors | 61.1 |
| Person | 60.8 |
| Shop | 60.2 |
| Photographic Film | 55.8 |

Clarifai

created on 2019-02-18

Imagga

created on 2019-02-18

| case | 100 |
| shop | 23 |
| architecture | 16.4 |
| restaurant | 16 |
| building | 15.2 |
| modern | 14 |
| design | 12.9 |
| cafeteria | 12.7 |
| furniture | 12.6 |
| vending machine | 12.2 |
| mercantile establishment | 11.9 |
| machine | 11.7 |
| city | 11.6 |
| kitchen | 11.6 |
| interior | 11.5 |
| table | 11.2 |
| glass | 11.2 |
| shelf | 10.7 |
| light | 10.7 |
| night | 10.6 |
| bottle | 10.6 |
| food | 10.4 |
| home | 10.4 |
| house | 10 |
| slot machine | 9.8 |
| business | 9.7 |
| technology | 9.6 |
| urban | 9.6 |
| structure | 9.5 |
| store | 9.4 |
| colorful | 9.3 |
| wood | 9.2 |
| counter | 9 |
| window | 8.7 |
| party | 8.6 |
| culture | 8.5 |
| tobacco shop | 8.4 |
| place of business | 7.9 |
| film | 7.7 |
| drink | 7.5 |
| traditional | 7.5 |
| lights | 7.4 |
| fruit | 7.3 |
| object | 7.3 |
| lifestyle | 7.2 |
| travel | 7 |
| indoors | 7 |

Google

created on 2019-02-18

| Photograph | 96.5 |
| Picture frame | 87 |
| Collection | 86.4 |
| Display case | 77.6 |
| Photography | 74.8 |
| Photographic paper | 63.9 |
| Black-and-white | 56.4 |

Microsoft

created on 2019-02-18

| wall | 95.6 |
| old | 47.3 |
| collection | 47.3 |
| black and white | 33.8 |
| art | 19.9 |
| toy | 11.3 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Age | 38-46 |
| Gender | Male, 99.5% |
| Calm | 87.2% |
| Surprised | 6.6% |
| Fear | 6% |
| Sad | 3.4% |
| Disgusted | 3.1% |
| Happy | 2.7% |
| Angry | 1.6% |
| Confused | 0.9% |

AWS Rekognition

| Age | 37-45 |
| Gender | Female, 79.2% |
| Calm | 63.4% |
| Happy | 22.5% |
| Surprised | 7.2% |
| Fear | 6.3% |
| Sad | 3.8% |
| Angry | 2.9% |
| Confused | 2.7% |
| Disgusted | 1.6% |

AWS Rekognition

| Age | 41-49 |
| Gender | Male, 98.8% |
| Sad | 89.9% |
| Calm | 29.3% |
| Disgusted | 11.2% |
| Surprised | 8.8% |
| Confused | 7.9% |
| Fear | 6.9% |
| Angry | 1.5% |
| Happy | 1.3% |

AWS Rekognition

| Age | 35-43 |
| Gender | Male, 99.4% |
| Calm | 64.1% |
| Sad | 41.1% |
| Surprised | 7.4% |
| Fear | 6.4% |
| Disgusted | 2.5% |
| Happy | 2.3% |
| Angry | 2% |
| Confused | 1.3% |

AWS Rekognition

| Age | 23-31 |
| Gender | Male, 70.4% |
| Happy | 55% |
| Calm | 11.6% |
| Fear | 9% |
| Surprised | 7.9% |
| Disgusted | 6.9% |
| Angry | 6.3% |
| Sad | 6.1% |
| Confused | 2.7% |

AWS Rekognition

| Age | 18-24 |
| Gender | Male, 98.1% |
| Calm | 94.1% |
| Surprised | 7.3% |
| Fear | 6% |
| Sad | 2.6% |
| Disgusted | 0.8% |
| Confused | 0.7% |
| Angry | 0.6% |
| Happy | 0.3% |

AWS Rekognition

| Age | 23-31 |
| Gender | Female, 79.7% |
| Sad | 100% |
| Surprised | 6.5% |
| Fear | 6.1% |
| Happy | 3% |
| Confused | 1% |
| Disgusted | 0.9% |
| Angry | 0.8% |
| Calm | 0.5% |

AWS Rekognition

| Age | 21-29 |
| Gender | Male, 96.6% |
| Calm | 79.4% |
| Surprised | 8.1% |
| Fear | 6.5% |
| Happy | 6.1% |
| Sad | 3.6% |
| Disgusted | 2.2% |
| Confused | 1.9% |
| Angry | 1.9% |

AWS Rekognition

| Age | 31-41 |
| Gender | Male, 82% |
| Disgusted | 35.4% |
| Calm | 22.5% |
| Sad | 15.3% |
| Confused | 9.8% |
| Happy | 8.6% |
| Surprised | 7.9% |
| Fear | 6.9% |
| Angry | 3.4% |

AWS Rekognition

| Age | 26-36 |
| Gender | Male, 98.3% |
| Calm | 92% |
| Surprised | 6.4% |
| Fear | 6% |
| Sad | 3.2% |
| Angry | 2.9% |
| Happy | 0.6% |
| Confused | 0.5% |
| Disgusted | 0.4% |

AWS Rekognition

| Age | 18-24 |
| Gender | Male, 91.7% |
| Calm | 95.1% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Sad | 3.1% |
| Confused | 1.5% |
| Happy | 0.4% |
| Disgusted | 0.1% |
| Angry | 0.1% |

AWS Rekognition

| Age | 56-64 |
| Gender | Male, 98.8% |
| Calm | 57.4% |
| Confused | 30.7% |
| Surprised | 6.8% |
| Fear | 6% |
| Sad | 4.9% |
| Angry | 2.9% |
| Disgusted | 1.1% |
| Happy | 0.4% |

Feature analysis

Amazon

| Person | 97.3% |
| Person | 97.3% |
| Person | 97% |
| Person | 96.8% |
| Person | 96.5% |
| Person | 96.4% |
| Person | 96.4% |
| Person | 95.4% |
| Person | 95.3% |
| Person | 95.1% |
| Person | 95.1% |
| Person | 94.9% |
| Person | 94.9% |
| Person | 94.7% |
| Person | 94.3% |
| Person | 93.4% |
| Person | 92.7% |
| Person | 92.6% |
| Person | 92.5% |
| Person | 92.2% |
| Person | 91.1% |
| Person | 90.9% |
| Person | 90.8% |
| Person | 89.4% |
| Person | 89.2% |
| Person | 88.2% |
| Person | 88.1% |
| Person | 86.9% |
| Person | 86.8% |
| Person | 86.2% |
| Person | 85.7% |
| Person | 85.7% |
| Person | 83.5% |
| Person | 83.2% |
| Person | 83% |
| Person | 82% |
| Person | 80.4% |
| Person | 78% |
| Person | 75.1% |
| Person | 70.2% |
| Person | 67.5% |
| Person | 60.8% |
| Adult | 91.1% |
| Male | 91.1% |
| Man | 91.1% |
| Baby | 88.1% |
| Baby | 86.9% |
| Baby | 83.5% |
| Suit | 87% |
| Suit | 79.4% |
| Dog | 66.3% |
| Monitor | 64.2% |

Categories

Imagga

| interior objects | 96% |
| text visuals | 3.5% |

Captions

Microsoft

created on 2019-02-18

| an old photo of a kitchen | 57.7% |
| an old photo of a living room | 48% |

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-10

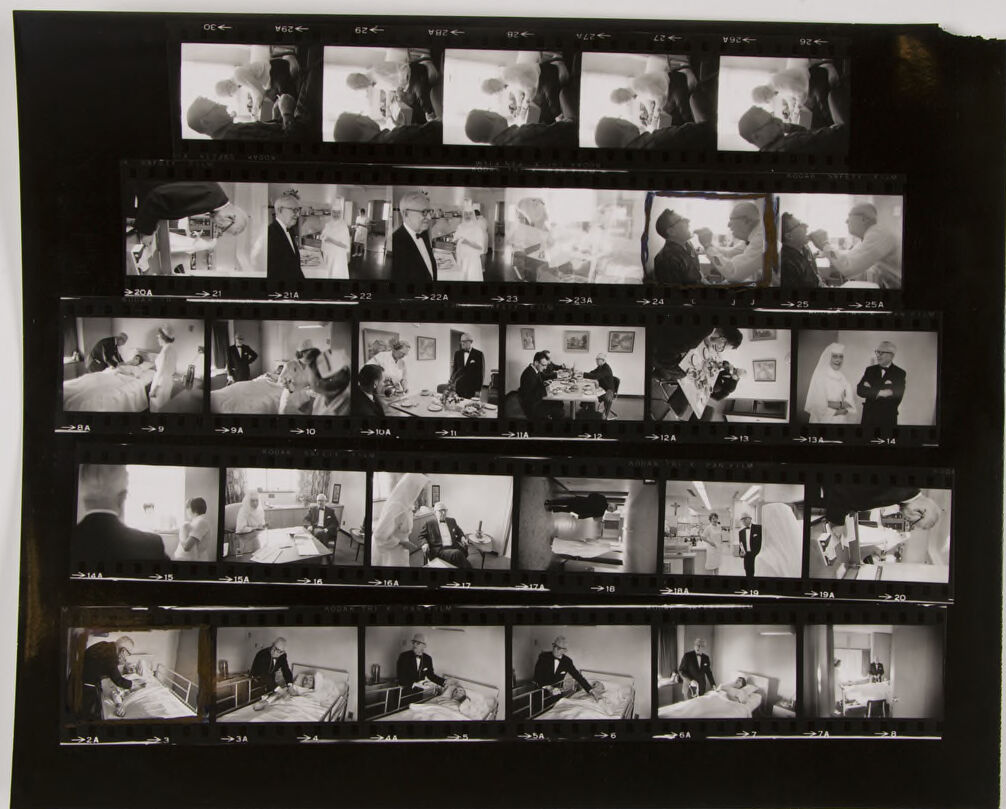

This image is a contact sheet of black and white film negatives. The film strip is arranged in rows of individual frames, each capturing a different moment in a sequence. The scenes appear to be documentary-style photos taken in various indoor settings. Some frames appear to be of a medical nature, with individuals wearing medical attire tending to patients in beds, while other frames depict what looks like social gatherings with people in formal wear. The quality of the negatives suggests the images were taken some time ago, possibly in the mid-20th century.

Created by gemini-2.0-flash on 2025-05-10

The image shows a contact sheet of black and white photographs, arranged in rows and columns. The photographs appear to depict scenes of medical care and a man in a suit, possibly at a formal event.

Some images show doctor-patient interactions, including a doctor examining a patient. Others show a man in a suit, sometimes in the presence of someone wearing medical attire, possibly a nurse. Still others show them at a dining table with two other people.

Overall, the contact sheet suggests a sequence of events or a photo story involving a medical setting and a formal event, with the same man in a suit appearing in both.

Text analysis

Amazon