Machine Generated Data

Tags

Amazon

created on 2023-10-25

Clarifai

created on 2019-02-18

Imagga

created on 2019-02-18

| Tag | Confidence |
|---|---|
| gas pump | 35 |
| pump | 29.6 |
| car | 25.9 |
| mechanical device | 21.2 |
| model t | 19.2 |
| city | 19.1 |
| urban | 14.8 |
| business | 14.6 |
| mechanism | 14.3 |
| travel | 14.1 |
| architecture | 14.1 |
| night | 13.3 |
| boat | 13.1 |
| device | 13 |
| motor vehicle | 12.7 |
| water | 12.7 |
| black | 12 |
| old | 11.8 |
| office | 11.7 |
| transportation | 11.6 |
| building | 11.4 |
| sky | 10.2 |
| light | 10 |
| vehicle | 9.9 |
| stall | 9.8 |
| monitor | 9.4 |
| electronic equipment | 9.3 |
| equipment | 9.3 |
| ship | 9.3 |
| structure | 9.2 |
| transport | 9.1 |
| container | 8.6 |
| tourism | 8.2 |
| computer | 8.1 |
| reflection | 8.1 |
| working | 7.9 |
| work | 7.8 |
| people | 7.8 |
| center | 7.7 |
| sign | 7.5 |
| ocean | 7.5 |
| tramway | 7.4 |
| street | 7.4 |

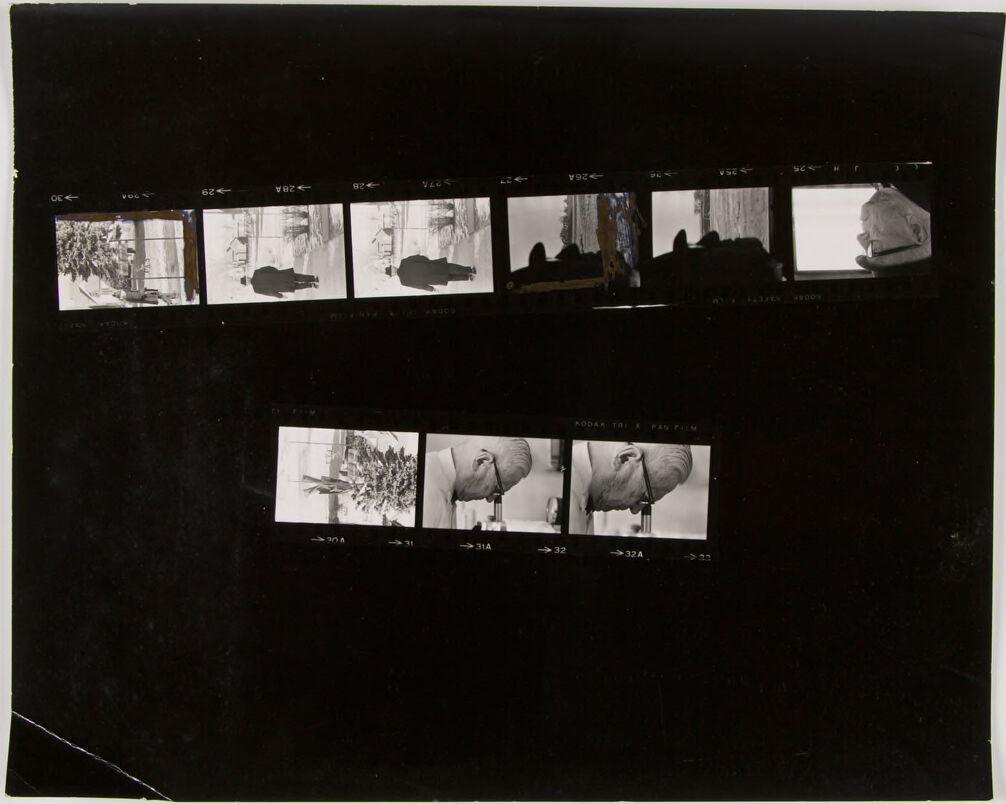

Google

created on 2019-02-18

| Tag | Confidence |
|---|---|
| Photograph | 96.9 |
| Black | 95.1 |
| Photographic paper | 90.7 |
| Photography | 73.8 |
| Room | 71.4 |
| Black-and-white | 68.3 |
| Rectangle | 64.3 |
| Photograph album | 61.9 |
| Picture frame | 60.3 |

Microsoft

created on 2019-02-18

| Tag | Confidence |
|---|---|
| black and white | 14.4 |
| abandoned | 9.5 |
| art | 6.2 |
| monochrome | 5 |
| exhibition | 5 |

Color Analysis

Face analysis

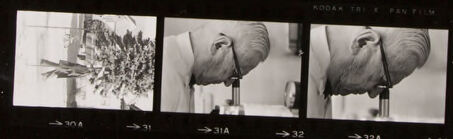

Amazon

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 68-78 |
| Gender | Male, 99.9% |
| Calm | 91% |
| Surprised | 6.8% |
| Fear | 6% |
| Sad | 3.8% |
| Happy | 1.2% |
| Angry | 0.8% |
| Confused | 0.8% |
| Disgusted | 0.7% |

Feature analysis

Categories

Imagga

| Category | Confidence |
|---|---|
| text visuals | 99.9% |

Captions

Microsoft

created on 2019-02-18

| Caption | Confidence |
|---|---|
| a screen shot of a television | 33.9% |
| a close up of a screen | 33.8% |
| an old television | 30.2% |

Text analysis

Amazon

PAN

29

32

RODAR

VLC

DE

92

RODAR THE F PAN T.ILM

F

YUC

T.ILM

>32A

THE

VOZ

V92

3308

TS

→32

→32 A

→31A

→

32

A

31A