Machine Generated Data

Tags

Amazon

created on 2023-10-25

Clarifai

created on 2019-02-18

Imagga

created on 2019-02-18

| Tag | Confidence |
| --- | --- |
| mug shot | 20.8 |
| photograph | 18.8 |
| representation | 17.2 |
| paper | 16.5 |
| child | 15.4 |
| people | 15.1 |
| portrait | 14.9 |
| money | 14.5 |
| face | 14.2 |
| happy | 13.2 |
| culture | 12.8 |
| old | 12.5 |
| business | 12.1 |
| person | 11.8 |
| frame | 11.8 |
| vintage | 11.6 |
| art | 11.4 |
| sculpture | 11.2 |
| creation | 11.1 |
| card | 10.9 |
| symbol | 10.8 |
| sign | 10.5 |
| happiness | 10.2 |
| design | 10.2 |
| smiling | 10.1 |
| head | 10.1 |
| cute | 10 |
| dress | 9.9 |
| currency | 9.9 |
| statue | 9.8 |
| bank | 9.2 |
| banking | 9.2 |
| man | 8.9 |
| envelope | 8.8 |
| decoration | 8.8 |
| golden | 8.6 |
| smile | 8.5 |
| finance | 8.4 |
| adult | 8.4 |
| clothing | 8.4 |
| holding | 8.3 |
| retro | 8.2 |
| new | 8.1 |
| antique | 7.9 |
| empty | 7.7 |
| religious | 7.7 |
| wall | 7.7 |
| doll | 7.6 |
| elegance | 7.6 |
| fun | 7.5 |
| traditional | 7.5 |
| savings | 7.5 |
| wealth | 7.2 |
| religion | 7.2 |
| holiday | 7.2 |
| childhood | 7.2 |
| kid | 7.1 |
| look | 7 |

Google

created on 2019-02-18

| Tag | Confidence |
| --- | --- |
| Photograph | 97 |
| Picture frame | 91.8 |
| Snapshot | 85.1 |
| Art | 69.5 |
| Room | 65.7 |
| Photography | 62.4 |
| Painting | 54.7 |

Color Analysis

Face analysis

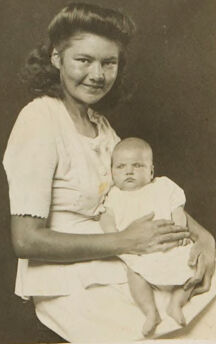

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 24-34 |
| Gender | Female, 99.9% |
| Happy | 97.9% |
| Surprised | 6.4% |
| Fear | 5.9% |
| Sad | 2.2% |
| Calm | 1.3% |
| Confused | 0.2% |
| Disgusted | 0.1% |
| Angry | 0.1% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 4-10 |
| Gender | Male, 99.9% |
| Calm | 78.5% |
| Angry | 18% |
| Surprised | 6.5% |
| Fear | 6% |
| Sad | 2.4% |
| Confused | 1.3% |
| Happy | 0.4% |
| Disgusted | 0.4% |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 34 |
| Gender | Female |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Likely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Amazon

Person

| Feature | Confidence |
| --- | --- |
| Person | 98.7% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 99.9% |

Captions

Microsoft

created on 2019-02-18

| Caption | Confidence |
| --- | --- |
| a person in a white room | 50.7% |
| a person in a white room | 50.6% |
| an old photo of a person | 49% |