Machine Generated Data

Tags

Amazon

created on 2019-11-07

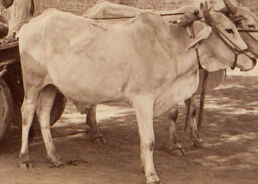

| Tag | Confidence (%) |
| --- | --- |
| Mammal | 99.6 |
| Animal | 99.6 |
| Bull | 99.6 |
| Horse | 96.9 |
| Person | 95.4 |
| Human | 95.4 |
| Cow | 94.8 |
| Cattle | 94.8 |
| Person | 94.6 |
| Wheel | 92.4 |
| Machine | 92.4 |
| Person | 91.1 |
| Person | 86.9 |
| Person | 85.4 |
| Ox | 82.1 |
| Person | 72.6 |
| Person | 67.3 |
| Horse | 66.3 |
| Person | 64 |
| Advertisement | 64 |
| Person | 63.5 |
| Horse | 61.9 |
| Poster | 60.1 |
| Collage | 56.2 |
| Spoke | 55.1 |
| Horse | 51.4 |

Clarifai

created on 2019-11-07

Imagga

created on 2019-11-07

Google

created on 2019-11-07

| Tag | Confidence (%) |
| --- | --- |
| Photograph | 95 |
| Bovine | 87.6 |
| oxcart | 75.3 |
| Picture frame | 68.4 |
| Adaptation | 67 |
| Room | 65.7 |
| Working animal | 61.1 |
| Livestock | 58.6 |
| Ox | 58.3 |
| Cart | 54.8 |
| Cow-goat family | 50.6 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 23-35 |
| Gender | Male, 50.3% |
| Sad | 49.8% |
| Confused | 49.5% |
| Fear | 49.8% |
| Angry | 49.6% |
| Surprised | 49.5% |
| Disgusted | 49.5% |
| Calm | 49.8% |
| Happy | 49.5% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 26-40 |
| Gender | Male, 50.5% |
| Confused | 49.5% |
| Calm | 50.5% |
| Happy | 49.5% |
| Fear | 49.5% |
| Angry | 49.5% |
| Surprised | 49.5% |
| Disgusted | 49.5% |
| Sad | 49.5% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 31-47 |
| Gender | Female, 50.2% |
| Calm | 49.6% |
| Sad | 50.3% |
| Fear | 49.6% |
| Angry | 49.5% |
| Disgusted | 49.5% |
| Happy | 49.5% |
| Confused | 49.6% |
| Surprised | 49.5% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 22-34 |
| Gender | Male, 50.1% |
| Happy | 49.6% |
| Fear | 49.5% |
| Disgusted | 49.5% |
| Calm | 49.6% |
| Sad | 50.2% |
| Confused | 49.5% |
| Angry | 49.5% |
| Surprised | 49.5% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 15-27 |
| Gender | Female, 50.4% |
| Angry | 49.6% |
| Confused | 49.5% |
| Fear | 50.1% |
| Happy | 49.5% |
| Surprised | 49.7% |
| Calm | 49.6% |
| Disgusted | 49.5% |
| Sad | 49.6% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 26-42 |
| Gender | Female, 50.3% |
| Calm | 49.8% |
| Fear | 49.5% |
| Sad | 49.9% |
| Angry | 49.5% |
| Surprised | 49.5% |
| Happy | 49.6% |
| Confused | 49.5% |
| Disgusted | 49.5% |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 100% |

Captions

Microsoft

created on 2019-11-07

| Caption | Confidence |
| --- | --- |
| a vintage photo of a sign | 63.1% |
| a vintage photo of a person | 53.2% |
| a vintage photo of some text | 53.1% |

Text analysis

Amazon

ark.

metivation

enetivation ark. od

enetivation

Soonghing

metivation arti

od

arti

glanghing

IS

enttivatiow Cart

enltivation eant

glanghing

IS

enttivatiow

Cart

enltivation

eant