Machine Generated Data

Tags

Amazon

created on 2021-12-14

Clarifai

created on 2023-10-22

Imagga

created on 2021-12-14

| Tag | Confidence |
| --- | ---: |
| man | 26.2 |
| person | 23.6 |
| uniform | 22.5 |
| male | 22 |
| people | 20.6 |
| clothing | 17.9 |
| war | 17.3 |
| weapon | 16.2 |
| black | 15.6 |
| device | 15.5 |
| military | 15.4 |
| astronaut | 15.3 |
| work | 14.9 |
| soldier | 14.7 |
| mask | 14.6 |
| men | 12.9 |
| human | 12.7 |
| equipment | 12.6 |
| instrument | 12.5 |
| adult | 11.7 |
| gun | 11.7 |
| nurse | 11.7 |
| worker | 11.7 |
| covering | 11.6 |
| hand | 11.4 |
| protection | 10.9 |
| military uniform | 10.7 |
| army | 10.7 |
| professional | 10.4 |
| safety | 10.1 |
| camouflage | 10.1 |
| music | 9.9 |
| art | 9.8 |
| battle | 9.8 |
| warrior | 9.8 |
| surgeon | 9.4 |
| armor | 9.4 |
| guy | 9.2 |
| portrait | 9.1 |
| paint | 9.1 |
| newspaper | 9 |
| metal | 8.8 |
| armed | 8.8 |
| body | 8.8 |
| mechanic | 8.8 |
| helmet | 8.8 |
| rock | 8.7 |
| engine | 8.7 |
| old | 8.4 |
| fashion | 8.3 |
| occupation | 8.2 |
| playing | 8.2 |
| danger | 8.2 |
| history | 8 |
| game | 8 |
| player | 8 |
| medical | 7.9 |
| product | 7.9 |
| forces | 7.9 |
| conflict | 7.8 |
| face | 7.8 |
| model | 7.8 |
| play | 7.8 |
| doctor | 7.5 |
| consumer goods | 7.5 |
| fun | 7.5 |
| technology | 7.4 |
| hospital | 7.4 |
| vehicle | 7.4 |
| business | 7.3 |
| breastplate | 7.2 |
| megaphone | 7.1 |
| job | 7.1 |
| working | 7.1 |
| musical instrument | 7.1 |
| medicine | 7 |

Google

created on 2021-12-14

| Tag | Confidence |
| --- | ---: |
| Cap | 89.3 |
| Hat | 88.1 |
| Headgear | 82.1 |
| Art | 74.6 |
| Motor vehicle | 74.6 |
| Snapshot | 74.3 |
| Vintage clothing | 73.6 |
| Monochrome | 69.4 |
| Stock photography | 66.9 |
| Military person | 65.7 |
| Non-commissioned officer | 64.2 |
| Illustration | 63.4 |
| Helmet | 61.8 |
| Personal protective equipment | 61.5 |
| Monochrome photography | 60.6 |
| Baseball cap | 59.8 |
| History | 59.4 |
| Photo caption | 58.1 |
| Vintage advertisement | 57.2 |
| Fedora | 57.2 |

Microsoft

created on 2021-12-14

| Tag | Confidence |
| --- | ---: |
| person | 99.3 |
| text | 96.4 |
| outdoor | 92 |
| black and white | 80.4 |
| clothing | 74.6 |

Color Analysis

Face analysis

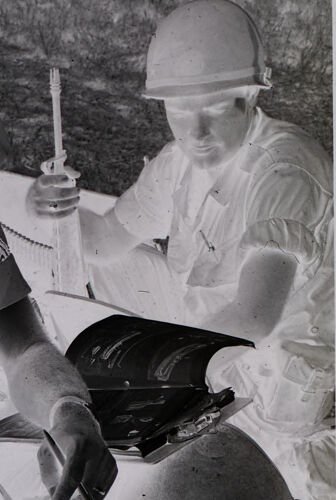

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 23-37 |
| Gender | Male, 85.1% |
| Calm | 99.3% |
| Surprised | 0.3% |
| Sad | 0.2% |
| Angry | 0.1% |
| Fear | 0.1% |
| Happy | 0.1% |
| Confused | 0.1% |
| Disgusted | 0% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 23-35 |
| Gender | Male, 79.1% |
| Calm | 87.2% |
| Fear | 4.9% |
| Angry | 4.3% |
| Surprised | 1.5% |
| Happy | 0.9% |
| Sad | 0.7% |
| Disgusted | 0.4% |
| Confused | 0.2% |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | ---: |
| paintings art | 62.9% |
| people portraits | 21.1% |
| pets animals | 11.9% |
| food drinks | 1.1% |

Captions

Microsoft

created by unknown on 2021-12-14

| Caption | Confidence |
| --- | ---: |
| a group of people sitting around a baseball field | 28.4% |
| a group of people sitting around a baseball hat | 28.3% |
| a group of people around each other | 28.2% |

Text analysis

Amazon

ALFA

S