Machine Generated Data

Face analysis

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 26-43 |
| Gender | Male, 94.8% |
| Disgusted | 1.2% |
| Happy | 0.6% |
| Calm | 33% |
| Sad | 54.3% |
| Angry | 4.8% |
| Confused | 4.5% |
| Surprised | 1.5% |

Feature analysis

Amazon

| Helmet | 97.9% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| pets animals | 46.3% |
| paintings art | 36.2% |
| people portraits | 16.8% |

Captions

Microsoft

Created by unknown on 2019-03-22

| a close up of a person wearing a hat | 72.3% |

Clarifai

Created by general-english-image-caption-blip on 2025-05-16

| a photograph of a man in a helmet and helmet with a helmet on | -100% |

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-05-27

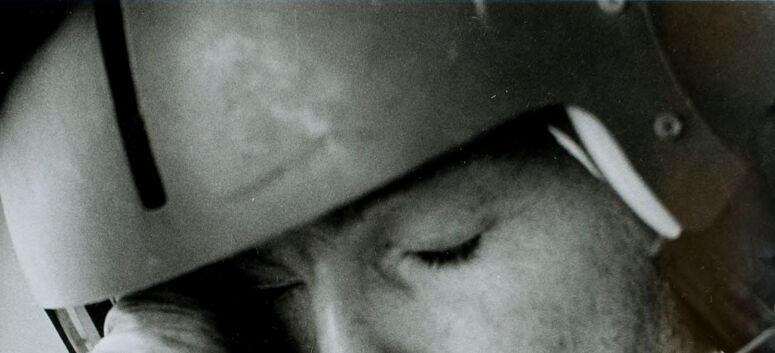

The image is a black-and-white photograph of a man wearing a helmet and a jacket, with his eyes closed. The man is the main subject of the image, and he appears to be in a state of relaxation or sleep.

- The man is wearing a helmet that covers his head and face, with a strap under his chin to secure it in place.
  - The helmet has a distinctive shape, with a curved top and a flat bottom.
  - It appears to be made of a hard material, possibly plastic or metal.
- The man is also wearing a jacket that covers his torso and arms.
  - The jacket is dark-colored and has a collar that is turned up around his neck.
  - It appears to be made of a thick, heavy material, possibly leather or denim.
- The man's eyes are closed, and his head is tilted slightly to one side.
  - His facial expression is calm and relaxed, with no visible signs of stress or tension.
  - His mouth is slightly open, and his lips are pursed as if he is breathing deeply.
- The background of the image is out of focus, but it appears to be a plain wall or surface.
  - There are no other objects or people visible in the background.
  - The overall atmosphere of the image is one of quiet contemplation, with the man appearing to be lost in thought or meditation.

In summary, the image is a close-up photograph of a man wearing a helmet and a jacket, with his eyes closed and a calm expression on his face. The background is out of focus, and the overall atmosphere of the image is one of relaxation and contemplation.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-05-27

The image is a black-and-white photograph of a man wearing a helmet and oxygen mask, with his eyes closed. The man's face is the primary focus of the image, and he appears to be in a state of relaxation or concentration.

Key Features:

- Helmet: The man is wearing a helmet that covers his head and ears, suggesting that he may be engaged in an activity that requires protective gear.

- Oxygen Mask: The man has an oxygen mask covering his nose and mouth, which could indicate that he is in a high-altitude environment or engaging in an activity that requires supplemental oxygen.

- Closed Eyes: The man's eyes are closed, which may suggest that he is resting, meditating, or focusing on a specific task.

- Facial Expression: The man's facial expression is neutral, with no visible signs of stress or discomfort.

Context:

- Activity: Based on the man's attire and equipment, it is likely that he is engaged in an activity such as flying, skydiving, or scuba diving.

- Environment: The background of the image is not clearly visible, but it appears to be a dark or shadowy area, which could suggest that the man is in a confined space or a low-light environment.

Overall Impression:

- Serene: The image conveys a sense of serenity and calmness, as the man appears to be relaxed and focused.

- Professional: The man's attire and equipment suggest that he is a professional or experienced individual in his field.

- Mysterious: The image raises questions about the man's identity, his activity, and the context in which the photograph was taken.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-05-27

The image is a black-and-white photograph of a man who appears to be a pilot. He is wearing a helmet with a visor and a headset with a microphone. The man's eyes are closed, and he is holding his left ear with his left hand, which is covered in a white glove. The headset is connected to a microphone on the right side of his face. The man's face is slightly tilted to the right, and his mouth is slightly open. The background is dark, and the image has a slightly blurry effect.

Created by amazon.nova-pro-v1:0 on 2025-05-27

A black-and-white photo of a man wearing a helmet with a visor and a microphone with a strap around his head. He is wearing a pair of gloves and a uniform. He is holding his earphone with his hand, which is probably a headset. He is probably talking to someone and seems to be listening. He is closing his eyes and looking down.

Google Gemini

Created by gemini-2.0-flash on 2025-05-22

The black and white image shows a close-up of a person's face wearing a helmet and what appears to be a respirator or some kind of mouth gear. The person has their eyes closed, and one hand, clad in a glove, is partially obscuring their face. The helmet is bulky, with a stripe running vertically down the side. The facial expression seems contemplative or weary, and the overall composition suggests a moment of quiet or introspection.

Created by gemini-2.0-flash-lite on 2025-05-22

Here's a description of the image:

Overall Impression:

The image is a close-up, black and white photograph of a person wearing what appears to be a pilot's or a similar type of helmet and gear. The shot is focused on the face, capturing a moment of introspective or perhaps weary concentration.

Key Features:

- Subject: The main subject is a person whose face is partially visible. They are wearing a helmet with a visor and communication gear attached, like the mouth piece of a microphone.

- Facial Expression: The person's eyes are closed, suggesting a moment of rest, concentration, or maybe even contemplation. The angle and lighting create some visible shadows.

- Details: The image shows texture of the skin, the fabric of the flight suit or uniform. The pilot is resting his head on his gloved hand.

- Composition: The composition is tight and focused on the person's face and upper body. The lighting creates contrast, with areas of light and shadow.

- Atmosphere: The black and white format and the close-up perspective give the image a sense of intimacy and seriousness.

Possible Interpretations:

Given the gear, the image might depict:

- A pilot or astronaut taking a moment to relax or gather themselves before a mission.

- A moment of reflection during or after a demanding flight.

- The quiet intensity required in a high-pressure profession.