Machine Generated Data

Tags

Amazon

created on 2019-04-05

Clarifai

created on 2018-04-19

Imagga

created on 2018-04-19

| Tag | Confidence |
| --- | --- |
| cathode-ray tube | 40.6 |
| tray | 33.7 |
| gas-discharge tube | 32.4 |
| container | 30.2 |
| receptacle | 27.3 |
| money | 27.2 |
| currency | 26 |
| vessel | 25 |
| tube | 23.7 |
| dollar | 22.2 |
| finance | 21.1 |
| cash | 21 |
| business | 18.2 |
| close | 17.7 |
| paper | 17.3 |
| electronic device | 16.2 |
| banking | 15.6 |
| hundred | 15.5 |
| bathtub | 15.3 |
| bank | 15.2 |
| us | 14.4 |
| bill | 14.2 |
| financial | 14.2 |
| one | 13.4 |
| dollars | 12.5 |
| wealth | 11.7 |
| device | 11.5 |
| exchange | 11.4 |
| savings | 11.2 |
| object | 11 |
| franklin | 10.8 |
| glass | 10.5 |
| tub | 10.4 |
| rich | 10.2 |
| decoration | 10.2 |
| closeup | 10.1 |
| vintage | 9.9 |
| old | 9.7 |
| art | 9.2 |
| gold | 9 |
| market | 8.9 |
| detail | 8.8 |
| symbol | 8.7 |
| bills | 8.7 |
| pay | 8.6 |
| note | 8.3 |
| religion | 8.1 |
| celebration | 8 |
| interior | 7.9 |
| funds | 7.8 |
| banknotes | 7.8 |
| golden | 7.7 |
| states | 7.7 |
| loan | 7.7 |
| notes | 7.7 |

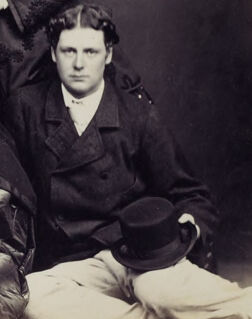

Google

created on 2018-04-19

| Tag | Confidence |
| --- | --- |
| vintage clothing | 69.2 |
| art | 52.8 |
| stock photography | 52 |

Color Analysis

Face analysis

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 26-44 |
| Gender | Male, 99.3% |
| Angry | 10.4% |
| Happy | 0.3% |
| Confused | 9.8% |
| Calm | 63.4% |
| Sad | 12.3% |
| Disgusted | 0.5% |
| Surprised | 3.3% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 30-47 |
| Gender | Male, 54.7% |
| Happy | 45.1% |
| Angry | 45.3% |
| Confused | 45.9% |
| Calm | 53.1% |
| Surprised | 45.4% |
| Disgusted | 45.1% |
| Sad | 45.2% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 26-43 |
| Gender | Female, 53.4% |
| Surprised | 45.5% |
| Confused | 45.7% |
| Disgusted | 46.5% |
| Angry | 46.8% |
| Sad | 47.5% |
| Calm | 47.5% |
| Happy | 45.4% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 26-43 |
| Gender | Female, 50% |
| Calm | 51% |
| Angry | 46.3% |
| Surprised | 45.3% |
| Disgusted | 45.6% |
| Confused | 45.5% |
| Sad | 45.9% |
| Happy | 45.5% |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 36 |
| Gender | Male |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 34 |
| Gender | Male |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 46 |
| Gender | Female |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

Captions

Microsoft

created on 2018-04-19

| Caption | Confidence |
| --- | --- |
| a group of baseball players standing on top of a book | 25.8% |
| a group of baseball players sitting on a book | 21.8% |
| a group of baseball players sitting on top of a book | 20.6% |

Anthropic Claude

Created by claude-3-haiku-20240307 on 2024-12-30

The image shows a group portrait of four individuals, three women and one man, dressed in Victorian-era clothing. The women are seated on a chaise lounge, adorned in elaborate dresses with ruffles and bows. The man is seated on the floor, dressed in a dark suit. The image appears to be a formal portrait, likely taken in a photography studio, with a dramatic backdrop and artistic touches such as musical notes drawn on the frame. Overall, the image conveys a sense of the formality and fashions of the late 19th century.

Created by claude-3-5-sonnet-20241022 on 2024-12-30

This is a Victorian-era photograph, likely from the mid-1800s. It shows a formal group portrait of four people in period clothing. The composition features two people standing and two in seated positions. One of the central figures wears an elaborate dark dress with a very full skirt typical of the time period. The others are dressed in dark coats and outerwear, including fur or similar winter hats. The photograph has the distinctive sepia tones and oval format common in 19th-century photography, and appears to be mounted in an album or book, with decorative markings around the edges. The formal poses and clothing styles are characteristic of Victorian portrait photography, where subjects would need to remain still for extended periods due to long exposure times.

Text analysis

Amazon