Microsoft

Created on 2020-04-24

Azure OpenAI

Created on 2024-12-04

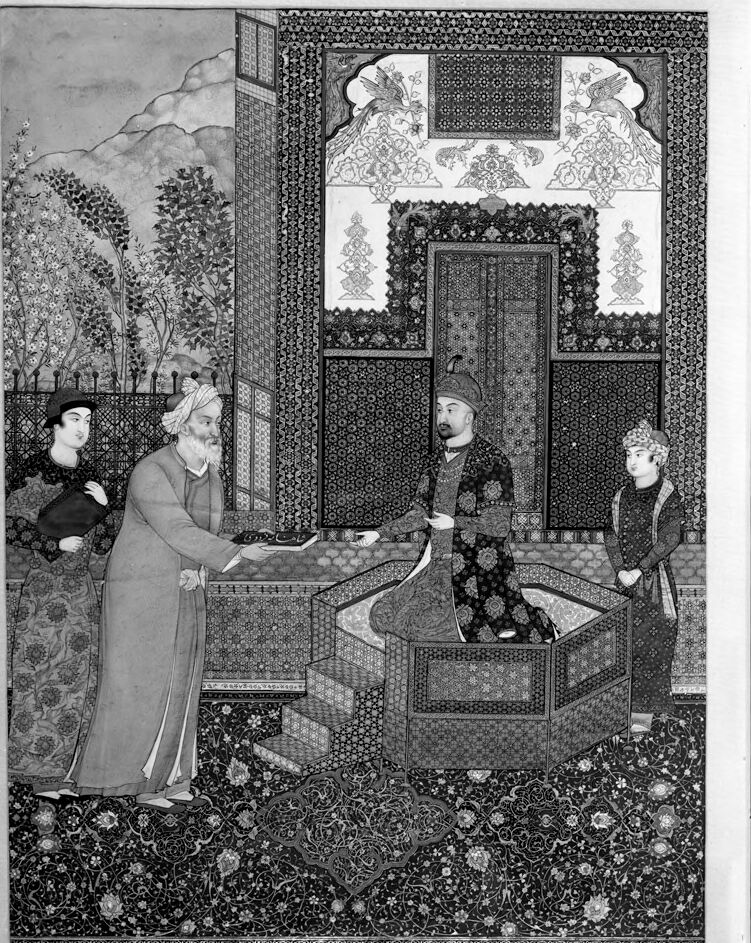

This is a black-and-white image of an intricately detailed artwork. It appears to depict a scene from a historical or mythological narrative, in a style reminiscent of Persian or Mughal miniature painting. The artwork is rich with patterns and ornamental designs. An open doorway reveals an outdoor setting with trees and a fence, suggesting a garden.

Several figures in traditional attire appear within a setting of ornate architectural elements, including a doorway and finely detailed wall and floor patterns. One figure seems to be holding an object in a hand extended toward the center. The central area of the image features what could be a fountain or raised platform with geometric designs.

The upper portion of the artwork contains decorative elements resembling a border, combined with vegetal motifs and another scene that might be part of a larger narrative. The meticulous detail in the clothing, architecture, and surrounding environment suggests that the work was created by a skilled artist, either to tell a story or to depict an important event or royal setting.

Anthropic Claude

Created on 2024-11-27

The image depicts an intricately detailed scene from what appears to be a Persian court. The central figure, dressed in ornate robes, is seated and surrounded by several other individuals in traditional attire. The background is filled with elaborate floral and geometric patterns, creating a visually striking composition. The overall style and subject matter suggest that this is an artwork or illustration from the Persian artistic tradition.

Meta Llama

Created on 2024-11-25

The image is a black-and-white illustration of a scene from a historical Persian manuscript, possibly from the 16th or 17th century. The scene is set in a courtyard, where a group of men has gathered around a central figure who appears to be seated on a throne or raised platform. The men wear traditional Persian attire, including turbans and robes, and appear to be engaged in conversation.

In the background, trees and a mountain range are visible, suggesting an outdoor setting. The overall atmosphere is one of elegance and refinement, with intricate patterns and designs adorning the walls and furniture.

The image is likely a reproduction or scan of an original manuscript page, given its high level of detail and craftsmanship. The use of black and white ink suggests that the original artwork was created using traditional techniques such as calligraphy or illumination.

Overall, the image offers a glimpse into the artistic and cultural heritage of Persia, likely during the Safavid period, which is known for its rich literary and artistic traditions.