Machine Generated Data

Tags

Amazon

created on 2023-10-06

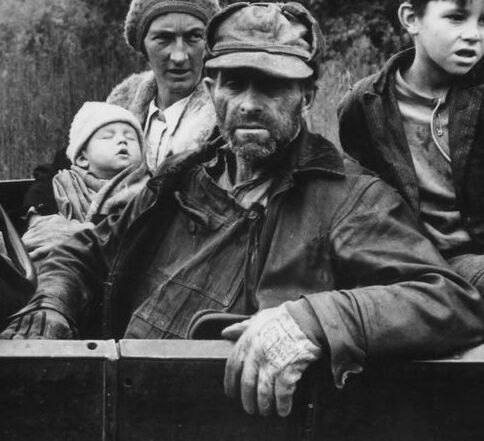

| Tag | Confidence |
| --- | --- |
| Face | 100 |
| Head | 100 |
| Photography | 100 |
| Portrait | 100 |
| Clothing | 99.9 |
| Coat | 99.9 |
| Jacket | 99.8 |
| Person | 99.3 |
| Boy | 99.3 |
| Child | 99.3 |
| Male | 99.3 |
| Person | 98 |
| Male | 98 |
| Adult | 98 |
| Man | 98 |
| Person | 96.4 |
| Adult | 96.4 |
| Female | 96.4 |
| Woman | 96.4 |
| People | 93.9 |
| Person | 93.3 |
| Baby | 93.3 |
| Cap | 92.2 |
| Accessories | 87.3 |
| Bag | 87.3 |
| Handbag | 87.3 |
| Glove | 85.9 |
| Transportation | 74.8 |
| Vehicle | 74.8 |
| Car | 70.8 |
| Hat | 67.7 |
| Outdoors | 62.6 |
| Helmet | 57.6 |
| Worker | 56.9 |
| Body Part | 56.8 |
| Finger | 56.8 |
| Hand | 56.8 |
| Baseball Cap | 56.3 |
| Vest | 55.8 |
| Hat | 55.8 |
| Hardhat | 55.6 |

Clarifai

created on 2018-05-10

| Tag | Confidence |
| --- | --- |
| people | 99.9 |
| vehicle | 99.6 |
| group together | 99.2 |
| group | 99 |
| adult | 97.8 |
| two | 96.4 |
| child | 95.4 |
| man | 95.3 |
| wear | 94.7 |
| portrait | 94.5 |
| veil | 94.3 |
| transportation system | 94.1 |
| four | 93.5 |
| outfit | 93.2 |
| recreation | 93.1 |
| three | 93.1 |
| military | 93 |
| watercraft | 92.9 |
| five | 90.8 |
| woman | 90.3 |

Imagga

created on 2023-10-06

| Tag | Confidence |
| --- | --- |
| vehicle | 33.4 |
| bobsled | 30.4 |
| sled | 28.5 |
| people | 24 |
| man | 23.5 |
| conveyance | 19.4 |
| male | 19.1 |
| person | 18.7 |
| adult | 18.1 |
| car | 15.7 |
| outdoors | 15.7 |
| sitting | 15.4 |
| seat | 15.1 |
| happy | 15 |
| park | 14 |
| fun | 13.5 |
| sport | 12.9 |
| one | 12.7 |
| lifestyle | 12.3 |
| smile | 12.1 |
| portrait | 11.6 |
| helmet | 11.5 |
| fashion | 11.3 |
| laptop | 11.1 |
| leisure | 10 |
| attractive | 9.8 |
| driver | 9.7 |
| style | 9.6 |
| smiling | 9.4 |
| happiness | 9.4 |
| model | 9.3 |
| outdoor | 9.2 |
| couple | 8.7 |
| boy | 8.7 |
| face | 8.5 |
| sit | 8.5 |
| joy | 8.3 |
| dark | 8.3 |
| vintage | 8.3 |
| computer | 8.1 |
| stretcher | 8 |
| hair | 7.9 |
| business | 7.9 |
| love | 7.9 |
| tract | 7.9 |
| work | 7.8 |
| statue | 7.8 |
| day | 7.8 |
| driving | 7.7 |
| men | 7.7 |
| child | 7.7 |
| luxury | 7.7 |
| modern | 7.7 |
| pretty | 7.7 |
| war | 7.7 |
| youth | 7.7 |
| drive | 7.6 |
| human | 7.5 |
| clothing | 7.4 |
| support | 7.4 |
| art | 7.4 |
| cheerful | 7.3 |
| protection | 7.3 |
| relaxing | 7.3 |
| danger | 7.3 |
| weapon | 7.3 |
| looking | 7.2 |
| blond | 7.2 |
| recreation | 7.2 |
| motor vehicle | 7.1 |
| sofa | 7.1 |
| summer | 7.1 |
| travel | 7 |

Google

created on 2018-05-10

| Tag | Confidence |
| --- | --- |
| photograph | 95.6 |
| motor vehicle | 89.3 |
| black and white | 89.2 |
| monochrome photography | 71.1 |
| vehicle | 67.5 |
| car | 61.3 |
| vintage clothing | 59.8 |
| stock photography | 59.3 |
| crew | 58.9 |
| monochrome | 57.1 |
| soldier | 53.9 |
| troop | 52.6 |

Color Analysis

Face analysis

AWS Rekognition

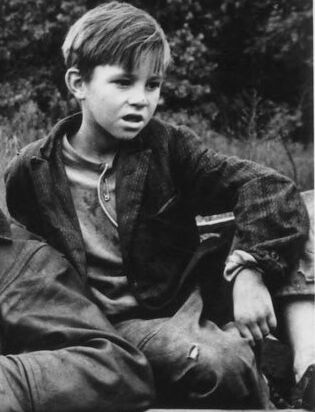

| Attribute | Value |
| --- | --- |
| Age | 4-12 |
| Gender | Male, 100% |
| Sad | 68.8% |
| Confused | 40.6% |
| Fear | 9.7% |
| Calm | 8.3% |
| Surprised | 8% |
| Angry | 3.8% |
| Disgusted | 3.3% |
| Happy | 0.6% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 42-50 |
| Gender | Male, 99.9% |
| Calm | 99.4% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Sad | 2.2% |
| Angry | 0.2% |
| Happy | 0% |
| Disgusted | 0% |
| Confused | 0% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 0-6 |
| Gender | Male, 99.8% |
| Calm | 99.9% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Sad | 2.1% |
| Confused | 0% |
| Angry | 0% |
| Disgusted | 0% |
| Happy | 0% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 30-40 |
| Gender | Female, 87.5% |
| Angry | 76.4% |
| Calm | 12.5% |
| Confused | 10.1% |
| Surprised | 6.4% |
| Fear | 5.9% |
| Sad | 2.2% |
| Disgusted | 0.1% |
| Happy | 0.1% |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 44 |
| Gender | Male |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 6 |
| Gender | Female |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 22 |
| Gender | Male |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very likely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very likely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very likely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 93.1% |
| people portraits | 5.5% |

Captions

Microsoft

created on 2018-05-10

| Caption | Confidence |
| --- | --- |
| a black and white photo of a man | 94.4% |
| an old black and white photo of a man | 93.7% |
| an old photo of a man | 93.6% |