Machine Generated Data

Tags

Amazon

created on 2023-10-06

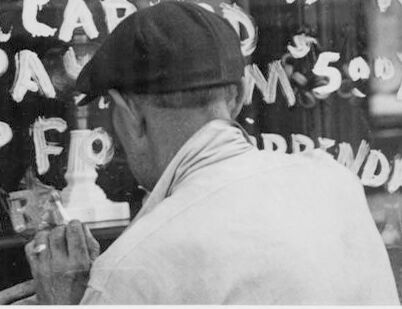

| Tag | Confidence |
| --- | --- |
| Baseball Cap | 99.9 |
| Cap | 99.9 |
| Clothing | 99.9 |
| Hat | 99.9 |
| Adult | 99.4 |
| Male | 99.4 |
| Man | 99.4 |
| Person | 99.4 |
| Adult | 99.3 |
| Male | 99.3 |
| Man | 99.3 |
| Person | 99.3 |
| Person | 94.4 |
| Face | 90.9 |
| Head | 90.9 |
| Text | 84 |
| Barbershop | 78.2 |
| Indoors | 78.2 |
| Shop | 65.1 |
| Photography | 62.6 |
| Portrait | 62.6 |
| Blackboard | 57.5 |
| T-Shirt | 57 |
| Hairdresser | 56.9 |
| Restaurant | 56.1 |
| Cafeteria | 55.3 |

Clarifai

created on 2018-05-11

Imagga

created on 2023-10-06

| Tag | Confidence |
| --- | --- |
| barbershop | 100 |
| shop | 100 |
| mercantile establishment | 79.7 |
| place of business | 53.2 |
| blackboard | 33.8 |
| man | 30.9 |
| male | 27.7 |
| establishment | 26.6 |
| people | 22.9 |
| education | 20.8 |
| person | 20 |
| business | 18.2 |
| school | 18 |
| student | 17.5 |
| sign | 17.3 |
| board | 16.8 |
| classroom | 16 |
| businessman | 15.9 |
| adult | 15.6 |
| room | 14 |
| book jacket | 13.9 |
| professional | 13.6 |
| portrait | 13.6 |
| work | 13.3 |
| modern | 13.3 |
| office | 13.2 |
| looking | 12.8 |
| teacher | 12.8 |
| old | 12.5 |
| class | 12.5 |
| college | 12.3 |
| text | 12.2 |
| casual | 11.9 |
| jacket | 11.8 |
| chalkboard | 11.8 |
| building | 11.6 |
| vintage | 11.6 |
| technology | 11.1 |
| black | 10.8 |
| job | 10.6 |
| university | 10.5 |
| men | 10.3 |
| study | 10.3 |
| indoor | 10 |
| happy | 10 |
| hand | 9.9 |
| to | 9.7 |
| exam | 9.6 |
| boy | 9.6 |
| writing | 9.4 |
| occupation | 9.2 |
| human | 9 |
| formula | 8.9 |
| indoors | 8.8 |
| home | 8.8 |
| teaching | 8.8 |
| architecture | 8.6 |
| finance | 8.4 |
| structure | 8.4 |
| wrapping | 8.2 |
| success | 8 |
| financial | 8 |
| computer | 8 |
| worker | 8 |
| design | 7.9 |
| knowledge | 7.7 |
| learn | 7.6 |
| smart | 7.5 |
| write | 7.5 |
| screen | 7.5 |
| manager | 7.4 |
| guy | 7.4 |
| letter | 7.3 |
| message | 7.3 |
| graphic | 7.3 |
| lifestyle | 7.2 |
| science | 7.1 |
| face | 7.1 |
| working | 7.1 |

Google

created on 2018-05-11

| Tag | Confidence |
| --- | --- |
| photograph | 95.4 |
| black and white | 88.6 |
| snapshot | 81.8 |
| poster | 78.3 |
| monochrome photography | 69.7 |
| monochrome | 68.4 |
| human behavior | 66.2 |
| font | 56.8 |
| advertising | 55.5 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 40-48 |
| Gender | Male, 98.6% |
| Calm | 72% |
| Sad | 50.3% |
| Surprised | 6.3% |
| Fear | 6% |
| Angry | 0.4% |
| Confused | 0.2% |
| Disgusted | 0.2% |
| Happy | 0.1% |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

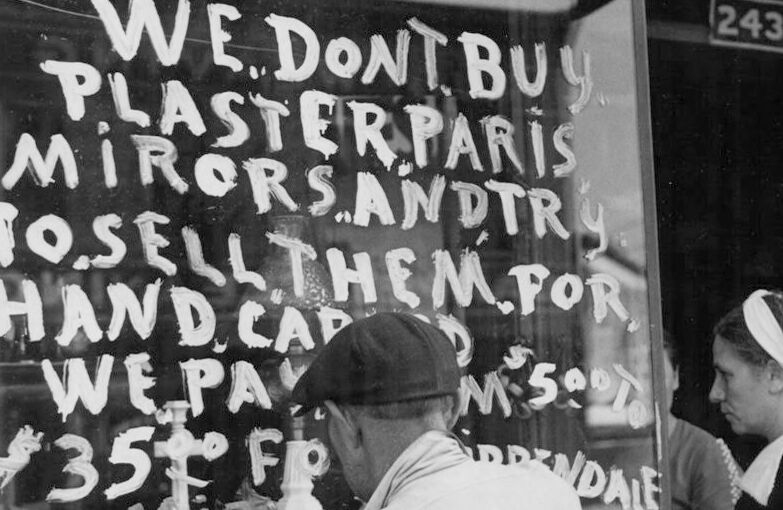

| Category | Confidence |
| --- | --- |
| paintings art | 80.6% |
| text visuals | 12.1% |
| interior objects | 3.5% |
| pets animals | 2.4% |

Captions

Microsoft

created on 2018-05-11

| Caption | Confidence |
| --- | --- |
| a black and white photo of a person | 81.9% |
| an old photo of a person | 81.7% |
| a group of people around each other | 72.7% |

Text analysis

Amazon

243

35

WEPA

PLASTERPARIS

HANDCAP

35 to Fp

Fp

TOSELLTHEM.POR

to

MiBA

the

NDALE

MIRORSANDTR

M-500

243

PLASTER PARIS

MIRORSANDTR

#8

35P

243

PLASTER

PARIS

MIRORSANDTR

#

8

35P