Machine Generated Data

Face analysis

Amazon

AWS Rekognition

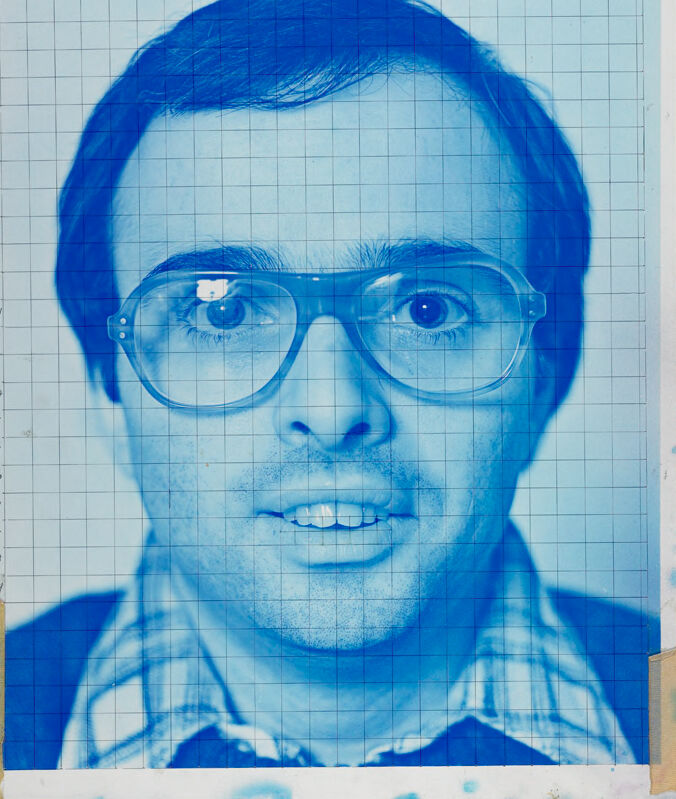

| Attribute | Value |
| --- | --- |
| Age | 27-44 |
| Gender | Male, 98.9% |
| Calm | 55.5% |
| Surprised | 7% |
| Happy | 9.4% |
| Confused | 10% |
| Disgusted | 2.1% |
| Angry | 3.4% |
| Sad | 12.5% |

Feature analysis

Amazon

| Feature | Confidence |
| --- | --- |
| Glasses | 99.6% |

Captions

Microsoft

Created on 2018-04-19

| Caption | Confidence |
| --- | --- |
| a man wearing glasses posing for the camera | 81.1% |
| a man wearing glasses posing for a photo | 73.7% |
| a man wearing glasses | 73.6% |

OpenAI GPT

Created by gpt-4 on 2025-03-08

The image appears to be an artistic collage or alteration of what looks like a traditional photograph, with several elements brought together in a layered composition. A square of vibrant blue obscures a central part of the image, leaving visible only the silhouettes of a person's hair, shoulders, and an upper garment with a collar. The photographic element is overlaid on a piece of paper with grid lines, suggesting a systematic or technical backdrop. The left edge of the photograph includes handwritten notations that resemble measurements or calculations, while the right edge shows paint smudges, enhancing the creative feel of the piece. Adhesive tape secures the photograph to the backing, further emphasizing the physical and crafted nature of the image. Additionally, the composition features two signatures—one at the top on a worn and yellowed section of paper, and one at the bottom over a more transparent and smeared section, likely done with a fluid medium like ink or watercolor. Overall, the image is an evocative blend of photography, graphic elements, and tactile materials, suggesting an exploration of identity, memory, or representation.

Created by gpt-4o-2024-05-13 on 2025-03-08

The image displays an artistic rendering that incorporates an overlay of a grid on paper, accompanied by a photograph. The grid is neatly aligned with rows and columns labeled by numbers, while blue tones dominate the photographic layer showing portions of hair and a collared shirt. The borders of the paper exhibit some artistic splashes and stains in shades of blue, green, and pink. Handwritten words "Cyan" and "Math" appear on the top and bottom of the image respectively, suggesting the intersects of discipline and color within the artwork. Some areas are secured with pieces of tape.

Anthropic Claude

Created by claude-3-haiku-20240307 on 2024-12-31

The image appears to be a portrait of a man with blue-tinted skin, wearing glasses. The portrait is drawn using a grid pattern, giving it a technical, graphical quality. The man has a thoughtful, serious expression on his face. The background of the image has a textured, collage-like appearance, with stains and splashes of color that add to the overall artistic style of the work.

Created by claude-3-5-sonnet-20241022 on 2024-12-31

This appears to be a vintage portrait photograph that has been printed or processed in a striking monochromatic blue tone. The image is overlaid on graph paper with numbered grid lines. The photograph shows someone wearing thick-rimmed glasses and what appears to be a collared shirt or jacket. The image has a somewhat informal, casual quality typical of ID photos or yearbook portraits from perhaps the 1970s or 1980s. There are some watercolor-like blue and pink splatters around the edges of the paper, and there appears to be some text or writing at the top and bottom of the sheet. The overall composition has an interesting artistic quality due to the blue toning and the grid background.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-03-15

The image is a blue-toned photograph of a man's face, overlaid on a grid paper background. The man has short dark hair and is wearing glasses with thick black frames. He is looking directly at the camera with a neutral expression. The photograph is taped to a piece of paper that has a grid pattern drawn on it, with numbers along the top and left edges. The paper is slightly yellowed and has some stains and tears around the edges. There are also some handwritten notes and scribbles on the paper, including the word "Mark" in cursive at the top and "Cyan" in print at the bottom. The overall effect of the image is one of nostalgia and retro aesthetic, evoking a sense of a bygone era. The use of grid paper and the handwritten notes adds a sense of DIY or handmade quality to the image, while the blue tone gives it a cool and calming feel.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-03-15

This image is a cyanotype print of a man's face, overlaid on a grid paper background. The man has short dark hair and wears large-framed glasses and a plaid shirt. He appears to be looking directly at the camera. The cyanotype print is in a blue hue, with the man's features and the grid paper visible underneath. The edges of the paper are worn and torn, with tape holding it together in some areas. There are also some watercolor-like marks on the right side of the paper. At the top of the image, the word "Mark" is written in black ink, while at the bottom, the word "Cyan" is written in the same handwriting. The overall effect is one of a vintage or antique photograph, with the cyanotype print giving it a unique and nostalgic feel.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-01-13

The image features a portrait of a man, likely a mugshot, printed on a textured, light-colored paper. The portrait is framed within a grid-like pattern, which gives it a structured and methodical appearance. The man in the portrait has short, dark hair and is wearing glasses. His facial expression is neutral, and he is looking directly at the camera. The background of the portrait is blue, and there are some faint blue and white marks around the edges, possibly from the printing process. The name "Mark" is written in a bold, stylized font at the top of the image, and there is a signature or stamp at the bottom, although it is partially obscured.

Created by amazon.nova-pro-v1:0 on 2025-01-13

The image is a photograph of a man. He is smiling and wearing glasses. The man's face is divided into a grid of squares. Each square contains a number, ranging from 1 to 15. The man's face is blue, and the background is white. The man's hair is also blue, and he is wearing a blue shirt. The photograph is titled "Mark," and it is signed by the artist, Cyan.