Machine Generated Data

Face analysis

Amazon

AWS Rekognition

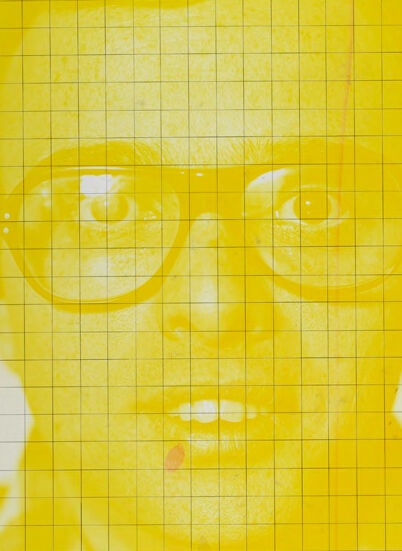

| Attribute | Value |
|---|---|
| Age | 38-57 |
| Gender | Female, 91.6% |
| Happy | 3.7% |
| Disgusted | 0.6% |
| Sad | 37.9% |
| Angry | 3.2% |
| Calm | 38% |
| Surprised | 3.6% |
| Confused | 13.1% |

Captions

Microsoft

Created on 2018-04-19

| Caption | Confidence |
|---|---|
| a person standing in front of a mirror posing for the camera | 36.4% |
| a person standing in front of a mirror | 33.9% |
| a person in a yellow shirt | 33.8% |

Clarifai

Created by general-english-image-caption-blip on 2025-05-11

| Caption | Confidence |
|---|---|
| a photograph of a man with glasses and a yellow shirt | -100% |

OpenAI GPT

Created by gpt-4 on 2025-03-06

The image shows a piece of paper with a yellow silhouette over a grid background. The grid is composed of small squares, which cover the entirety of the paper except for the area filled in with yellow. There is a small red mark on the right side of the paper, and near the bottom right corner, there is a signature in cursive that reads "galln" or a word of similar appearance. The paper itself appears aged, with discolored edges and corners that are frayed and slightly damaged, implying that it has some history or has been handled over time.

Created by gpt-4o-2024-05-13 on 2025-03-06

This image features a grid overlay on a piece of paper with a yellow color wash applied to the periphery. The background grid consists of rectangular cells, likely for precision in design or scaling purposes. Beneath the gridded area near the bottom of the page, the word "yellow" is handwritten in a cursive style. The corners of the paper appear slightly worn, indicating some age or usage.

Anthropic Claude

Created by claude-3-haiku-20240307 on 2024-12-31

The image appears to be a hand-drawn portrait of a person with a yellow, grid-like background. The face is rendered in bright yellow with noticeable facial features such as eyes, nose, and mouth. The portrait has a stylized, illustrative quality to it.

Created by claude-3-5-sonnet-20241022 on 2024-12-31

This is an artistic portrait rendered entirely in yellow on a grid paper background. The image appears to be a head and shoulders shot of someone wearing glasses. The technique used creates a monochromatic effect where different intensities of yellow create the features and contours of the face. The word "yellow" is written in script at the bottom of the image. The paper appears aged with some discoloration around the edges. The grid lines visible through the yellow image suggest this might be part of a larger artistic study or technical drawing exercise.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-03-17

The image is a yellow-tinted photograph of a person's head and shoulders, with a grid pattern overlaid on top. The photograph is taken from the chest up, showing the person's face, neck, and shoulders. The person has short hair and is wearing glasses. They are looking directly at the camera with a neutral expression.

The photograph is printed on a piece of paper that has a grid pattern overlaid on top of it. The grid is made up of small squares, and it covers the entire surface of the photograph. The grid is yellow, which gives the image a bright and cheerful tone.

At the bottom of the image, there is a handwritten note that says "yellow" in cursive script. This suggests that the image is intended to represent the color yellow, or perhaps to evoke a sense of warmth and optimism.

Overall, the image is a simple yet striking representation of a person's face and shoulders, overlaid with a grid pattern and a bright yellow tone. It could be used as a design element in a variety of contexts, such as in graphic design, advertising, or art.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-03-17

The image is a portrait of a man with glasses, rendered in yellow tones and overlaid with a grid pattern. The subject's face is the central focus, with the grid lines subtly integrated into the artwork.

Key Features:

- Subject: A man wearing glasses, depicted in a yellow tone.

- Grid Pattern: A grid overlaying the portrait, adding texture and visual interest.

- Color Scheme: Predominantly yellow, with subtle variations in shading and tone.

- Background: A light-colored background, possibly paper or canvas, which provides contrast to the yellow portrait.

- Signature: A handwritten signature at the bottom of the image, although the name is not clearly legible.

- Overall Effect: The combination of the yellow portrait, grid pattern, and light background creates a unique and visually striking image.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-02-25

The image shows a yellow-colored portrait of a man with glasses. The portrait is in a square shape with a grid-like pattern, and the man's face is divided into smaller squares. The portrait is placed on a white background, and it seems to be a photograph. The man's face is painted in yellow, and the grid-like pattern is also in yellow. The portrait has a vintage look, and it seems to be an old photograph.

Created by amazon.nova-pro-v1:0 on 2025-02-25

The image is a portrait of a person with a yellow-tinted skin tone. The person is wearing glasses and has short hair. The person's face is slightly tilted to the left. The person's eyes are wide open, and the person's mouth is slightly open. The person's face is surrounded by a grid-like pattern. The person's face is in the center of the image. The person's face is the only object in the image.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-15

Here's a description of the image:

Overall Impression:

The image is a pop art-style portrait in a stark, bold yellow color. The subject is a man wearing glasses, rendered with a grid overlay that gives it a screen-printed or stencil-like quality. The edges of the image are distressed and aged.

Specific Details:

- Subject: The central figure is a man with a relatively round face, wearing glasses. He is looking directly at the viewer. His expression is somewhat neutral, with a slightly open mouth.

- Color and Style: The entire portrait is dominated by a single, vibrant yellow. The use of a grid overlay adds a geometric and graphic element, reminiscent of screen printing or photographic reproduction techniques.

- Grid: A fine grid of black lines covers the face. This suggests a deliberate breakdown of the image, perhaps for reproduction or as a stylistic choice.

- Text and Markings: At the bottom of the image, the word "yellow" is written in cursive. There are also a few small splotches of pink paint.

- Paper: The artwork is printed on what appears to be aged or slightly worn paper, with some visible damage and discoloration around the edges.

Artistic Interpretation:

The image has the aesthetic of pop art, probably made in the 1970's or 1980's. The yellow color, the use of a grid, and the simplicity of the portrait all combine to create a bold and impactful visual statement. The distress marks could be intentional to achieve an old look.

Created by gemini-2.0-flash on 2025-05-15

The artwork is a print on paper featuring a portrait of a person overlaid with a grid. The portrait and grid are rendered in a bright yellow color, giving the piece a monochromatic appearance. The subject is wearing glasses with thick, round frames, and their expression is neutral or slightly surprised. The grid consists of evenly spaced lines creating square cells that cover the entire portrait.

The paper itself appears to be slightly aged, with some yellowing and minor damage around the edges. The word "yellow" is handwritten at the bottom of the print in cursive, further emphasizing the color scheme. The overall composition suggests a stylized or experimental approach to portraiture, possibly exploring themes of identity, perception, or the relationship between image and structure.