Machine Generated Data

Feature analysis

Amazon

| Feature | Confidence |
| --- | --- |
| Person | 67.9% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 74.8% |
| pets animals | 19.9% |
| streetview architecture | 4.2% |

Captions

OpenAI GPT

Created by gpt-4 on 2024-12-13

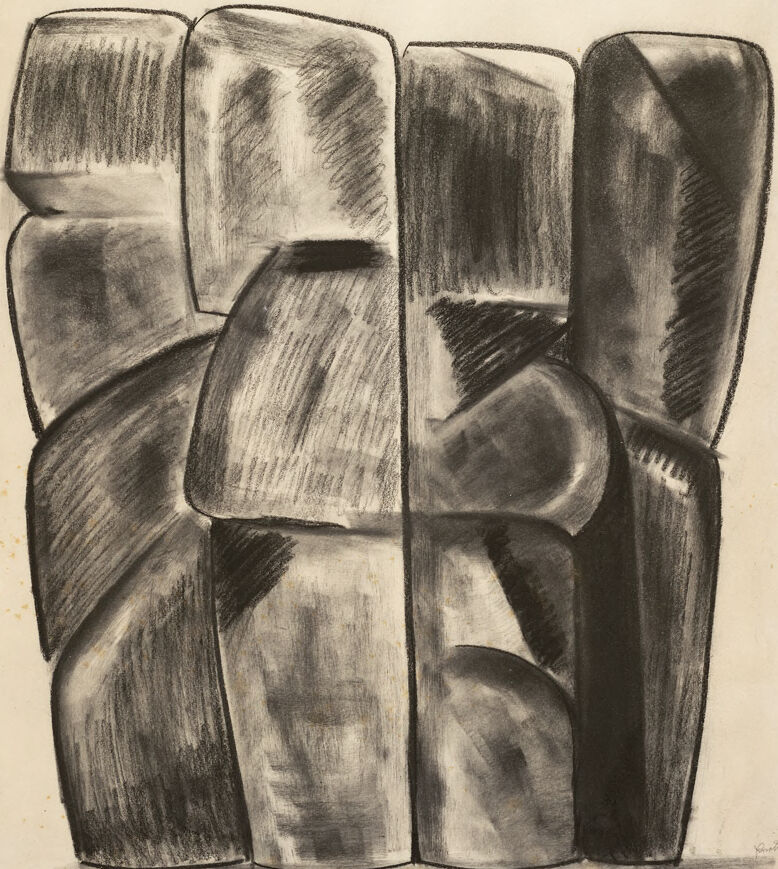

The image depicts an artwork with a series of vertical, rectangular forms that appear to be arranged closely together. The forms exhibit variations in shading, suggesting three-dimensionality and depth. The shading technique involves a range of tonal gradations, giving the shapes a sense of volume. Some of the forms have curved indentations or protrusions that interrupt their rectangular geometry, further contributing to the overall texture and composition. The background is mostly plain, allowing the viewer to focus on the forms which are the central subject of the piece. The artwork exhibits qualities typical of charcoal drawing, such as soft edges and rich blacks. The artwork has a kind of abstract or minimalist aesthetic, potentially leaving its interpretation open to the viewer. There is no clear indication of whether the shapes represent something specific or are purely geometric forms arranged for compositional effect. The signature of the artist is visible in the bottom right corner.

Created by gpt-4o-2024-05-13 on 2024-12-13

This image features an abstract charcoal drawing. The artwork appears to consist of various rounded geometric shapes, densely packed together to create a complex form. The elements have a three-dimensional appearance due to the use of shading and gradation, which gives depth to the image. The overall composition can suggest a variety of interpretations, often left to the viewer's imagination. The background is plain, which helps to bring attention to the intricate and abstract forms in the foreground.

Anthropic Claude

Created by claude-3-haiku-20240307 on 2024-12-13

The image appears to be a charcoal drawing of several stacked books or book-like shapes. The shapes are rendered in a semi-abstract, geometric style, with bold lines and shadows creating a sense of depth and volume. The books or volumes are depicted in a variety of angles and perspectives, giving the overall composition a dynamic, three-dimensional quality. The drawing has a moody, atmospheric quality, with the use of charcoal creating a range of tones from deep blacks to softer grays.

Created by claude-3-5-sonnet-20241022 on 2024-12-13

This appears to be an abstract charcoal or graphite drawing consisting of four vertical, column-like forms standing side by side. The forms are rendered with strong contrasts between light and dark areas, creating a sense of volume and dimension. Each column has curved, organic shapes within it, and they seem to be slightly different in width and height. The shading technique uses bold, directional strokes that emphasize the forms' three-dimensional quality. The overall composition has a monumental, architectural feel despite its abstract nature. The drawing is executed on what appears to be light-colored paper, and the artistic style suggests it might be from the modernist period.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-12-13

The image presents a charcoal drawing of a group of abstracted, rectangular shapes in varying sizes and orientations. The shapes are stacked and overlapping, creating a sense of depth and dimensionality. The drawing is rendered in a range of dark and light grays, with the darkest areas providing contrast to the lighter ones. The background of the image is a light beige color, which provides a subtle contrast to the darker tones of the charcoal drawing. The overall effect is one of simplicity and elegance, with the abstract forms creating a sense of visual interest without being overly complex or busy. The image appears to be a study or sketch, rather than a finished piece of art. The rough texture of the charcoal and the loose, expressive lines suggest a spontaneous and gestural approach to drawing. The artist's use of negative space and the careful placement of the shapes create a sense of balance and harmony, despite the overall simplicity of the composition. Overall, the image is a thoughtful and well-crafted exploration of form and texture, using the simple yet expressive medium of charcoal to create a visually appealing and engaging work of art.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-13

The image depicts a charcoal drawing of a stone sculpture, showcasing a unique blend of abstract and realistic elements. The sculpture is composed of various shapes and forms, with some resembling stones or rocks, while others appear more abstract and geometric.

**Key Features:**

* **Charcoal Drawing:** The image is created using charcoal, which adds a sense of texture and depth to the sculpture.
* **Abstract Forms:** The sculpture features a mix of abstract and realistic elements, with some parts resembling stones or rocks, while others are more geometric and abstract.
* **Shading and Lighting:** The use of shading and lighting creates a sense of volume and dimensionality, giving the sculpture a three-dimensional appearance.
* **Background:** The background of the image is a light beige color, which helps to highlight the dark tones of the charcoal drawing.

**Overall Impression:** The image presents a thought-provoking and visually striking representation of a stone sculpture. The combination of abstract and realistic elements, along with the effective use of shading and lighting, creates a sense of depth and dimensionality. The image invites the viewer to explore the intricate details of the sculpture and appreciate its unique beauty.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-02-28

The image depicts a monochromatic drawing of a collection of abstract, geometric forms. These forms are primarily composed of cubes and cylinders, arranged in a way that suggests a three-dimensional structure. The drawing is done in charcoal or graphite, with varying shades of gray to create depth and texture. The shadows and highlights are meticulously rendered, giving the impression of light and volume. The forms are placed in a staggered, overlapping arrangement, creating a sense of movement and dynamism. The background is a plain, light-colored surface, which contrasts with the darker tones of the forms, drawing attention to the subject matter. The overall composition is balanced, with a sense of symmetry and rhythm, despite the abstract nature of the forms.

Created by amazon.nova-pro-v1:0 on 2025-02-28

The image is a black-and-white drawing of a group of four large, rectangular objects, which appear to be made of stone or concrete. The objects are arranged in a row, with each one slightly overlapping the next. The objects have a rough, textured surface, with visible cracks and crevices. The drawing is done in a style that emphasizes the three-dimensionality of the objects, with shading and highlights used to create a sense of depth and volume.