Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 23-37 |
| Gender | Male, 75.5% |
| Surprised | 0% |
| Sad | 37.1% |
| Fear | 0% |
| Disgusted | 0% |
| Calm | 62.2% |
| Angry | 0.1% |
| Confused | 0.1% |
| Happy | 0.4% |

Feature analysis

Amazon

| Label | Confidence |
| --- | --- |
| Person | 98.8% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 99.1% |

Captions

Microsoft

Created on 2020-04-30

| Caption | Confidence |
| --- | --- |
| a vintage photo of a woman | 89% |
| a vintage photo of a girl | 78.9% |
| a vintage photo of a woman holding a book | 55.5% |

Anthropic Claude

Created by claude-3-haiku-20240307 on 2025-03-04

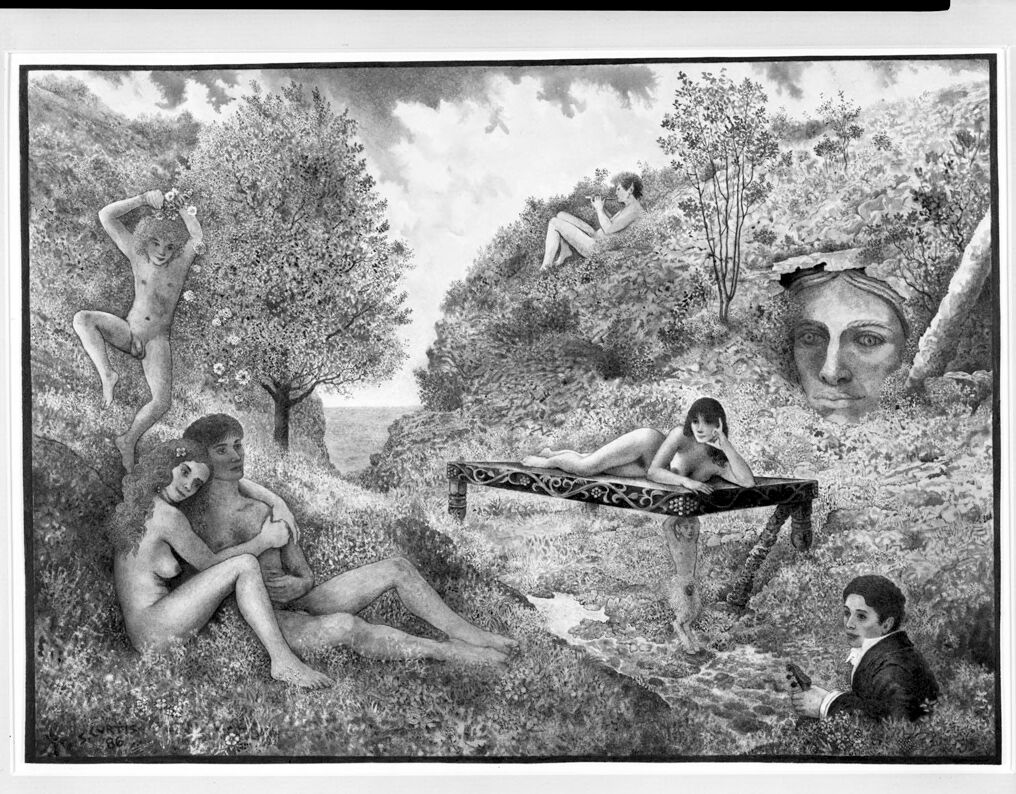

The image depicts a surreal landscape with various nude human figures in natural settings. In the foreground, there are several nude figures reclining on the grass. In the background, there are additional nude figures and a large sculptural element resembling a human face. The overall scene has a dreamlike, fantastical quality, with the figures and landscape blending together in an imaginative, artistic composition.

Created by claude-3-5-sonnet-20241022 on 2025-03-04

This appears to be a black and white artistic composition or painting depicting a pastoral or mythological scene. The artwork shows several figures in a lush natural setting with trees and foliage. There's a figure reclining on what appears to be an ornate platform or bed, while others are positioned throughout the landscape. A large face appears carved or emerging from the rocky landscape on the right side of the image. One fully clothed figure in dark attire sits in the lower right corner, contrasting with the other unclothed figures in the scene. The overall composition has a dreamy, classical quality reminiscent of Renaissance or Romantic period artwork.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-03-12

The image is a black and white drawing of a landscape with several nude figures. The drawing depicts a serene and idyllic scene, with lush greenery and trees surrounding the figures. In the foreground, a man and woman are seated together, embracing each other. To the left, a nude figure is standing, while another figure is reclining on a table in the center. In the background, a large face is visible, adding a sense of mystery to the scene.

The overall atmosphere of the image is one of tranquility and intimacy, with the figures appearing relaxed and content in their surroundings. The use of black and white creates a sense of simplicity and elegance, allowing the viewer to focus on the beauty of the figures and the landscape.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-03-12

This image is a black-and-white drawing of a landscape with several nude figures, as well as a few clothed ones. The scene is set in a natural environment, with trees, bushes, and grass covering the ground. In the foreground, there are two nude women sitting on the grass, one of whom is being embraced by a nude man. To their right, a nude woman is reclining on a bench, while another nude woman is climbing a tree to their left. A clothed man is sitting in the grass at the bottom right of the image, and a large face is visible in the foliage behind him. In the background, there is a nude man sitting on a hillside, surrounded by trees and bushes. The overall atmosphere of the image is one of serenity and tranquility, with the figures appearing to be at peace with their surroundings.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-01-12

This image is a black-and-white illustration of a scene in a forest with a river. The scene features a man and a woman sitting on the ground, embracing each other. The man is wearing a suit and holding a gun, while the woman is wearing a dress. In the background, there are several other people, including a man and a woman sitting on a rock, a woman lying on a table, and a man sitting on a tree.

Created by amazon.nova-pro-v1:0 on 2025-01-12

The image is a black-and-white painting with a white border. It depicts a scene with several people in a forest. On the left, a man and a woman are sitting on the grass, embracing each other. Above them, a man is climbing a tree, holding flowers. In the middle, a woman is lying on a table, with another woman sitting next to her. On the right, a man is sitting on the grass, holding a camera.