Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 47-65 |
| Gender | Male, 52% |
| Surprised | 1.2% |
| Disgusted | 2.1% |
| Happy | 15.8% |
| Angry | 9.7% |
| Confused | 2.5% |
| Calm | 27.5% |
| Fear | 4.8% |
| Sad | 36.4% |

Feature analysis

Amazon

| Label | Confidence |
| --- | --- |
| Person | 86.3% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 79.4% |
| nature landscape | 18.8% |
| pets animals | 1.4% |

Captions

Microsoft

created on 2020-04-30

| Caption | Confidence |
| --- | --- |
| a person holding a book | 38.2% |
| a close up of a person holding a book | 32.5% |
| close up of a person holding a book | 28.6% |

OpenAI GPT

Created by gpt-4 on 2025-03-06

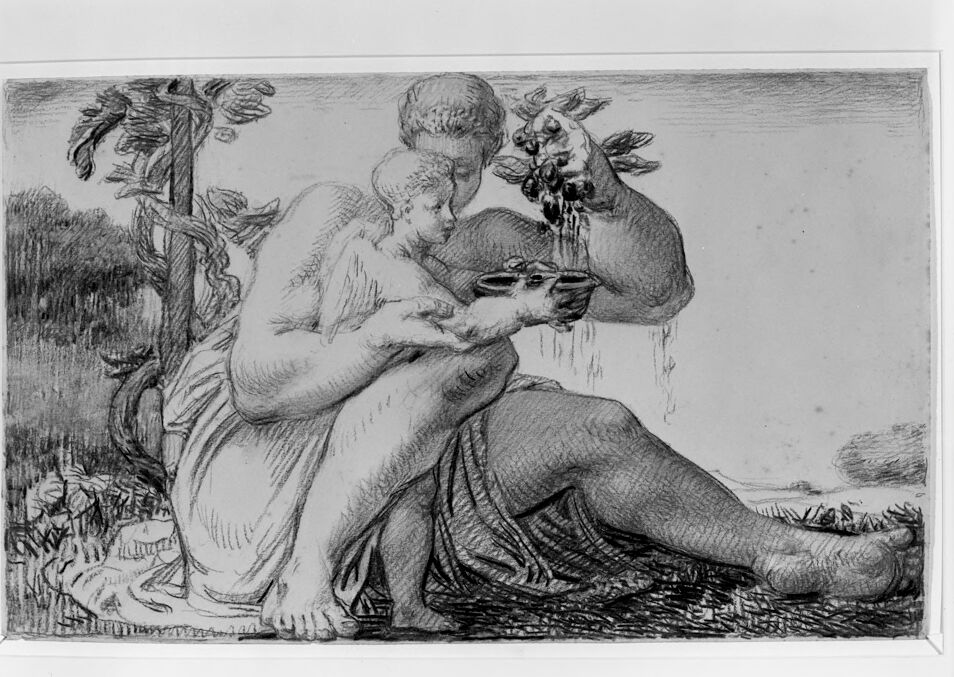

This is an etching or pen-and-ink drawing depicting a classical scene. In the foreground, there is a seated figure with draped clothing partially exposed, suggesting a relaxed or sensual pose. The hand is positioned as though holding something up to the mouth. In the background, there is a standing figure next to the seated one, also draped in flowing garments. This figure appears to be facing away and is interacting with a large urn or vessel. The overall setting seems to have plant life, potentially indicating an outdoor scene. The artwork is rich in fine details and shading, conveying texture and depth.

Created by gpt-4o-2024-05-13 on 2025-03-06

The image is a monochromatic drawing of a person in a seated position. The individual is holding a bunch of grapes in one hand and a cup in the other. The person is adorned in draped fabric that covers the lower half of their body. Surrounding the figure, there are elements of nature such as plants and a tree trunk on the left side. The drawing features a combination of detailed textures, including the muscles of the body, the folds of the fabric, and the foliage.

Anthropic Claude

Created by claude-3-haiku-20240307 on 2025-03-04

The image appears to be a black and white pencil or charcoal drawing depicting a mythological or allegorical scene. It shows a figure, possibly a female, seated on the ground in a flowing, draped garment. Another figure, likely a male, leans over the seated figure, holding what appears to be a bowl or vessel. The overall scene has a somber, melancholic tone, with the figures set against a background of nature elements such as trees and foliage.

Created by claude-3-opus-20240229 on 2025-03-04

The image is a black and white drawing or etching depicting two nude figures, a man and a woman, sitting close together in an embrace. The man appears to be leaning against a tree while holding the woman, who is sitting in his lap with her head resting on his shoulder. The drawing has a rough, sketchy quality with many cross-hatched lines used for shading and to define the forms and musculature of the figures. The background includes some shrubbery or foliage, suggesting an outdoor or natural setting.

Created by claude-3-5-sonnet-20241022 on 2025-03-04

This appears to be a classical sketch or drawing, likely from the Renaissance or Baroque period. It shows two figures in a pastoral setting, reclining on the ground. The drawing is done in a soft, sketchy style with careful attention to the draping of fabric and the human form. In the background, there are suggestions of trees and landscape elements. The composition has a romantic, mythological quality typical of artwork from this period. The technique appears to be pencil or charcoal on paper, with delicate shading and line work that creates depth and dimension in the scene.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-03-13

The image depicts a pencil drawing of a man and a woman in a pastoral setting. The man is reclining on the ground, with his left leg bent at the knee and his right leg extended. He is wearing a loincloth and has a bowl in his left hand. The woman is kneeling beside him, holding a small bowl in her left hand and reaching out to touch the man's right hand with her right hand. She is wearing a long dress and has a small child on her lap. The child is looking up at the woman. In the background, there are trees and bushes, as well as a small animal, possibly a goat or sheep, standing on the ground. The overall atmosphere of the drawing is one of tranquility and intimacy, suggesting a peaceful and serene scene.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-03-13

This image is a black and white drawing of a man and a child. The man is sitting on the ground, with his legs stretched out to the right. He is wearing a toga and has short hair. The child is sitting on his lap, facing him. The man is holding a bowl in his hands, and the child is reaching into it. The background of the image is a landscape with trees and bushes. The overall atmosphere of the image is one of serenity and intimacy, as the man and child seem to be enjoying each other's company in a peaceful setting.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-01-13

The image is a black-and-white drawing of a man and a child sitting on the ground. The man is holding the child in his lap and is feeding him grapes from a bowl. The child is looking up at the man, and the man is looking down at the child. The man is wearing a long-sleeved shirt, and the child is wearing a short-sleeved shirt. The drawing is framed with a white border.

Created by amazon.nova-pro-v1:0 on 2025-01-13

The image features a black-and-white drawing of a man and a child sitting on the ground. The man is holding the child on his lap, and both are looking at something in front of them. The man is holding a bowl in his right hand, and his left hand is holding a plant. The child is holding a plant in his right hand. The background is blurry, and it seems to be a field.