Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 22-34 |
| Gender | Female, 86% |
| Disgusted | 0.3% |
| Calm | 19.5% |
| Surprised | 0.3% |
| Angry | 0.8% |
| Happy | 1% |
| Sad | 77% |
| Confused | 0.7% |
| Fear | 0.6% |

Feature analysis

Amazon

| Person | 98.2% | |

Categories

Imagga

| paintings art | 100% | |

Captions

Microsoft

Created by unknown on 2020-04-25

| Caption | Confidence |
| --- | --- |
| a drawing of a map | 66.5% |
| a close up of a map | 66.4% |
| drawing of a map | 58.1% |

Clarifai

Created by general-english-image-caption-blip on 2025-05-12

| a photograph of a drawing of a woman in a dress and a man in a suit | -100% | |

OpenAI GPT

Created by gpt-4 on 2024-12-14

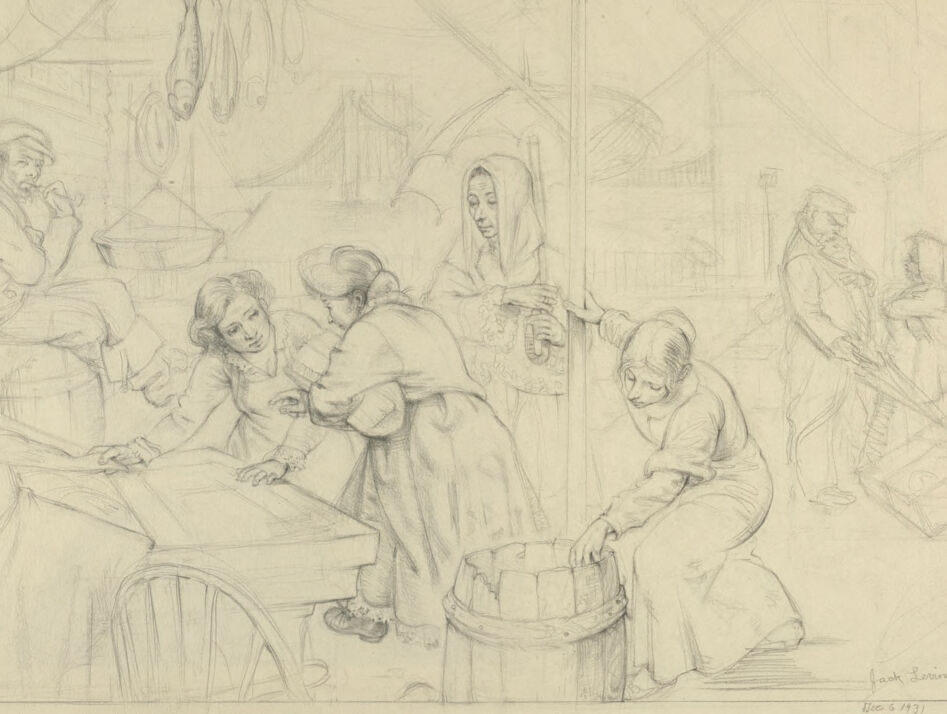

This is a pencil sketch depicting an interior scene from what appears to be a bygone era. The sketch is intricate with various figures engaged in different activities, contributing to a narrative of daily life. On the left side, you can see a person engaged in an activity at a small table, next to hanging items that could be foodstuffs or utensils. In the foreground, a figure is intent on a task that involves a barrel or bucket.

The right portion shows an extension of the room or a different space altogether, viewed through an open door or window, with a person walking away from the viewer, implying movement and life beyond the immediate scene. Another figure closer to the viewer seems to be performing a domestic task, such as scrubbing or cleaning; the gesture suggests they are intently working on the object in front of them.

The entire scene exudes a sense of domesticity and gives a glimpse into the everyday lives of the characters within the sketch. The architecture and clothing styles hint at an older historical period, likely anywhere from the 17th to early 20th century, though it's difficult to be precise without further context. The artist's signature and a date are visible on the bottom right of the image, indicating that this is likely a finished piece meant for presentation or archiving.

Created by gpt-4o-2024-05-13 on 2024-12-14

This image is a detailed pencil drawing depicting a scene of daily life. Several figures are engaged in various activities, all set within what appears to be an urban setting.

- On the left side, a figure is sitting on a barrel, appearing relaxed.

- In the middle ground, three figures are interacting around a table.

- To the right, a figure is bending over, working with a large barrel or bucket.

- In the background, there are architectural elements like bridges and buildings, adding depth and context to the setting.

- To the far right, there are two additional figures, one of which is pushing a cart or wheelbarrow.

The sketch is intricately detailed, with careful attention to the figures' postures and the various objects surrounding them.

Anthropic Claude

Created by claude-3-haiku-20240307 on 2024-12-14

This image appears to be a pencil sketch or drawing depicting a domestic scene from what looks like the late 19th or early 20th century. The image shows several individuals engaged in various activities in what seems to be a home setting. There are people sitting around a table, with one figure appearing to be assisting or tending to another. There are also other figures in the background, suggesting a busy and active household. The overall style and composition of the sketch conveys a sense of daily life and routine activities taking place within this domestic environment.

Created by claude-3-5-sonnet-20241022 on 2024-12-14

This is a pencil sketch dated 1839 depicting a market or shop scene. The drawing shows several figures engaged in various activities. In the foreground, there appears to be a counter or cart where some business transaction is taking place. To the right, someone is working with what appears to be a barrel. The setting seems to be indoors, with architectural elements like beams or supports visible in the background. Hanging objects, possibly meats or other goods, can be seen in the upper left portion of the drawing. The style is realistic and detailed, characteristic of 19th-century observational sketching. The composition shows both the commercial activity and the working environment of what might be a shop or market stall of the period.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-12-14

The image is a pencil drawing of a group of people in a room, possibly a tavern or inn. The drawing is signed "Jack Levine" in the bottom-right corner and dated "Dec. 5, 1931."

The scene depicts a group of people gathered around a table, with some sitting and others standing. There are also several people in the background, engaged in various activities. The atmosphere appears to be lively and social, with the people interacting with each other and enjoying each other's company.

The drawing is rendered in a detailed and realistic style, with intricate lines and shading that give the scene a sense of depth and texture. The artist has captured the subtleties of human expression and movement, conveying a sense of warmth and camaraderie among the group.

Overall, the image presents a charming and intimate portrayal of a social gathering, inviting the viewer to step into the scene and experience the joy and connection of the moment.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-14

The image depicts a pencil drawing of a group of people engaged in various activities, set against the backdrop of a market or village scene. The central figure is a woman, dressed in a long robe and headscarf, who appears to be holding a child. She is surrounded by other individuals, each involved in their own tasks.

To her left, a woman is seated at a table, while another woman is shown bending over a barrel. In the background, several men can be seen, some of whom are standing and others who are sitting. The overall atmosphere of the scene suggests a sense of community and daily life, with the individuals going about their routines in a peaceful and serene environment.

The drawing itself is rendered in a detailed and realistic style, with careful attention paid to the textures and folds of the clothing, as well as the facial expressions and body language of the figures. The use of pencil allows for a range of tonal values, from the lightest highlights to the darkest shadows, which adds depth and dimensionality to the image.

Overall, the image presents a charming and intimate portrayal of everyday life, capturing the essence of a moment in time with sensitivity and nuance.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-01-13

The image is a pencil drawing by Jack Levine, dated December 6, 1973. It depicts a scene inside a market or a shop, with several people engaged in various activities. In the foreground, a woman is seated on a chair, holding a piece of paper, possibly reading or writing. Another woman stands behind her, holding a basket, and a third woman is bending over, seemingly picking up something from the floor. In the background, there is a man sitting on a bench, smoking a pipe, and another man standing near a table with a scale and a hanging fish. The drawing captures a moment of everyday life, with people going about their tasks in a busy market setting.

Created by amazon.nova-pro-v1:0 on 2025-01-13

The image is a pencil drawing of a group of people in a room. The people are depicted in a simple style, with minimal details. The drawing is in black and white, and it appears to be a sketch. The people in the drawing are engaged in various activities. Some are standing, while others are sitting. There is a barrel in the foreground, and a table with a chair is visible in the background. The drawing captures a moment in time, with the people appearing to be focused on their tasks. The overall impression is one of simplicity and authenticity, with the focus on the people and their interactions rather than on elaborate details or decorations.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-13

This is a pencil sketch of a bustling marketplace scene. Several figures are depicted, seemingly engaged in various activities.

In the upper left, a man sits atop a barrel, seemingly observing the scene. Hanging above are what appear to be fish, suggesting a seafood market. A woman, partially visible, seems to be leaning against a pillar. There is a second woman wearing a head covering standing beside the pillar and she seems to be holding on to it. A third woman is inspecting a rectangular item. Beside her, another woman is leaning over a wooden barrel, her arm inside. In the background, further figures are suggested, possibly attending to other market activities. A distant bridge is faintly sketched in the background.

The artwork has a classic feel, suggesting an historical or traditional setting. The details are still in the development stage but it appears that this artwork has a level of storytelling that draws the viewer into the scene.

Created by gemini-2.0-flash on 2025-05-13

Here is a description of the image:

This is a pencil sketch depicting a scene with multiple figures in what appears to be an indoor market or shop setting. The drawing is rendered with light and delicate strokes, conveying a sense of detail despite the unfinished nature of the sketch.

In the foreground on the left, a man is sitting on a barrel, and the woman next to him and another woman are looking down at a piece of what appears to be furniture. To the right, a woman in a head covering is holding an umbrella. A woman in front of her is reaching into a barrel.

In the background, there are hints of hanging fish and other market items. A cityscape that could be the outline of a bridge can be made out in the background. In the right background there are two more figures, a man in a tall hat and a woman with her arms folded.

The style of the figures' clothing suggests a historical or period setting. The overall composition is divided into sections by what could be pillars or dividers, implying the structure of a market or indoor space.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-02

The image is a detailed pencil sketch that appears to depict a scene from a historical or possibly biblical setting. Here is a detailed description:

Setting: The scene takes place indoors, likely within a rustic or modest dwelling. There are several figures engaged in various activities, suggesting a communal or familial gathering.

Figures:

- Central Figure: A woman, possibly a mother or caregiver, stands in the center. She is holding an infant and appears to be the focal point of the scene. Her attire includes a head covering, which might indicate a specific cultural or historical context.

- Left Side: Several individuals are gathered around a table or bed. One person seems to be tending to something on the table, while others are observing or assisting. Their expressions and postures suggest concern or attention to a task.

- Right Side: A woman is kneeling or sitting on the floor, engaged in an activity involving a basket or container. Her posture and focus suggest she is performing a domestic task, possibly related to the central figure or the infant.

- Background: Additional figures are present in the background, some standing and others seated. Their activities are less clear, but they contribute to the overall atmosphere of a busy, communal space.

Objects and Details:

- Table/Bed: A central piece of furniture, possibly a table or bed, around which several figures are gathered.

- Basket/Container: A basket or container is being handled by the woman on the right side, suggesting a domestic or caregiving activity.

- Clothing and Attire: The figures are dressed in what appears to be historical or traditional clothing, including head coverings and long garments.

Artistic Style: The sketch is executed in a realistic style, with attention to detail in the figures' expressions, postures, and the folds of their clothing. The use of shading and line work creates a sense of depth and dimension.

Signature and Date: The sketch is signed "Jack Levine" and dated "Dec. 5, 1931," indicating the artist and the time of creation.

Overall, the image captures a moment of communal activity centered around a woman and an infant, set within a historical or traditional context. The detailed rendering of the figures and their interactions suggests a narrative of care, community, and domestic life.