Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 32-48 |
| Gender | Male, 87.5% |
| Fear | 0.1% |
| Confused | 0.3% |
| Calm | 96.8% |
| Sad | 0.3% |
| Happy | 0.4% |
| Angry | 1.2% |
| Disgusted | 0.5% |
| Surprised | 0.4% |

Feature analysis

Amazon

| Label | Confidence |
| --- | --- |
| Person | 98.3% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| people portraits | 92.4% |
| paintings art | 4.4% |
| events parties | 2.6% |

Captions

Microsoft

Created on 2020-04-24

| Caption | Confidence |
| --- | --- |
| a black and white photo of a man | 90.3% |
| a man sitting on a bench posing for the camera | 82.5% |
| a man sitting on a bench | 79.7% |

OpenAI GPT

Created by gpt-4 on 2024-02-07

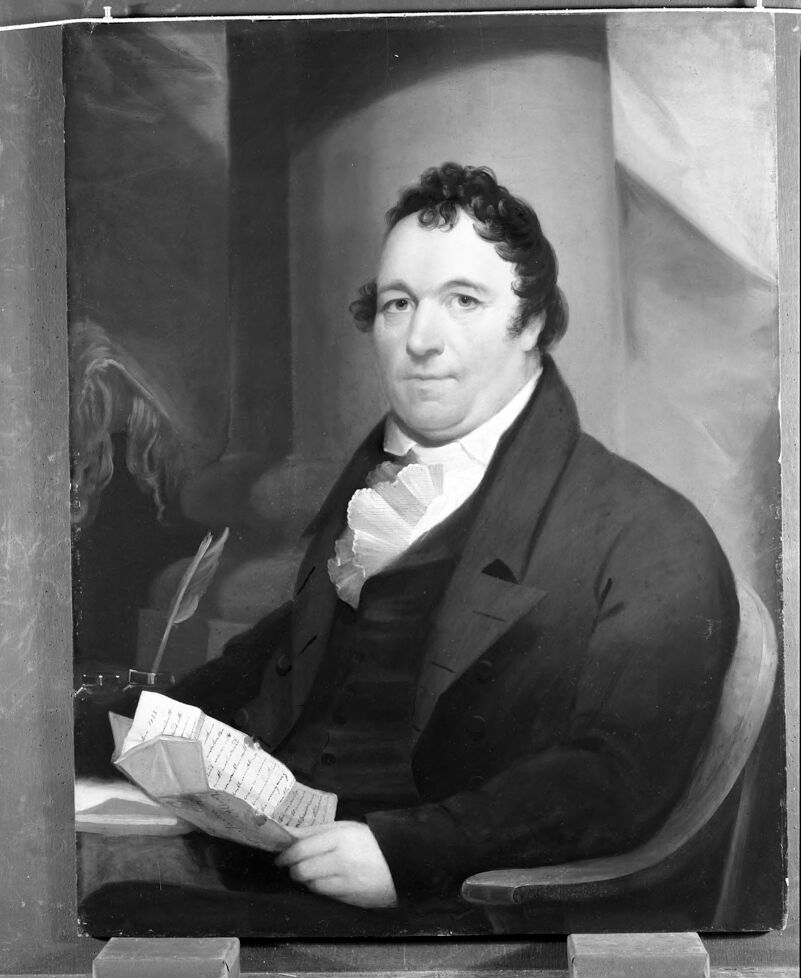

This is a black and white image of a person seemingly from a historical period, judging by the style of the clothing which includes a formal coat and a ruffled shirt. The individual appears to be seated with one arm resting on what could be a desk or a surface obscured in shadows, while the other hand holds a paper or a letter with visible lines of text or writing. Beside the individual lays a quill and what seems like a small, possibly metallic, rectangular object which could be an inkwell. The setting suggests an interior space, with a draped curtain in the background to the right, enhancing the classical or formal tone of the portrait. The image gives the impression of a staged portrait, common in historical settings, where individuals were depicted engaging in intellectual pursuits or with items representing their social status or profession.

Anthropic Claude

Created by claude-3-haiku-48k-20240307 on 2024-03-30

The image appears to be a black and white portrait of a man, likely from a historical period based on his clothing and hairstyle. The man has curly hair and is wearing a formal coat and ruffled shirt. He is seated and appears to be holding some kind of document or papers in his hands. The portrait is framed within a wooden or metal frame, creating a sense of depth and perspective.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-30

The image is a black-and-white portrait of a man sitting in a chair, holding a piece of paper. The man has dark hair and is wearing a dark jacket over a white shirt with a ruffled collar. He is seated in a wooden chair, with his left hand resting on the armrest and his right hand holding the paper. The background of the image appears to be a room with a curtain or drapes behind the man.

The overall atmosphere of the image suggests that it is a formal portrait, possibly from the 19th century. The man's attire and hairstyle are consistent with the fashion of that time period. The image may have been taken in a studio or at the man's home, and it is likely that the photograph was intended to capture the man's likeness and personality.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-11-25

The image depicts a black-and-white photograph of a painting, likely created in the 19th century, featuring a man seated in a chair. He is attired in a dark suit jacket, vest, and white shirt with a ruffled collar, and holds a sheet of paper in his left hand. The paper appears to be a handwritten document, possibly a letter or poem, with illegible writing.

The man's dark hair is styled in a manner that suggests it may have been curled or waved, and he sits in a wooden chair with a curved back. The background of the painting features a light-colored wall with a darker area behind the man, possibly a curtain or a shadow. A feather quill pen lies on a desk or table to the left of the man, adding to the sense of a studious or literary atmosphere.

The overall impression is one of a formal portrait, possibly created for a family member or a notable figure of the time. The level of detail and the subject's attire suggest that the painting was intended to convey a sense of dignity and respectability.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-01-05

The image is a black-and-white portrait of a man sitting on a chair. He is wearing a black coat with a white inner shirt, a white bow tie, and a white pocket square. He is holding a piece of paper in his left hand and a quill pen in his right hand. The man's head is slightly tilted to the left, and he is looking at something in front of him.

Created by amazon.nova-lite-v1:0 on 2025-01-05

The image is a black-and-white portrait of a man, possibly a historical figure, sitting on a chair. He is dressed in formal attire, including a coat and a white cravat, and holds a piece of paper in his hand, suggesting he is reading or writing. A quill pen is placed on the desk in front of him, indicating that he might be a scholar or a writer. The portrait is framed with a black border, adding a classic and timeless touch to the image.

Google Gemini

Created by gemini-2.0-flash on 2025-04-27

This is a black and white portrait of a man seated and holding a letter. He is positioned in the center of the frame, angled slightly to the left.

The man has a somewhat rounded face, light skin, and dark, curly hair. He is wearing a dark suit with a high-collared shirt and a ruffled cravat. His expression is neutral, and he looks directly at the viewer.

He is seated in a chair, with what appears to be a desk or table to his left. On this surface are writing materials, including a quill pen in an inkwell, and the letter he holds. The letter is open, with lines of writing visible on the page.

The background consists of a large, classical architectural column. The light source seems to be coming from the front.

The image appears to be a photograph or a scan of a painting. The edges of the painting and some of the support structure are visible, suggesting that it's a documentation of an artwork rather than the artwork itself. There are also some apparent imperfections and damage to the painting, typical of older works.

Created by gemini-2.0-flash-lite on 2025-04-27

Overall Impression:

The image is a black and white portrait painting. It depicts a well-dressed, older gentleman seated at a desk, holding a letter. The style suggests it's likely from the 18th or early 19th century.

Details:

- Subject: The man is the central figure. He has a round face with short, curly dark hair. He's looking directly at the viewer with a serious expression.

- Clothing: He's dressed in a dark suit with a white ruffled shirt.

- Setting: The man is seated in what appears to be a study or office. In the background, there is what seems to be a tall pillar or column, indicating a room with a grand architectural feature. There are also elements that hint at a desk and what may be curtains in the background.

- Props: He is holding a folded letter in his hands, and on the desk are an inkwell with a quill pen.

- Style/Medium: The painting has a traditional, formal portrait style. The details are carefully rendered, showing realistic features and a sense of depth.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-04-26

The image is a black-and-white portrait of a man dressed in formal attire typical of the late 18th or early 19th century. He is seated in a chair and holding a document or book in his left hand. The man has short, curly hair and is wearing a dark coat with a white shirt and a cravat. The background appears to be a room with a large column and some drapery, giving the setting a formal or official appearance. The man's expression is serious and contemplative, suggesting he might be a person of significance, possibly a statesman, scholar, or legal professional. The portrait is framed, and the frame is visible in the image.