Machine Generated Data

Tags

Color Analysis

Face analysis

Amazon

Microsoft

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 51-69 |
| Gender | Male, 99.7% |
| Surprised | 0.2% |
| Sad | 0.4% |
| Disgusted | 0.1% |
| Confused | 0.3% |
| Calm | 95.1% |
| Angry | 0.1% |
| Fear | 0% |
| Happy | 3.7% |

Feature analysis

Amazon

| Feature | Confidence |
|---|---|
| Painting | 95.9% |

Categories

Imagga

| Category | Score |
|---|---|
| events parties | 96.7% |
| people portraits | 3.2% |

Captions

Microsoft

created on 2020-04-23

| Caption | Confidence |
|---|---|
| a man holding a book | 47.1% |
| a man sitting on top of a book | 33.2% |
| a man sitting on a book | 32% |

OpenAI GPT

Created by gpt-4o-2024-05-13 on 2024-12-29

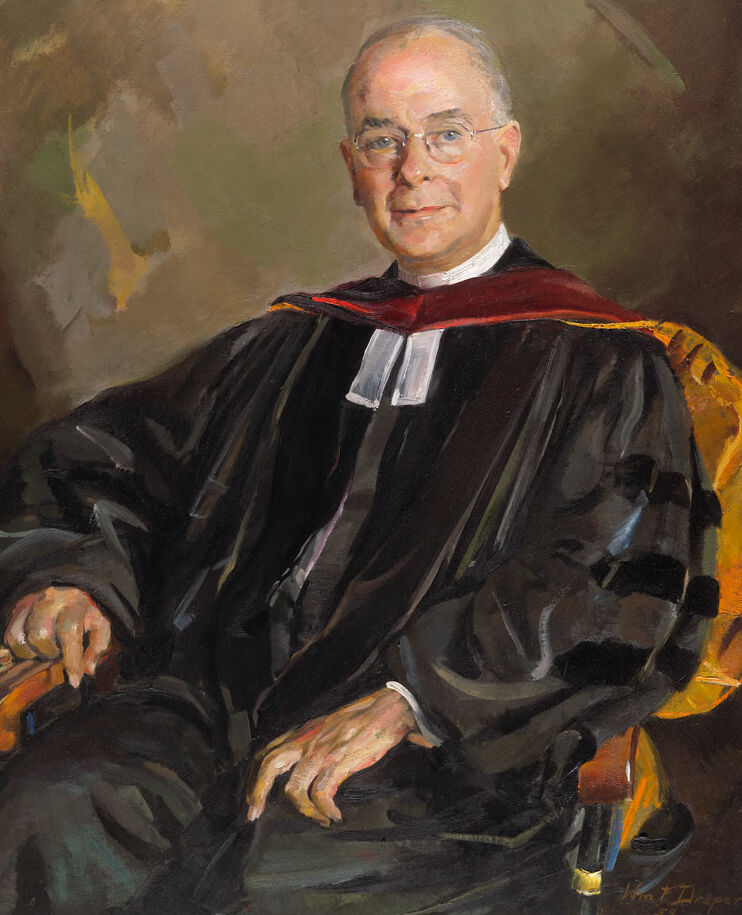

This is a portrait of a person wearing academic regalia. The individual is seated in a wooden chair with a decorative cushion. They are wearing a traditional academic gown with velvet stripes on the sleeves, which typically signifies a doctoral degree. The background of the painting is an abstract mix of earthy tones. The artist's signature can be observed in the lower right corner of the painting.

Created by gpt-4 on 2024-01-25

The image depicts an individual seated in a formal, dignified pose, wearing traditional academic regalia: a black gown with velvet panels and sleeves and a prominent, richly colored red and gold-lined hood indicative of academic achievement and status. The gown suggests a high level of academic or professional distinction. The individual's hands are gently clasped together and rest comfortably on their lap, adding to the composed and respectable demeanor. The background is a muted mix of brown and green tones that complement the formal attire and give a classic touch to the overall composition, with evidence of loose, expressive brushwork that adds dynamism to the static scene.

Anthropic Claude

Created by claude-3-haiku-48k-20240307 on 2024-03-29

The image depicts a man wearing the robes and regalia of a university or academic institution, likely a professor or administrator. He has a serious yet composed expression on his face, and is seated in what appears to be a chair or throne. The painting style is realistic, with attention to detail in the rendering of the man's facial features and the folds of his robes. The background is indistinct, giving the focus to the central figure.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-23

The image is a portrait of a man in academic regalia, seated and looking directly at the viewer. He is wearing a black robe with a red and gold hood draped over his shoulders, and a white clerical collar. The background of the painting is a muted brown color, with some green and yellow accents.

The man's face is rendered in detail, with a serious expression. His eyes are cast downward slightly, giving the impression that he is looking at something below him. His eyebrows are furrowed, and his mouth is set in a firm line. The overall effect is one of gravity and authority.

The artist's signature is visible in the bottom-right corner of the painting, but it is not legible. The style of the painting suggests that it was created in the early 20th century, possibly during the 1920s or 1930s. The use of muted colors and the formal pose of the subject are consistent with the artistic conventions of the time.

Overall, the image presents a dignified and authoritative figure, likely a member of the academic or religious community. The painting's style and composition suggest that it was intended to be a formal portrait, perhaps for display in a university or church setting.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-11-23

The image depicts a portrait of a man dressed in academic attire, seated in a chair with his hands resting on the armrests. He is wearing a black robe with a red cape draped over his shoulders, and a white clerical collar visible underneath. The background of the painting is a muted brown color, with subtle brushstrokes and texture that suggest a traditional oil painting technique.

The overall atmosphere of the image is one of dignity and formality, with the subject's serious expression and the formal attire contributing to a sense of gravitas. The use of muted colors and traditional techniques adds to the sense of tradition and respectability, suggesting that the portrait is intended to convey a sense of authority and importance.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-01-04

The painting depicts a man who appears to be an elderly priest wearing a black robe with a white collar and a red stole. He is sitting on a chair, resting his hands on the armrests, and looking forward with a slight smile. He is wearing eyeglasses and has short hair. The background is blurred, and it appears to be a plain wall. The painting is signed by the artist.

Created by amazon.nova-lite-v1:0 on 2025-01-04

The image is a painting of an older man. He is wearing a black robe with a red cape and a white stole around his neck. He is sitting on a chair with his hands on his lap. He is wearing glasses and has a serious expression on his face. The painting is framed in a black frame. The background is blurry and has a brownish color. The painting is signed by "W.T. Draper" on the bottom right corner.

Google Gemini

Created by gemini-2.0-flash on 2025-04-26

Here is a description of the image:

The image is a portrait of an elderly man in academic regalia. He is seated in a chair, looking directly at the viewer with a slight smile. The man is bald on top, with white hair around the sides and back. He is wearing glasses with thin wire frames.

He is dressed in a black academic gown or robe with black velvet bands, with a red stole or hood draped over his shoulders. Beneath the gown, he appears to be wearing a white shirt with a white clerical collar. The chair has a golden-colored cushion.

The background is a blend of muted greens and browns, with a hint of yellow. The painting style is somewhat impressionistic, with visible brushstrokes. The signature "Hunt T. Draper" along with what appears to be "54" is visible in the lower right corner, indicating the artist and possibly the year of the painting.

Created by gemini-2.0-flash-lite on 2025-04-26

This is a portrait painting of a distinguished-looking, older gentleman. He is seated in a chair and is dressed in academic regalia, which includes a black robe with dark red trim around the collar and a white clerical collar. The man is fair-skinned, has thin, receding hair, and wears eyeglasses. His expression is serious, with a slight hint of a smile. His hands are visible and rest on the arm of the chair. The background is a blurred, warm-toned abstract design of browns, greens, and yellow, which contrasts with the dark tones of the man's clothing. The artwork style is realistic, with a focus on capturing the likeness and dignity of the subject. There is a signature in the bottom right corner, which reads "Hunt Draper '54".

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-04-25

The image is a portrait painting of an elderly man dressed in formal clerical attire. He is seated in a chair with golden upholstery. The man is wearing a black robe with a red and gold stole draped around his neck, and a white clerical collar. He has short, gray hair and is wearing glasses. His hands are resting on the armrests of the chair, and he is looking directly at the viewer with a composed expression.

The background of the painting is a blend of muted colors, primarily shades of brown and green, which provide a neutral backdrop that highlights the subject. The painting is signed by the artist "Wm. F. Draper" in the lower right corner, with the year "59" indicating it was created in 1959. The overall style of the painting is realistic, capturing the subject's likeness and attire in detail.