Machine Generated Data

Face analysis

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 51-69 |
| Gender | Male, 99.4% |
| Fear | 0% |
| Angry | 0.1% |
| Disgusted | 0% |
| Calm | 99.3% |
| Happy | 0% |
| Confused | 0.1% |
| Sad | 0.2% |
| Surprised | 0.2% |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 65 |
| Gender | Male |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| pets animals | 58.1% |
| paintings art | 11.4% |
| events parties | 11.1% |
| people portraits | 8.5% |
| streetview architecture | 5.3% |
| nature landscape | 3.2% |
| text visuals | 1.3% |

Captions

Microsoft

Created on 2019-11-09

| Caption | Confidence |
| --- | --- |
| a man standing in front of a mirror posing for the camera | 73.1% |
| a man standing in front of a mirror | 73% |
| a painting of a man standing in front of a mirror | 68.2% |

Azure OpenAI

Created on 2024-12-03

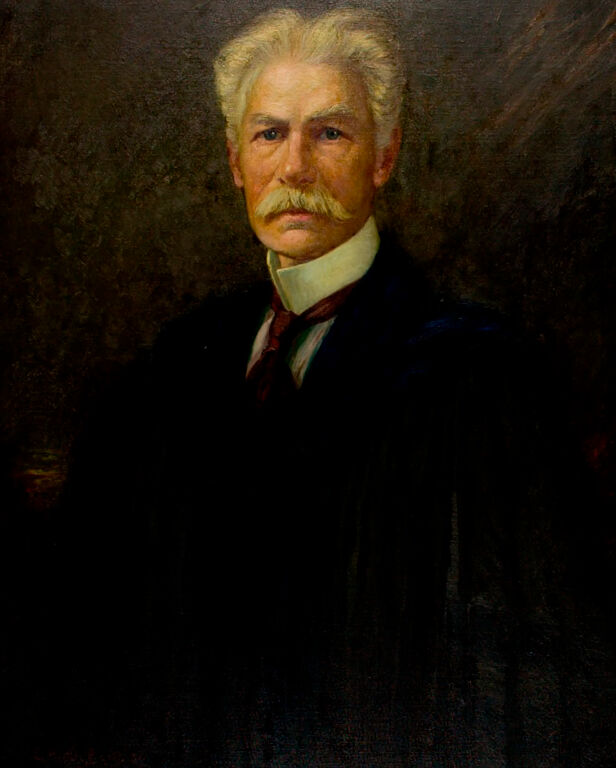

This is an image of a framed portrait painting. The painting depicts a person dressed in a dark suit with a white shirt and a red tie. The style of the suit and the visible hairstyle suggest a formal, possibly historical, representation. The background of the portrait is a dark, muted color, providing a stark contrast to the light color of the hair and the collar of the shirt. Both the portrait and the frame have an aged appearance, suggesting that the painting could be relatively old or created to look that way. The frame itself is simple and wooden, with a natural finish that complements the colors of the painting.

Anthropic Claude

Created on 2024-11-27

The image appears to be a portrait painting of an older man with gray hair and a mustache, wearing a black suit and a white collar. He has a serious expression on his face and is looking directly at the viewer. The painting is framed in an ornate wooden frame, giving it an antique, classical appearance.

Meta Llama

Created on 2024-11-22

The image presents a portrait of an older man with white hair, dressed in formal attire, set against a dark background. The subject's facial expression is neutral, and he is depicted wearing a black suit jacket, a white collared shirt, and a red tie. His white hair is styled in a manner that suggests it may be combed back or styled with some form of hair product. The portrait is framed in a wooden frame, which adds a sense of elegance and sophistication to the overall piece. The dark background behind the subject helps to draw attention to his face and upper body, creating a sense of depth and dimensionality in the image. Overall, the portrait exudes a sense of dignity and refinement, suggesting that it may have been created for a formal occasion or as a tribute to the subject's character. The use of muted colors and the subject's serious expression contribute to a somber and contemplative atmosphere, inviting the viewer to reflect on the subject's life and legacy.