Machine Generated Data

Tags

Color Analysis

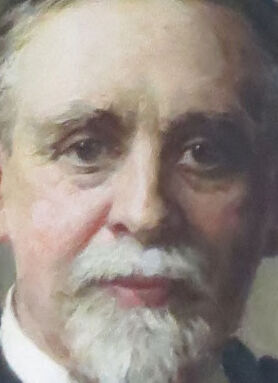

Face analysis

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 57-65 |
| Gender | Male, 99.9% |
| Calm | 97.4% |
| Surprised | 6.5% |
| Fear | 5.9% |
| Sad | 2.4% |
| Disgusted | 0.4% |
| Confused | 0.3% |
| Happy | 0.2% |
| Angry | 0.1% |

Feature analysis

AWS Rekognition

| Baton | 99.3% |

Categories

Imagga

created on 2023-10-07

| Category | Confidence |
| --- | --- |
| pets animals | 51.4% |
| people portraits | 18.5% |
| events parties | 16.3% |
| paintings art | 7.3% |
| nature landscape | 1.9% |
| streetview architecture | 1.7% |
| food drinks | 1.2% |
| text visuals | 1% |

Captions

Microsoft

created by unknown on 2018-05-09

| Caption | Confidence |
| --- | --- |
| a man standing in front of a mirror posing for the camera | 56.5% |
| a man standing in front of a mirror | 56.4% |
| a painting of a man standing in front of a mirror | 56.3% |

Salesforce

Created by general-english-image-caption-blip-2 on 2025-06-27

a painting of a man in a black robe and white tie

Created by general-english-image-caption-blip on 2025-04-28

a photograph of a portrait of a man in a black coat and a red coat

OpenAI GPT

Created by gpt-4o-2024-05-13 on 2025-01-04

The image depicts an oil painting of a seated man dressed in academic robes, which are predominantly black with red accents. He is holding a rolled document in his right hand, possibly a diploma or certificate. The painting is framed with an ornate, carved wooden frame that adds a formal and distinguished appearance to the portrait. The background of the painting is subdued and neutral, focusing attention on the figure's attire and pose. The man's left arm rests on the arm of his chair, giving a composed and dignified impression.

Created by gpt-4 on 2024-12-03

The image depicts an oil painting framed in a dark brown, ornate wooden frame. The painting features a person dressed in a traditional black gown with red accents, which might suggest academic or judicial attire. The individual is depicted seated with their hands resting on the armrests; one hand loosely holds what appears to be a rolled document or diploma. The background of the painting is a muted blend of grey and brown tones, providing a stark contrast to the dark, rich color of the gown. The emphasis on the clothing and the document suggests the portrayed individual holds a position of authority or has achieved academic success. The painting is realistic, with attention to the textures of the fabric and the fine details of the hands and clothing.

Anthropic Claude

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-27

This is a formal portrait painting in a classical style, housed in an ornate gold frame. The subject is wearing academic or scholarly attire - a black robe with red accents and a white collar. They appear to be an older gentleman with a gray beard, seated in a dignified pose while holding what appears to be a rolled document or scroll in one hand. The painting has a dark, muted color palette typical of formal portraits from the late 19th or early 20th century, with the figure set against a plain background. The brushwork and style suggest this is a professional portrait, likely of someone of academic or institutional importance.

Created by claude-3-5-sonnet-20241022 on 2024-12-29

This is a formal portrait painting in a classical style, housed in an ornate golden frame. The subject is wearing academic or judicial robes in black with a touch of red visible at the collar. They are portrayed in a seated position against a neutral background, holding what appears to be a rolled document or scroll in one hand. The painting style appears to be from the late 19th or early 20th century, with careful attention paid to the details of the clothing and the dignified pose typical of formal portraiture from that era. The overall tone is somber and stately, befitting what appears to be an academic or legal professional of high standing.

Created by claude-3-haiku-20240307 on 2024-11-27

The image appears to be a portrait painting of an elderly man with a long white beard and wearing a dark academic robe with red trim. He is seated in a contemplative pose, with his hands clasped in front of him. The painting has an ornate, gilded frame that adds to the formal, academic setting. The man's expression is serious and thoughtful, suggesting he may be a scholar or person of importance.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-29

The image depicts a framed portrait of an older man, likely a professor or academic, dressed in formal attire. The subject is attired in a black robe with red trim on the collar and holds a rolled-up document in his right hand. He wears a white shirt and black tie underneath the robe, and his hair is gray and neatly combed back.

The background of the portrait features a muted, beige-colored wall, which provides a subtle contrast to the subject's dark attire. The overall atmosphere of the image suggests a sense of formality and professionalism, consistent with the subject's academic or professional setting.

The portrait is presented in a wooden frame, which adds a touch of warmth and elegance to the overall composition. The frame's design and color complement the subject's attire and the background, creating a cohesive and visually appealing image.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-12-02

The image depicts a framed oil painting of a man in academic regalia, holding a rolled-up paper.

The man is dressed in a black robe with red trim on the shoulders and a black bow tie. He has short gray hair and a white beard and mustache. His right hand is extended outward, and he holds a rolled-up paper in his left hand. The background of the painting is a muted brown color, with a subtle gradient that gives the impression of depth.

The painting is presented in a wooden frame, which adds a sense of elegance and sophistication to the overall piece. The frame is made of a light-colored wood, possibly oak or pine, and features a simple yet ornate design.

Overall, the image presents a dignified and professional portrait of a man who appears to be a scholar or academic. The use of muted colors and subtle shading creates a sense of depth and dimensionality, drawing the viewer's eye into the painting.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-01-05

The painting depicts a man who is standing in front of a gray wall. He is wearing a black robe with a red lining and a white inner shirt. He is also wearing a black tie. The man has a white beard and mustache. He is holding a white scroll in his left hand. The painting is framed in a golden frame.

Created by amazon.nova-lite-v1:0 on 2025-01-05

A portrait painting of a man is displayed in a frame. The man is wearing a black robe with a red collar and a white inner shirt. He is holding a rolled paper in his left hand and a stick in his right hand. The painting is mounted on a white wall. The painting has a brown frame with a golden border. The painting is in a portrait style.

Google Gemini

Created by gemini-2.0-flash on 2025-04-27

Here's a description of the image:

The image is a framed portrait of an older gentleman. The man has fair skin, with graying hair and a neatly trimmed white goatee. He is dressed in what appears to be formal attire, with a dark, flowing robe or gown over a suit or dress shirt and tie. The robe has a hint of red lining at the collar. In his left hand, he holds a rolled-up document, possibly a scroll or diploma.

He looks directly at the viewer with a composed expression. The background is a softly lit, neutral color, with some subtle suggestion of architectural elements or draping.

The frame itself is ornate, with a warm, golden color and some aged details. A small plaque is visible at the bottom center of the frame, presumably containing information about the portrait's subject or artist. The overall impression is that of a traditional, formal portrait.

Created by gemini-2.0-flash-lite on 2025-04-27

Here's a description of the image:

Overall Impression:

The image is a framed portrait of an older gentleman. It appears to be a classical oil painting.

Subject:

- The man has a distinguished appearance, with gray hair and a white beard. His expression is serious and composed.

- He wears a dark, possibly black, academic gown or robe.

- The red trim suggests a position of importance or authority.

- He holds a rolled paper in one hand and extends the other hand out to the side.

Composition and Style:

- The portrait is framed in a decorative, golden-colored frame.

- The background is a muted blend of colors.

- The lighting is relatively even and creates a sense of depth.

- The style of painting is realistic, with detailed brushstrokes.

Possible Interpretation:

Based on his attire and the overall impression, the portrait likely depicts a judge, academic, or other figure of authority from the late 19th or early 20th century.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-04-26

The image is a portrait painting of an older man with a white beard and mustache. He is dressed in formal academic attire, which includes a black robe with red accents, typically associated with scholarly or judicial roles. The man is seated and holding a rolled document, possibly a diploma or scroll, in his right hand. His left hand is resting on the arm of the chair. The background is plain and muted, allowing the focus to remain on the subject. The painting is framed in an ornate wooden frame, adding to the formal and dignified appearance of the portrait. The overall style suggests that it might be an official or commemorative portrait, likely of someone with significant academic or professional achievements.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-06-24

The image depicts a formal portrait of a man painted in a classical style. The subject is dressed in a dark, knee-length robe with a red collar, which is reminiscent of academic or judicial attire, possibly indicating a figure of authority or academia. The man has a white beard and is holding a rolled document or diploma in his left hand, suggesting he may be celebrating an achievement, such as a graduation or a professional accomplishment. The background is a muted, textured greenish-gray, giving the painting a quiet and dignified atmosphere. The painting is framed with an ornate, antique gold frame that complements the subject's formal attire and the serious tone of the portrait. The overall composition and attire suggest that the individual is of importance or holds a respected position.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-06-24

This image is a framed oil painting of a man, likely a portrait of a prominent figure. The man is depicted wearing a formal black academic gown with a red lining, suggesting a scholarly or academic role. He has a white beard and is looking directly at the viewer. In his right hand, he holds a rolled document or diploma, which further emphasizes his scholarly or professional status. The background is a simple, dark gradient, which helps to focus attention on the subject. The painting is framed in an ornate, gilded frame, adding to the overall sense of importance and formality.