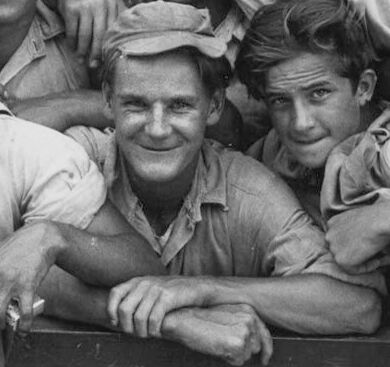

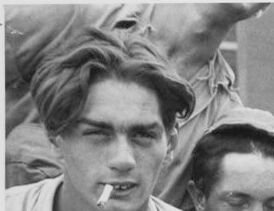

Machine Generated Data

Tags

Amazon

created on 2023-10-06

| Tag | Confidence (%) |
|---|---|
| People | 100 |
| Face | 100 |
| Head | 100 |
| Photography | 100 |
| Portrait | 100 |
| Person | 98.9 |
| Person | 98.2 |
| Adult | 98.2 |
| Male | 98.2 |
| Man | 98.2 |
| Person | 97.9 |
| Adult | 97.9 |
| Male | 97.9 |
| Man | 97.9 |
| Person | 97.4 |
| Person | 97.3 |
| Adult | 97.3 |
| Male | 97.3 |
| Man | 97.3 |
| Person | 97.2 |
| Adult | 97.2 |
| Male | 97.2 |
| Man | 97.2 |
| Person | 96.3 |
| Adult | 96.3 |
| Male | 96.3 |
| Man | 96.3 |
| Person | 96.1 |
| Person | 96.1 |
| Person | 95.1 |
| Person | 94.3 |
| Adult | 94.3 |
| Bride | 94.3 |
| Female | 94.3 |
| Wedding | 94.3 |
| Woman | 94.3 |
| Person | 94.2 |
| Person | 92.4 |
| Adult | 92.4 |
| Male | 92.4 |
| Man | 92.4 |
| Happy | 88 |
| Smile | 88 |
| American Football | 76.4 |
| American Football (Ball) | 76.4 |
| Ball | 76.4 |
| Football | 76.4 |
| Sport | 76.4 |
| Groupshot | 71.3 |
| Body Part | 69.2 |
| Finger | 69.2 |
| Hand | 69.2 |
| Baseball Cap | 61.3 |
| Cap | 61.3 |
| Clothing | 61.3 |
| Hat | 61.3 |
| Crowd | 61.1 |

Clarifai

created on 2018-05-10

Imagga

created on 2023-10-06

Google

created on 2018-05-10

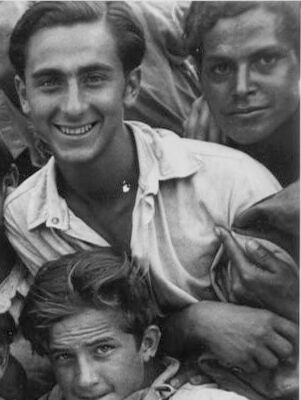

| Tag | Confidence (%) |
|---|---|
| people | 96.7 |
| photograph | 95.8 |
| black and white | 90.1 |
| social group | 89.9 |
| snapshot | 81.8 |
| monochrome photography | 80.9 |
| photography | 78.6 |
| human | 68.8 |
| family | 68.6 |
| smile | 67.5 |
| monochrome | 67.3 |
| team | 63.9 |
| vintage clothing | 58.1 |
| crew | 56.5 |
| human behavior | 55.1 |


Face analysis


AWS Rekognition

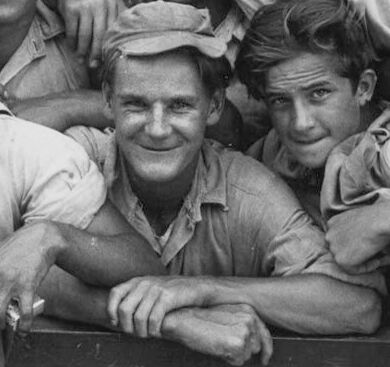

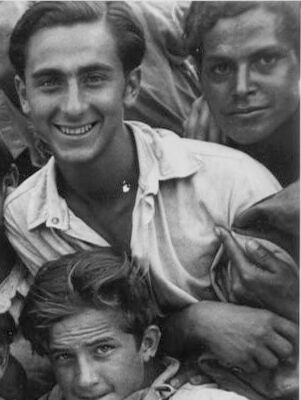

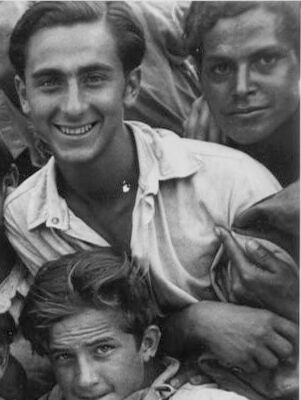

| Attribute | Value |
|---|---|
| Age | 18-26 |
| Gender | Male, 100% |
| Happy | 98.8% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Sad | 2.2% |
| Angry | 0.7% |
| Confused | 0.1% |
| Calm | 0.1% |
| Disgusted | 0% |

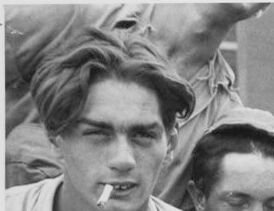

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 18-26 |
| Gender | Male, 100% |
| Calm | 61.5% |
| Confused | 25.1% |
| Angry | 11.2% |
| Surprised | 6.4% |
| Fear | 5.9% |
| Sad | 2.4% |
| Disgusted | 0.8% |
| Happy | 0.3% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 20-28 |
| Gender | Male, 99.8% |
| Happy | 99.5% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Sad | 2.2% |
| Angry | 0.2% |
| Confused | 0.1% |
| Disgusted | 0% |
| Calm | 0% |

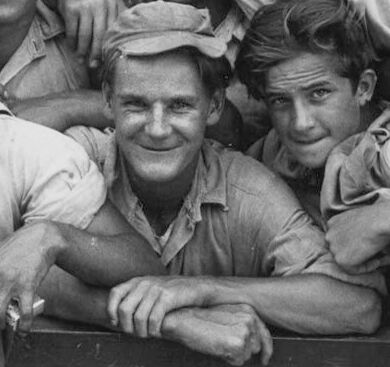

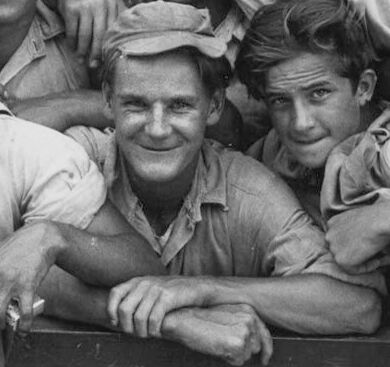

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 43-51 |
| Gender | Male, 99.9% |
| Happy | 99.1% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Sad | 2.2% |
| Confused | 0.3% |
| Calm | 0.1% |
| Angry | 0.1% |
| Disgusted | 0.1% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 33-41 |
| Gender | Female, 63.4% |
| Calm | 88% |
| Surprised | 6.7% |
| Fear | 6.3% |
| Happy | 3.7% |
| Sad | 3.1% |
| Confused | 1.9% |
| Angry | 1.2% |
| Disgusted | 0.6% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 24-34 |
| Gender | Male, 100% |
| Happy | 97.8% |
| Surprised | 6.5% |
| Fear | 5.9% |
| Sad | 2.2% |
| Angry | 0.9% |
| Calm | 0.4% |
| Confused | 0.2% |
| Disgusted | 0.1% |

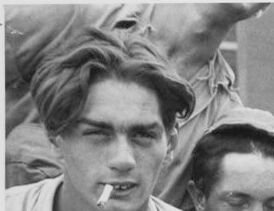

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 25-35 |
| Gender | Male, 99.9% |
| Calm | 75.7% |
| Happy | 8.6% |
| Surprised | 6.7% |
| Fear | 6.1% |
| Angry | 5.1% |
| Confused | 4.6% |
| Disgusted | 2.8% |
| Sad | 2.7% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 9-17 |
| Gender | Female, 62.7% |
| Happy | 79.9% |
| Fear | 11.6% |
| Surprised | 6.6% |
| Calm | 3.3% |
| Sad | 2.6% |
| Confused | 1.7% |
| Angry | 1.2% |
| Disgusted | 0.9% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 25-35 |
| Gender | Male, 99.8% |
| Happy | 99.2% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Sad | 2.2% |
| Angry | 0.2% |
| Confused | 0.1% |
| Calm | 0.1% |
| Disgusted | 0.1% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 24-34 |
| Gender | Male, 89.4% |
| Calm | 95.9% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Sad | 3.6% |
| Angry | 0.1% |
| Disgusted | 0.1% |
| Confused | 0.1% |
| Happy | 0% |

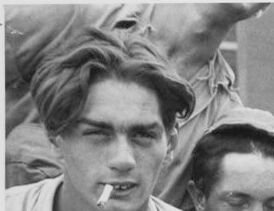

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 24-34 |
| Gender | Male, 98.5% |
| Calm | 59.7% |
| Sad | 44.9% |
| Fear | 7.1% |
| Surprised | 7.1% |
| Confused | 7% |
| Happy | 1.3% |
| Disgusted | 1.1% |
| Angry | 0.9% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 23-33 |
| Gender | Male, 99.9% |
| Happy | 97.9% |
| Surprised | 6.5% |
| Fear | 5.9% |
| Sad | 2.2% |
| Calm | 1% |
| Confused | 0.4% |
| Disgusted | 0.1% |
| Angry | 0.1% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 28-38 |
| Gender | Male, 97.2% |
| Happy | 99.8% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Sad | 2.2% |
| Angry | 0% |
| Calm | 0% |
| Disgusted | 0% |
| Confused | 0% |

Microsoft Cognitive Services

| Face | Age | Gender |
|---|---|---|
| 1 | 44 | Male |
| 2 | 35 | Male |
| 3 | 23 | Male |
| 4 | 22 | Male |
| 5 | 62 | Male |
| 6 | 38 | Male |
| 7 | 46 | Male |
| 8 | 23 | Male |
| 9 | 31 | Male |
| 10 | 28 | Female |
| 11 | 36 | Male |
| 12 | 51 | Male |
| 13 | 30 | Male |

Google Vision

| Face | Surprise | Anger | Sorrow | Joy | Headwear | Blurred |
|---|---|---|---|---|---|---|
| 1 | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely |
| 2 | Very unlikely | Very unlikely | Very unlikely | Likely | Very unlikely | Very unlikely |
| 3 | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely |
| 4 | Very unlikely | Very unlikely | Very unlikely | Very likely | Very unlikely | Very unlikely |
| 5 | Very unlikely | Very unlikely | Very unlikely | Very likely | Very likely | Very unlikely |
| 6 | Very unlikely | Very unlikely | Very unlikely | Very likely | Very likely | Very unlikely |
| 7 | Very unlikely | Very unlikely | Very unlikely | Possible | Very unlikely | Very unlikely |
| 8 | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely |
| 9 | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Possible | Very unlikely |
| 10 | Very unlikely | Very unlikely | Very unlikely | Very likely | Very unlikely | Very unlikely |
| 11 | Very unlikely | Very unlikely | Very unlikely | Likely | Very unlikely | Very unlikely |
| 12 | Very unlikely | Very unlikely | Very unlikely | Possible | Likely | Very unlikely |
| 13 | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very likely | Very unlikely |

Feature analysis

Amazon

| Label | Confidence |
|---|---|
| Person | 98.9% |
| Person | 98.2% |
| Person | 97.9% |
| Person | 97.4% |
| Person | 97.3% |
| Person | 97.2% |
| Person | 96.3% |
| Person | 96.1% |
| Person | 96.1% |
| Person | 95.1% |
| Person | 94.3% |
| Person | 94.2% |
| Person | 92.4% |
| Adult | 98.2% |
| Adult | 97.9% |
| Adult | 97.3% |
| Adult | 97.2% |
| Adult | 96.3% |
| Adult | 94.3% |
| Adult | 92.4% |
| Male | 98.2% |
| Male | 97.9% |
| Male | 97.3% |
| Male | 97.2% |
| Male | 96.3% |
| Male | 92.4% |
| Man | 98.2% |
| Man | 97.9% |
| Man | 97.3% |
| Man | 97.2% |
| Man | 96.3% |
| Man | 92.4% |
| Bride | 94.3% |
| Female | 94.3% |
| Woman | 94.3% |
| American Football (Ball) | 76.4% |

Categories

Imagga

| Category | Confidence |
|---|---|
| people portraits | 99.3% |

Captions

Microsoft

created on 2018-05-10

| Caption | Confidence |
|---|---|
| a group of people posing for a photo | 98.2% |
| a group of people posing for the camera | 98.1% |
| a group of people posing for a picture | 98% |