Machine Generated Data

Tags

Color Analysis

Face analysis

Amazon

Microsoft

Imagga

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 23-33 |
| Gender | Male, 100% |
| Calm | 88.2% |
| Confused | 8.5% |
| Surprised | 6.4% |
| Fear | 5.9% |
| Sad | 2.3% |
| Angry | 1.7% |
| Disgusted | 0.5% |
| Happy | 0.1% |

Feature analysis

Amazon

| Feature | Confidence |
|---|---|
| Adult | 99.6% |

Categories

Imagga

| Category | Confidence |
|---|---|
| pets animals | 66.9% |
| nature landscape | 17.9% |
| paintings art | 9.5% |
| people portraits | 2.5% |
| streetview architecture | 2.3% |

Captions

Microsoft

Created by unknown on 2018-05-10

| Caption | Confidence |
|---|---|
| a group of people in uniform | 95.3% |
| a group of people posing for a photo | 93% |
| a group of people in uniform posing for a photo | 92.9% |

Clarifai

Created by general-english-image-caption-blip on 2025-05-28

| Caption | Confidence |
|---|---|
| a photograph of a man in overalls and a hat on a street | -100% |

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-05-27

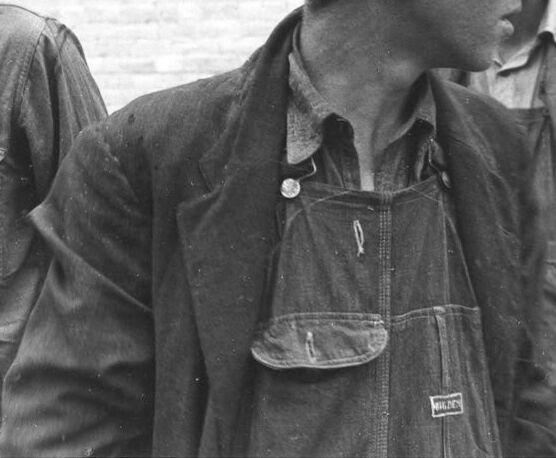

The image depicts a black-and-white photograph of four men standing in front of a brick wall. The man on the left is wearing a cap, a collared shirt, and overalls with suspenders. The man on the right is wearing a cap, a collared shirt, and overalls without suspenders. He has his arm around the shoulder of the man on the left. The man in the background on the left is wearing a cap and a collared shirt with a vest. The man in the background on the right is wearing a collared shirt and overalls.

The overall atmosphere of the image suggests that it was taken during a work-related event or gathering, possibly in the early 20th century. The men's attire and the setting imply a rural or industrial context, with the brick wall in the background adding to the sense of a specific location. The image conveys a sense of camaraderie and shared purpose among the men, as they stand together in a casual yet purposeful manner.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-05-27

The image depicts a black-and-white photograph of four men, with the two in the foreground wearing overalls and caps. The man on the left has a serious expression, while the man on the right appears to be speaking or shouting. In the background, two additional men are visible, although their faces are not clearly discernible.

The overall atmosphere of the image suggests that it may have been taken during a labor strike or protest, given the attire and demeanor of the individuals depicted. The photograph appears to be an archival or historical document, capturing a moment in time from a bygone era.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-05-27

The image features a black-and-white photograph of four men, possibly workers, standing in front of a brick wall. The man on the left is wearing denim overalls with a logo on the chest and a cap. The man next to him is wearing denim overalls with a logo on the left chest and a hat. The man in the middle is wearing denim overalls with a logo on the right chest and a hat. The man on the right is wearing denim overalls with a logo on the left chest and a hat.

Created by amazon.nova-pro-v1:0 on 2025-05-27

The black-and-white photo shows four men standing together, probably at a work site. The man in front is wearing a hat and a jacket with an apron on top, while the man next to him is wearing a cap and an apron. Behind them are two other men, one of whom is wearing a hat. They are all wearing long sleeves. A brick wall stands behind the group.

Google Gemini

Created by gemini-2.0-flash on 2025-05-23

Here is a description of the image:

This is a black-and-white photograph of a group of men, likely laborers, standing together.

In the foreground, the man on the left has a stern expression and is wearing overalls and a striped cap. The man next to him has a jacket over his overalls and a newsboy cap.

In the background, there are other men, partially obscured, all similarly dressed in work clothes, with one wearing a cap. Behind them is a brick wall.

The style of the clothes and the quality of the photograph suggest that it might be a historical image, possibly from the early to mid-20th century.

Created by gemini-2.0-flash-lite on 2025-05-23

Here's a description of the image:

The image is a black-and-white photograph depicting a group of men, most likely from the early to mid-20th century. The focus is on two men in the foreground, both wearing denim overalls, a common work outfit of the era. The man on the left is looking directly at the camera and wearing a striped cap. The man on the right is looking off to the side. He is also wearing a cap and a dark jacket over his overalls. In the background, a third man is partially visible, also wearing a cap and a dark sweater. The scene appears to be outdoors, with a brick wall providing the backdrop. The image conveys a sense of the working class and the conditions of the time. The lighting is somewhat harsh, and the image has a classic, documentary feel.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-05-29

The image is a black-and-white photograph depicting a group of men, possibly laborers or workers, from a bygone era. The men are dressed in work attire, including overalls and caps, which suggests they might be engaged in manual labor or industrial work.

Key details include:

- The men are wearing denim overalls, some of which have visible brand labels.

- Their caps vary in style, with one man wearing a flat cap and another a newsboy cap.

- The expressions on their faces appear serious or focused, which might indicate they are in the midst of work or taking a brief pause.

- The background is a plain brick wall, which could suggest an industrial or urban setting.

The overall mood of the image conveys a sense of hard work and possibly the economic conditions of the time, reflecting a historical period where manual labor was a significant part of daily life.