Machine Generated Data

Tags

Amazon

created on 2023-10-06

| Tag | Confidence |
|---|---|
| Adult | 99.4 |
| Male | 99.4 |
| Man | 99.4 |
| Person | 99.4 |
| Adult | 99.2 |
| Male | 99.2 |
| Man | 99.2 |
| Person | 99.2 |
| Clothing | 93.7 |
| Hat | 93.7 |
| Face | 91.5 |
| Head | 91.5 |
| Musical Instrument | 91.3 |
| Car | 89.3 |
| Transportation | 89.3 |
| Vehicle | 89.3 |
| Guitar | 86.4 |
| Machine | 79.9 |
| Wheel | 79.9 |
| Violin | 64 |
| Coat | 57.7 |
| Cello | 57.2 |
| Guitarist | 57.2 |
| Leisure Activities | 57.2 |
| Music | 57.2 |
| Musician | 57.2 |
| Performer | 57.2 |
| Photography | 56.9 |
| Portrait | 56.9 |
| Sun Hat | 55.7 |

Clarifai

created on 2018-05-10

| Tag | Confidence |
|---|---|
| people | 99.9 |
| group | 99 |
| group together | 98.6 |
| adult | 98.5 |
| man | 97.8 |
| administration | 96.7 |
| music | 96.3 |
| musician | 95.7 |
| two | 95.4 |
| leader | 95.1 |
| vehicle | 94.9 |
| wear | 94.4 |
| several | 93.9 |
| monochrome | 93.7 |
| three | 92.8 |
| military | 91.1 |
| four | 90 |
| outfit | 89 |
| portrait | 88.4 |
| instrument | 88 |

Imagga

created on 2023-10-06

Google

created on 2018-05-10

| Tag | Confidence |
|---|---|
| photograph | 95.4 |
| black and white | 92.3 |
| monochrome photography | 85.1 |
| photography | 81.9 |
| string instrument | 79.5 |
| music | 77.1 |
| musical instrument accessory | 71.1 |
| gentleman | 69.8 |
| musical instrument | 66.3 |
| monochrome | 64 |
| string instrument | 63.2 |
| musician | 62.3 |
| violin family | 58.5 |
| bowed string instrument | 55.1 |

Microsoft

created on 2018-05-10

| Tag | Confidence |
|---|---|
| person | 97.5 |
| man | 95 |
| old | 87 |
| bowed instrument | 80.5 |
| white | 70.3 |
| suit | 65.3 |
| vintage | 36 |
| cello | 22.6 |
| bass fiddle | 13.1 |

Color Analysis

Face analysis

Amazon

Microsoft

AWS Rekognition

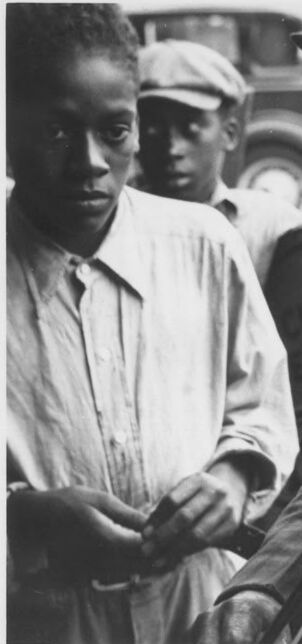

| Attribute | Value |
|---|---|
| Age | 38-46 |
| Gender | Male, 99.2% |
| Sad | 99.9% |
| Calm | 17.7% |
| Surprised | 6.5% |
| Fear | 6.1% |
| Angry | 0.9% |
| Disgusted | 0.5% |
| Happy | 0.3% |
| Confused | 0.3% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 23-31 |
| Gender | Female, 56.4% |
| Calm | 98.6% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Sad | 2.5% |
| Confused | 0.1% |
| Angry | 0% |
| Happy | 0% |
| Disgusted | 0% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 18-26 |
| Gender | Male, 96.6% |
| Surprised | 80.4% |
| Calm | 43.2% |
| Fear | 6.8% |
| Sad | 2.5% |
| Happy | 2% |
| Confused | 1.6% |
| Disgusted | 1.2% |
| Angry | 0.7% |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 34 |
| Gender | Male |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 43 |
| Gender | Male |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very likely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
|---|---|
| paintings art | 63.8% |
| pets animals | 10.8% |
| streetview architecture | 8.7% |
| cars vehicles | 6.1% |
| interior objects | 5.5% |
| people portraits | 2.1% |
| nature landscape | 1.4% |

Captions

Microsoft

created on 2018-05-10

| Caption | Confidence |
|---|---|
| a vintage photo of a man wearing a suit and tie | 97% |
| a vintage photo of a man in a suit and tie | 96.9% |
| a vintage photo of a man holding a violin | 76.9% |

Text analysis

Amazon

CURB

1

ERVIC

but