Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 12-22 |
| Gender | Female, 52.4% |
| Disgusted | 46.1% |
| Sad | 45.1% |
| Fear | 45.1% |
| Angry | 45.4% |
| Confused | 45.1% |
| Calm | 52.7% |
| Surprised | 45.3% |
| Happy | 45.2% |

Feature analysis

Amazon

AWS Rekognition

| Label | Confidence |
| --- | --- |
| Painting | 97.7% |

Categories

Imagga

Created on 2020-04-24

| Category | Confidence |
| --- | --- |
| interior objects | 93% |
| paintings art | 4.7% |
| macro flowers | 1% |

Captions

Clarifai

No captions written

Salesforce

Created by general-english-image-caption-blip on 2025-04-28

a photograph of a painting of a man on a horse

Created by general-english-image-caption-blip-2 on 2025-06-27

a painting of indian women on horseback

OpenAI GPT

Created by gpt-4o-2024-05-13 on 2024-12-29

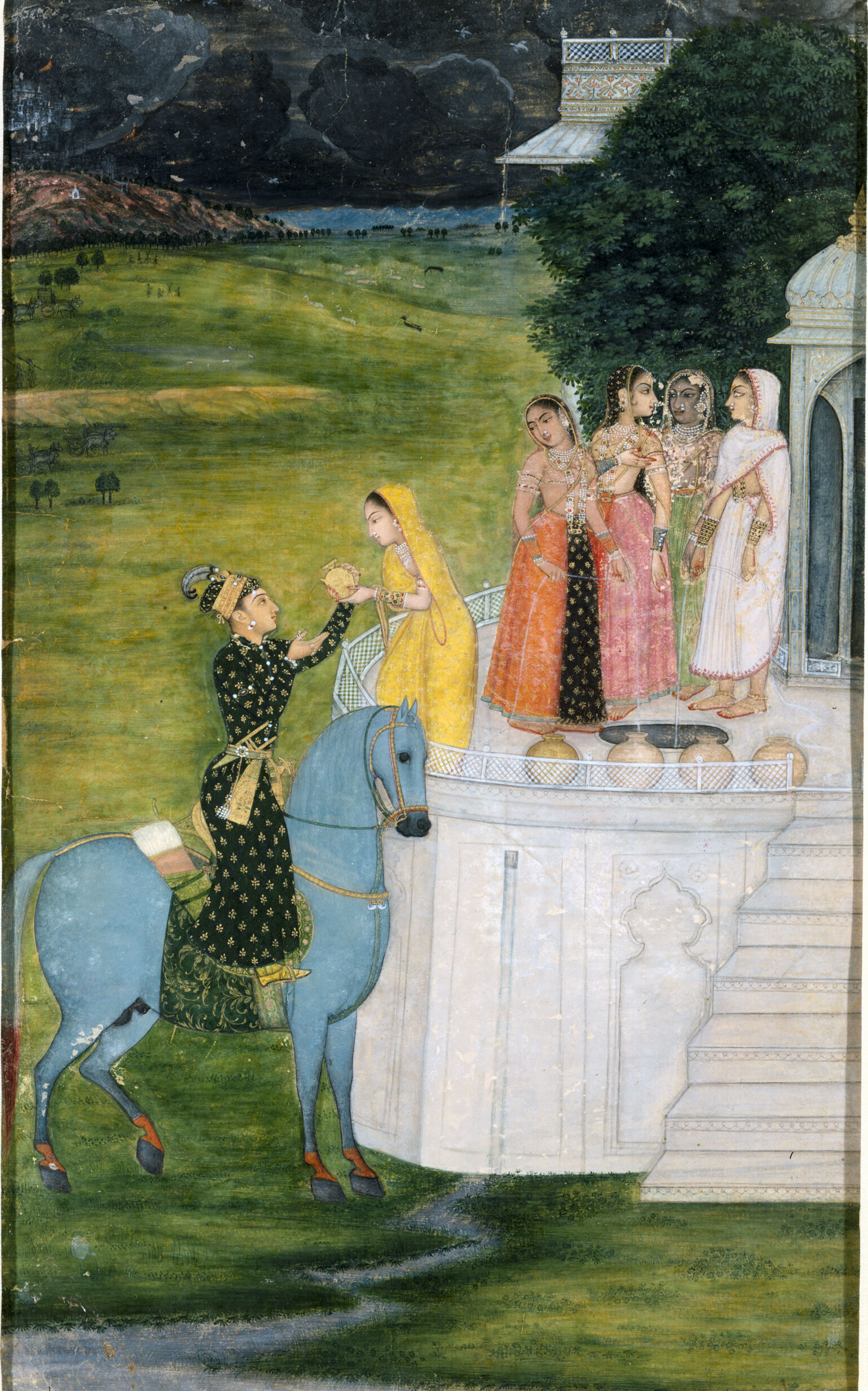

This image depicts a traditional Indian painting scene with a nobleman or prince on horseback, offering a gift to a woman standing on a terrace. The nobleman is dressed in vibrant, ornate clothing and a headpiece, indicative of royalty or high status. The horse is beautifully painted in blue, with intricate details on the saddle and harness.

The woman receiving the gift is dressed in a bright yellow veil and traditional attire, adorned with jewelry. Around her, several other women, also dressed in elegant and colorful traditional garments, stand in a line on the terrace. The background of the image includes a detailed landscape with expansive green fields, scattered trees, and dark, cloudy skies, giving a sense of depth and atmosphere to the scene. The architecture of the building features elaborate details and a staircase, further emphasizing the opulence of the setting.

Created by gpt-4 on 2024-12-03

The image is a traditional Indian miniature painting, featuring several individuals in an outdoor setting. At the left, a person is depicted riding a horse with intricate harness details and approaching a group of individuals standing on a balcony-like structure. The architecture suggests a palatial building with a white stone balustrade and stairs leading up to the platform where the group stands.

The individuals on the platform appear to be dressed in fine, traditional South Asian attire with rich ornamentation, suggesting they may be of high social standing or royalty. The environment features an expansive green landscape with a backdrop of hills and a body of water. The sky is dark, perhaps indicating an approaching storm or the time of day nearing twilight. Trees and smaller architectural elements dot the landscape, giving a sense of a well-maintained estate or royal grounds.

The technique and artistry of the painting suggest it may have been created during a historical period in India, possibly as a depiction of a royal scene or a significant cultural narrative. The color palette consists primarily of greens, blues, whites, and tans, with the clothing providing accents of yellow, red, and pink. Overall, this miniature painting conveys both a sense of serenity in the landscape and formality in the attire and postures of the figures.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2024-12-26

This is a Mughal miniature painting depicting a romantic scene. The artwork shows a horseman on a blue horse reaching up towards a group of women standing on a white marble balcony or terrace. The rider wears an ornate dark outfit decorated with gold patterns, while the women are dressed in colorful traditional attire including yellow, orange, pink, and white garments adorned with jewelry. The architectural setting features typical Mughal design elements like decorative railings and arched doorways. The background shows a green landscape with scattered trees and dark storm clouds gathering in the sky. The painting style is characteristic of Mughal art, with fine details, rich colors, and a mix of Persian and Indian artistic influences.

Created by claude-3-haiku-20240307 on 2024-11-27

This image appears to be a traditional Indian painting, depicting a scene from what seems to be a historical or mythological narrative. The painting shows a man on horseback, dressed in ornate clothing, approaching a group of women standing on a balcony or terrace. The women are also wearing elaborate, colorful garments, suggesting they may be of noble or royal status. The background features a lush, pastoral landscape with mountains and a temple-like structure in the distance. The overall style and subject matter suggest this is a work of Indian or South Asian origin, likely from a specific cultural or artistic tradition. The level of detail and the vibrant colors used in the painting make it an aesthetically striking and visually compelling work of art.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-26

This appears to be a traditional Indian miniature painting depicting a romantic scene. A figure on horseback, dressed in an ornate dark outfit with gold details, reaches up towards a group of women standing on a balcony or terrace of what appears to be a palace structure. The horse is painted in a pale blue color. One woman in a yellow dress leans over the balcony rail, while several other women in colorful dresses (orange, pink, and white) stand behind her. The architectural detail shows typical Mughal or Rajasthani style with decorated pillars and arches. The background features a green landscape with scattered trees and dark storm clouds gathering in the sky. The painting style is characteristic of Indian court paintings from around the 17th-18th centuries, with fine detail work and rich colors.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-23

The image depicts a painting of a man on horseback, presenting a gift to a group of women standing on a balcony. The man is dressed in a black outfit with gold accents and wears a turban. He holds a small, ornate box in his right hand, which he extends towards the women.

The women are attired in colorful saris and stand on a white balcony adorned with intricate carvings. They gaze at the man with interest, their faces expressive and engaged. In the background, a lush green field stretches out, dotted with trees and a few buildings in the distance. The sky above is dark and foreboding, suggesting an impending storm.

The overall atmosphere of the painting is one of elegance and refinement, capturing a moment of interaction between the man and the women. The use of vibrant colors and intricate details adds depth and texture to the scene, drawing the viewer's eye to the central figures.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-11-23

The image depicts a scene from an Indian painting, likely from the Mughal or Rajput period, showcasing a man on horseback and a group of women gathered around a fountain.

- Man on Horseback:
  - The man is dressed in a black outfit with gold accents, including a turban and a belt.
  - He is riding a light blue horse with a black mane and tail.
  - The horse is adorned with a saddle and bridle.
- Women Gathering Around the Fountain:
  - There are six women in the scene, each wearing different colored saris.
  - They are standing around a white fountain, which appears to be made of marble.
  - The fountain has a small pool of water at its base.
- Background:
  - The background of the painting features a green landscape with trees and hills.
  - There is a small building or pavilion in the distance, possibly a palace or temple.
  - The sky above is dark and cloudy, suggesting a stormy weather.

Overall, the image presents a serene and peaceful scene, with the man on horseback and the women gathered around the fountain creating a sense of harmony and tranquility. The use of bright colors and intricate details adds to the beauty and elegance of the painting.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-01-04

A painting of a man riding a blue horse and a woman holding a cup in her hand. The man is wearing a hat and a black dress, while the woman is wearing a yellow dress. Behind them, there is a white wall with a staircase and a few women standing on it. They are wearing different colored dresses. In the distance, there are trees and mountains.

Created by amazon.nova-lite-v1:0 on 2025-01-04

The image depicts a traditional Indian painting, likely from the Rajasthani school of art. The scene is set in a lush, green landscape with a serene river and a distant hill under a partly cloudy sky. The central focus is on a group of women standing on a white platform, possibly a veranda or a garden pavilion. They are dressed in vibrant traditional Indian attire, with one woman in a yellow saree and another in an orange saree, both adorned with jewelry. They seem to be engaged in conversation. In front of them, a woman on a blue horse is holding a small object, possibly a cup, and appears to be offering it to one of the women on the platform. The horse is intricately detailed, showcasing its blue color and ornate saddle. The painting is framed with a black border, enhancing its aesthetic appeal.

Google Gemini

Created by gemini-2.0-flash on 2025-04-26

Here is a description of the image:

The painting depicts a scene in a serene, idealized outdoor setting, possibly of Indian origin. A man on a light blue horse is presenting an object to a woman in a yellow sari, standing on a white, raised platform. The man is dressed in a dark, ornately patterned robe and a turban, holding out a golden object toward the woman. The woman in yellow is reaching out to receive the object.

Behind the woman, on the platform, are three other women dressed in traditional Indian attire in colors such as orange, pink, and white. These women are standing near a structure with architectural details. The background of the painting shows a green landscape with distant hills and a cloudy sky. The upper right shows a green tree with what looks like a white building above.

Created by gemini-2.0-flash-lite on 2025-04-26

Here is a description of the image:

This is a detailed miniature painting, likely from the Indian Mughal or Rajput traditions. It depicts a scene with several figures set in a lush, pastoral environment.

Key elements and description:

Central Figures: The focal point is a man on horseback. The horse is a striking pale blue, and the man is elaborately dressed in a dark robe decorated with intricate gold patterns, an embellished belt, and a jeweled headdress. He is reaching toward a woman in a yellow garment who is holding a gold vessel.

Women on a Terrace: Behind the woman, there is a white-walled platform or terrace, where several other women are gathered. They are adorned in colorful clothing (red, pink, black and white), jewelry and are of varying complexions, and some are adorned with head coverings.

Setting: The background presents a vast, verdant landscape with a rolling green field, distant hills, and a dark, dramatic sky, indicating a possible storm.

Architectural Details: The white platform appears to be part of a larger structure or a series of pavilions. There's a glimpse of other architecture, with decorative arches and stairs, in the upper right corner of the painting.

Style and Techniques: The painting is characterized by the meticulous detail and delicate brushstrokes typical of miniature art. The colors are vibrant and the forms are rendered with a certain softness. The overall composition creates a sense of elegance and formality.

Overall Impression:

The scene seems to depict a royal or noble encounter, perhaps a ceremony or a ritual of gift-giving. The painting conveys a sense of wealth and refinement. The landscape provides a beautiful backdrop for the interactions of the figures.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-04-25

The image depicts a traditional Indian miniature painting, likely from the Mughal or Rajput period, given the style and subject matter. The scene is set outdoors, with a lush green landscape in the background, featuring trees and a distant building that appears to be a palace or temple.

In the foreground, a man on horseback is central to the composition. He is dressed in elaborate attire, including a patterned garment and a turban, and is reaching out to a woman standing on a raised platform. The woman, dressed in a yellow sari, is also extending her hand towards the man, suggesting a gesture of greeting or farewell.

Behind the woman, several other women are standing, all dressed in colorful traditional attire, including saris and jewelry. They appear to be observing the interaction between the man and the woman. The platform they are standing on has a white railing and steps leading up to it, indicating it might be an elevated pavilion or balcony.

The overall scene suggests a moment of interaction or ceremony, possibly a departure or arrival, with the rich clothing and setting indicating the subjects are of high social status. The painting is detailed and vibrant, characteristic of Indian miniature art.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-06-24

This image is a traditional Indian painting, likely from the Mughal era, judging by the style and the attire of the figures. It depicts a scene with a man riding a blue horse, offering a flower or a decorative item to a woman dressed in a yellow sari. The woman is standing on a terrace, which is adorned with decorative railings and a fountain surrounded by pots. Behind her, three other women are dressed in colorful saris, adorned with jewelry, and appear to be observing the interaction between the man and the woman on the horse.

The background shows a lush green landscape with hills, trees, and a structure resembling a palace or temple in the distance. The sky is dark, suggesting the scene takes place during the evening or at night. The painting is detailed, with intricate patterns and vibrant colors, typical of Mughal art. The overall atmosphere of the painting is serene and elegant, capturing a moment of interaction between the characters.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-06-24

This image is a traditional Indian miniature painting, likely from the Mughal or Rajput schools of art, depicting a romantic or courtly scene. Here's a detailed description:

Foreground:

- A man is mounted on a blue horse. He is dressed in ornate clothing, including a black robe with gold embroidery and a turban. He is holding a small object, possibly a fruit or a flower, and appears to be offering it to a woman who is standing on a platform.

- The horse is depicted in a detailed manner, with a green saddle and a red and white cloth under the saddle.

Middle Ground:

- The woman receiving the object from the man is wearing a yellow sari and is adorned with jewelry. She is standing on a platform that is part of a larger structure with stairs leading up to it.

- There are three other women standing on the same platform. They are dressed in colorful saris and are also adorned with jewelry. They appear to be engaged in conversation or observing the interaction between the man and the woman in yellow.

Background:

- The background features a lush green landscape with rolling hills and trees. There is a dark, stormy sky above, suggesting an impending rain.

- In the distance, there are a few structures and possibly a bridge or pathway, indicating a rural setting.

Architecture:

- The structure on which the women are standing has a white facade with intricate details, typical of Mughal or Rajput architecture. There is an arched structure with a dome on the right side of the platform.

The overall composition and details suggest a scene of courtship or a romantic encounter, set in a picturesque and idyllic environment. The use of vibrant colors and detailed brushwork is characteristic of traditional Indian miniature paintings.