Machine Generated Data

Tags

Amazon

created on 2023-10-05

Clarifai

created on 2018-05-10

Imagga

created on 2023-10-05

| Tag | Confidence |
| --- | --- |
| child | 28.3 |
| outdoors | 26.1 |
| people | 21.7 |
| beach | 20.7 |
| man | 20.1 |
| happy | 20 |
| adult | 20 |
| male | 19.3 |
| vacation | 18 |
| mother | 16.9 |
| person | 16.8 |
| summer | 16.7 |
| smiling | 15.2 |
| lifestyle | 15.2 |
| outside | 14.5 |
| fun | 14.2 |
| outdoor | 13.8 |
| holiday | 13.6 |
| sand | 13.5 |
| sea | 13.3 |
| leisure | 13.3 |
| cute | 12.9 |
| parent | 12.9 |
| love | 12.6 |
| sitting | 12 |
| happiness | 11.7 |
| family | 11.6 |
| together | 11.4 |
| couple | 11.3 |
| portrait | 11 |
| relaxation | 10.9 |
| childhood | 10.7 |
| one | 10.4 |
| sexy | 10.4 |
| youth | 10.2 |
| healthy | 10.1 |
| playing | 10 |
| girls | 10 |
| joy | 10 |
| face | 9.9 |
| father | 9.9 |
| park | 9.9 |
| kin | 9.8 |
| sibling | 9.7 |
| seller | 9.6 |
| hair | 9.5 |
| women | 9.5 |
| world | 9.4 |
| water | 9.3 |
| relax | 9.3 |
| dad | 9.2 |
| relaxing | 9.1 |
| human | 9 |
| romantic | 8.9 |
| little | 8.8 |
| smile | 8.5 |
| pretty | 8.4 |
| pink | 8.4 |
| fashion | 8.3 |
| kid | 8 |
| daughter | 7.9 |
| day | 7.8 |
| boy | 7.8 |
| attractive | 7.7 |
| casual | 7.6 |
| hand | 7.6 |
| garden | 7.5 |
| kids | 7.5 |
| looking | 7.2 |
| farm | 7.1 |
| mammal | 7 |

Google

created on 2018-05-10

| Tag | Confidence |
| --- | --- |
| photograph | 96 |
| person | 93.9 |
| black and white | 93.1 |
| sitting | 92.2 |
| child | 89.4 |
| monochrome photography | 86.8 |
| photography | 82 |
| snapshot | 81.8 |
| emotion | 80.2 |
| human behavior | 75.4 |
| girl | 74.2 |
| human | 71 |
| monochrome | 69.2 |
| smile | 65.4 |
| stock photography | 55.7 |
| portrait photography | 55.6 |
| shoe | 54.9 |
| family | 50.8 |

Color Analysis

Face analysis

AWS Rekognition

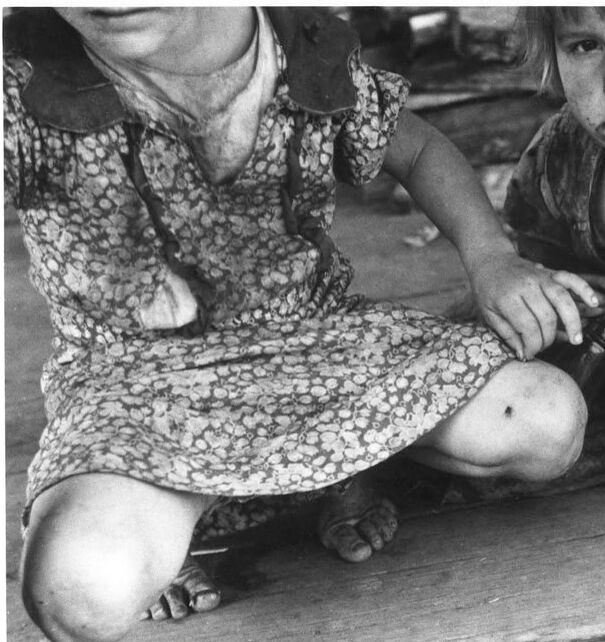

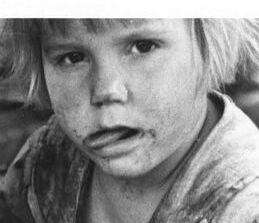

| Attribute | Value |
| --- | --- |
| Age | 2-8 |
| Gender | Male, 99.4% |
| Calm | 89.1% |
| Surprised | 8.2% |
| Fear | 6.1% |
| Sad | 4.1% |
| Confused | 1.6% |
| Angry | 0.4% |
| Disgusted | 0.2% |
| Happy | 0.1% |

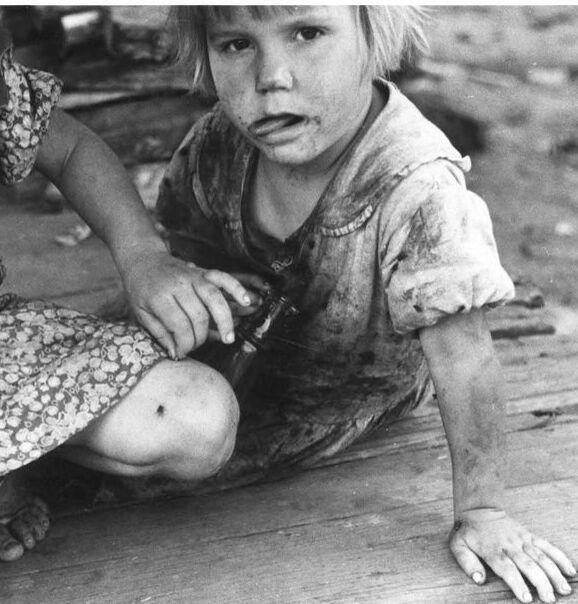

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 27-37 |
| Gender | Male, 92.3% |
| Calm | 35.9% |
| Happy | 23.5% |
| Surprised | 22.9% |
| Angry | 9.7% |
| Fear | 6.4% |
| Sad | 5.4% |
| Disgusted | 2.9% |
| Confused | 2.4% |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 35 |
| Gender | Female |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very likely |
| Blurred | Very unlikely |

Imagga

| Traits | no traits identified |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 64% |
| people portraits | 27.9% |
| pets animals | 3.1% |
| food drinks | 3.1% |

Captions

Microsoft

created by unknown on 2018-05-10

| Caption | Confidence |
| --- | --- |
| a person holding a baby | 38.3% |
| a person holding a baby | 38.2% |
| a person holding a baby | 38.1% |

Clarifai

created by general-english-image-caption-blip on 2025-05-28

| Caption | Confidence |
| --- | --- |
| a photograph of a young girl sitting on a wooden floor | -100% |