Machine Generated Data

Face analysis

AWS Rekognition

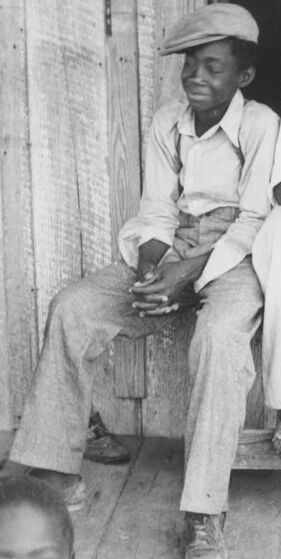

| Attribute | Value |
| --- | --- |
| Age | 4-12 |
| Gender | Male, 59.9% |
| Calm | 65.9% |
| Sad | 65.4% |
| Surprised | 6.4% |
| Fear | 6.3% |
| Confused | 0.7% |
| Angry | 0.5% |
| Disgusted | 0.2% |
| Happy | 0.1% |

Feature analysis

Amazon

| Feature | Confidence |
| --- | --- |
| Adult | 99.4% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| people portraits | 73.6% |
| paintings art | 24.3% |
| streetview architecture | 1% |

Captions

Microsoft

Created by unknown on 2018-05-10

| Caption | Confidence |
| --- | --- |
| a black and white photo of a man | 95.6% |
| an old black and white photo of a man | 95.5% |
| a man sitting on a bench posing for the camera | 91.7% |

Clarifai

Created by general-english-image-caption-blip on 2025-05-03

| Caption | Confidence |
| --- | --- |
| a photograph of a group of people sitting on a porch | -100% |

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-05-24

The image is a black-and-white photograph of a group of people, primarily children and young adults, sitting on the porch of a wooden building. The individuals are dressed in casual attire, with some wearing hats and others barefoot. The atmosphere appears relaxed, with the subjects engaged in conversation or simply sitting together.

In the background, a woman can be seen standing in the doorway, while another child sits on the floor in front of the group. The overall setting suggests a rural or impoverished environment, possibly during the early 20th century. The photograph captures a moment of everyday life, highlighting the simplicity and community of the people depicted.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-05-24

The image is a black-and-white photograph of a group of people sitting on a porch, with a woman and child standing in the doorway behind them.

The group of people are all wearing casual clothing, such as hats, shirts, pants, and shoes. The man in the center is wearing a white hat, a white shirt, and white pants. The man to his left is wearing a light-colored hat, a light-colored shirt, and light-colored pants. The man to his right is wearing a dark-colored hat, a white shirt, and dark-colored pants with white stripes.

In the background, there is a woman standing in the doorway of the house, wearing a long dress with polka dots. She has her hand on her hip and is looking down at the group of people on the porch. A young child is standing next to her, wearing a white dress and holding onto the woman's leg.

The overall atmosphere of the image suggests that it was taken in a rural or small-town setting, possibly during the early 20th century. The clothing and hairstyles of the people in the image are consistent with the fashion of that time period. The image may have been taken by a photographer who was documenting everyday life in a small community.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-05-24

The image is a black-and-white photograph of four men and two children sitting on a bench in front of a wooden house. The men are wearing hats and long-sleeved shirts, while the children are wearing dresses. The men are sitting closely together, with their hands resting on their laps. One of the men is holding a cigarette in his mouth. The children are standing behind the men, looking at something.

Created by amazon.nova-lite-v1:0 on 2025-05-24

The image is a black-and-white photograph of a group of people sitting on a wooden bench in front of a wooden house. Three adult men are sitting on the bench, while a young child is sitting on the floor in front of them. The man on the left is wearing a hat and a scarf around his neck, while the man in the middle is wearing a hat and a necklace. The man on the right is wearing a hat and has his hand on his chin. Behind them is a woman standing in the doorway of the house.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-04-28

Here's a description of the image:

This black and white photograph captures a group of African Americans gathered outside what appears to be a wooden structure, possibly a home. Three men are seated on a wooden bench, with a fourth individual visible partially. Their attire suggests an earlier period.

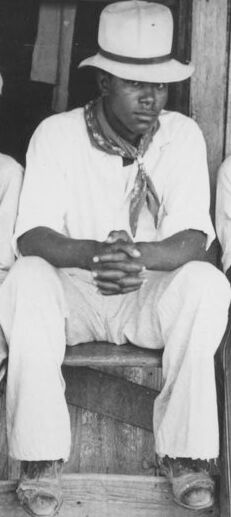

- Center: A man wearing a wide-brimmed hat, white shirt, and light-colored pants sits prominently. He has his hands clasped in his lap.

- Left: A man with a newsboy cap.

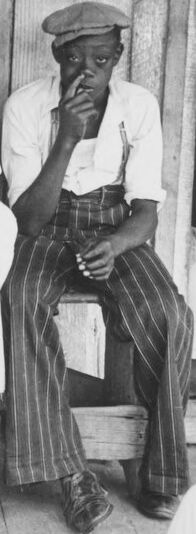

- Right: A man with a newsboy cap is seated next to the man in a wide-brimmed hat, and he is holding his finger to his mouth.

- Additional People: In the background, a woman is visible partially behind a doorway, wearing a dress with a polka-dot pattern, suggesting a family setting. Also, a child is visible looking at the camera.

- Other details: All the men are wearing shoes.

The composition is a window into a bygone era, offering a glimpse into everyday life and the clothing styles of the time. The wooden structure and the simple setting add to the feeling of authenticity and the depiction of a historical moment.

Created by gemini-2.0-flash on 2025-04-28

This is a black and white photo featuring a group of African American people sitting and standing around a wooden structure. In the center of the image, three young men are seated on what appears to be a porch or a bench attached to a building with wooden siding. The man in the middle wears a light-colored hat, a scarf tied around his neck, and his hands are clasped in his lap. The two men on either side of him also wear caps. To the right, a child in a light-colored outfit stands in a doorway, and behind them is a woman wearing a polka dot dress.

In the foreground, there are glimpses of other individuals, including another young person whose expression suggests contemplation. The overall composition conveys a sense of community and portrays a moment of quiet observation.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-05-23

The image is a black-and-white photograph depicting a group of people sitting and standing outside a wooden structure, likely a house or a shack. The setting appears to be rural or semi-rural.

In the foreground, there are three men sitting on a wooden bench or platform. The man in the center is wearing a white hat, white shirt, and light-colored pants. He is looking directly at the camera and appears to be holding something in his hands. To his left is another man wearing a light-colored cap, light shirt, and darker pants. He is also looking at the camera. The third man, on the right, is wearing a darker cap, a white shirt, and striped pants. He is holding a cigarette and looking away from the camera.

In the background, there are several other individuals, including a woman standing in the doorway of the wooden structure and a few children. The children are dressed in simple clothing, and one child in the foreground on the right side of the image is wearing a knitted cap and a light-colored shirt.

The overall atmosphere of the image suggests a moment of rest or gathering, possibly during a break from work or daily activities. The clothing and setting indicate a modest lifestyle, typical of rural communities in the past.