Machine Generated Data

Tags

Amazon

created on 2023-10-06

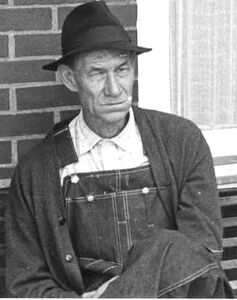

| Tag | Confidence |
|---|---|
| Brick | 99.9 |
| Indoors | 99.8 |
| Restaurant | 99.8 |
| Clothing | 99.8 |
| Coat | 99.7 |
| Adult | 99.7 |
| Male | 99.7 |
| Man | 99.7 |
| Person | 99.7 |
| Sitting | 98.4 |
| Furniture | 98.3 |
| Adult | 97.8 |
| Male | 97.8 |
| Man | 97.8 |
| Person | 97.8 |
| Face | 90.5 |
| Head | 90.5 |
| Plant | 89.6 |
| Person | 87.2 |
| Cap | 83.4 |
| Bench | 78.3 |
| Hat | 76.4 |
| Cafeteria | 72.6 |
| Jacket | 69.7 |
| Potted Plant | 58 |
| Diner | 57.7 |
| Food | 57.7 |
| Photography | 57.5 |
| Portrait | 57.5 |
| Baseball Cap | 56.6 |
| Cafe | 56.5 |
| Shop | 56.3 |

Clarifai

created on 2018-05-10

Imagga

created on 2023-10-06

| Tag | Confidence |
|---|---|
| newspaper | 52.1 |
| product | 41.7 |
| creation | 32.7 |
| man | 26.9 |
| laptop | 25.7 |
| daily | 25.1 |
| office | 24.4 |
| business | 22.5 |
| people | 22.3 |
| person | 22.2 |
| male | 22 |
| businessman | 19.4 |
| adult | 18.9 |
| computer | 18.7 |
| building | 17.7 |
| sitting | 17.2 |
| professional | 16.3 |
| room | 16.3 |
| corporate | 15.5 |
| work | 14.6 |
| shop | 14.2 |
| job | 14.1 |
| old | 13.9 |
| men | 13.7 |
| happy | 13.1 |
| notebook | 12.8 |
| working | 12.4 |
| executive | 12.3 |
| modern | 11.9 |
| technology | 11.9 |
| alone | 11.9 |
| indoor | 11.9 |
| architecture | 11.7 |
| portrait | 11.6 |
| smiling | 11.6 |
| indoors | 11.4 |
| smile | 10.7 |
| success | 10.5 |
| businesspeople | 10.4 |
| looking | 10.4 |
| education | 10.4 |
| mercantile establishment | 9.9 |
| chair | 9.8 |
| blackboard | 9.6 |
| lifestyle | 9.4 |
| senior | 9.4 |
| casual | 9.3 |
| screen | 9.2 |
| black | 9 |
| couple | 8.7 |
| standing | 8.7 |
| day | 8.6 |
| finance | 8.4 |
| horizontal | 8.4 |
| color | 8.3 |
| scholar | 8.3 |
| window | 8.3 |
| outdoors | 8.2 |
| suit | 8.1 |
| home | 8 |
| women | 7.9 |
| table | 7.9 |
| retired | 7.7 |
| career | 7.6 |
| side | 7.5 |
| city | 7.5 |
| one | 7.5 |
| manager | 7.4 |
| park | 7.4 |
| jacket | 7.4 |
| student | 7.4 |
| street | 7.4 |
| cheerful | 7.3 |
| worker | 7.3 |
| confident | 7.3 |
| businesswoman | 7.3 |
| face | 7.1 |
| travel | 7 |

Google

created on 2018-05-10

| Tag | Confidence |
|---|---|
| photograph | 95.2 |
| black and white | 91.2 |
| snapshot | 81.8 |
| photography | 80.7 |
| monochrome photography | 80.5 |
| poster | 74.8 |
| monochrome | 67.8 |
| advertising | 58.6 |
| street | 56.9 |
| window | 54.9 |
| font | 53.7 |
| human behavior | 53.3 |
| vintage clothing | 50.1 |

Color Analysis

Face analysis


AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 45-51 |
| Gender | Female, 97.3% |
| Fear | 90% |
| Surprised | 9.9% |
| Calm | 8.2% |
| Sad | 6.8% |
| Confused | 1.2% |
| Disgusted | 0.9% |
| Angry | 0.9% |
| Happy | 0.4% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 57-65 |
| Gender | Male, 100% |
| Calm | 84% |
| Sad | 6.5% |
| Surprised | 6.4% |
| Fear | 6.1% |
| Angry | 5.5% |
| Confused | 1% |
| Happy | 0.5% |
| Disgusted | 0.1% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 21-29 |
| Gender | Male, 98.8% |
| Calm | 80.2% |
| Sad | 8.3% |
| Happy | 8% |
| Surprised | 6.4% |
| Fear | 6% |
| Confused | 0.7% |
| Disgusted | 0.4% |
| Angry | 0.3% |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 64 |
| Gender | Male |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 37 |
| Gender | Male |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 13 |
| Gender | Male |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
|---|---|
| streetview architecture | 54.6% |
| paintings art | 40.7% |
| interior objects | 2.9% |

Captions

Microsoft

created on 2018-05-10

| Caption | Confidence |
|---|---|
| a man sitting on a bench reading a book | 72.9% |
| a man sitting on a bench in front of a brick building | 72.8% |
| a man sitting on a bench in front of a building | 72.7% |

Text analysis

Amazon

MILLS

DRINK

5

100m

ILL

DRINK

ILL

DRINK