Machine Generated Data

Tags

Amazon

created on 2023-10-05

Clarifai

created on 2018-05-10

Imagga

created on 2023-10-05

| Tag | Confidence |
|---|---|
| uniform | 45.3 |
| military uniform | 35.5 |
| man | 32.9 |
| military | 30.9 |
| gun | 29.1 |
| engineer | 29 |
| weapon | 28.5 |
| clothing | 28.4 |
| rifle | 27.2 |
| soldier | 26.4 |
| war | 25.1 |
| private | 23.3 |
| male | 22 |
| person | 19.3 |
| protection | 17.3 |
| camouflage | 16.8 |
| people | 16.7 |
| sport | 16.5 |
| army | 15.6 |
| danger | 15.5 |
| mask | 14.5 |
| firearm | 14.3 |
| covering | 14.3 |
| outdoors | 14.2 |
| consumer goods | 14.1 |
| activity | 12.5 |
| old | 12.5 |
| leisure | 11.6 |
| adult | 11.1 |
| power | 10.9 |
| recreation | 10.8 |
| statue | 10.3 |
| men | 10.3 |
| guy | 10.2 |
| warfare | 9.9 |
| to | 9.7 |
| training | 9.2 |
| holding | 9.1 |
| helmet | 8.9 |
| battle | 8.8 |
| toxic | 8.8 |
| protective | 8.8 |
| target | 8.8 |
| architecture | 8.6 |
| walking | 8.5 |
| travel | 8.4 |
| outdoor | 8.4 |
| summer | 8.4 |
| city | 8.3 |
| fun | 8.2 |
| industrial | 8.2 |
| dirty | 8.1 |
| history | 8 |
| fisherman | 8 |
| combat | 7.9 |
| black | 7.8 |
| defense | 7.8 |
| nuclear | 7.8 |
| shovel | 7.7 |
| sculpture | 7.6 |
| sports | 7.4 |
| active | 7.2 |
| game | 7.1 |
| child | 7 |

Google

created on 2018-05-10

| Tag | Confidence |
|---|---|
| black and white | 91.7 |
| motor vehicle | 80.7 |
| monochrome photography | 79.3 |
| vehicle | 72.2 |
| monochrome | 62.3 |
| troop | 59.5 |
| human behavior | 58.3 |
| history | 56.7 |
| stock photography | 55.2 |
| soldier | 51.3 |

Color Analysis

Face analysis

Amazon

Microsoft

AWS Rekognition

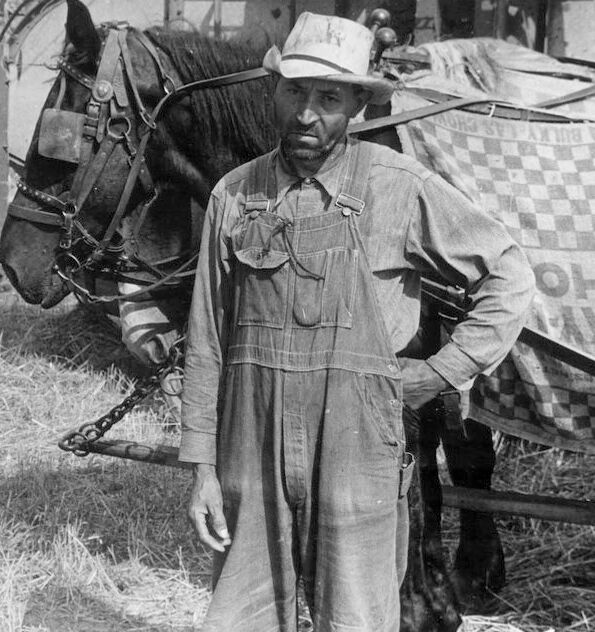

| Attribute | Value |
|---|---|
| Age | 45-51 |
| Gender | Male, 99.9% |
| Calm | 98.6% |
| Surprised | 6.4% |
| Fear | 5.9% |
| Sad | 2.2% |
| Angry | 0.7% |
| Confused | 0.1% |
| Happy | 0.1% |
| Disgusted | 0.1% |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 58 |
| Gender | Male |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very likely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
|---|---|
| paintings art | 99.9% |

Captions

Microsoft

created on 2018-05-10

| Caption | Confidence |
|---|---|
| a man standing next to a horse | 89.2% |
| a man riding a horse | 76.1% |
| a man on a horse | 76% |

Text analysis

Amazon

BULKY

LAS

BULKY LAS eu

он

ANT

eu