Machine Generated Data

Tags

Amazon

created on 2023-10-06

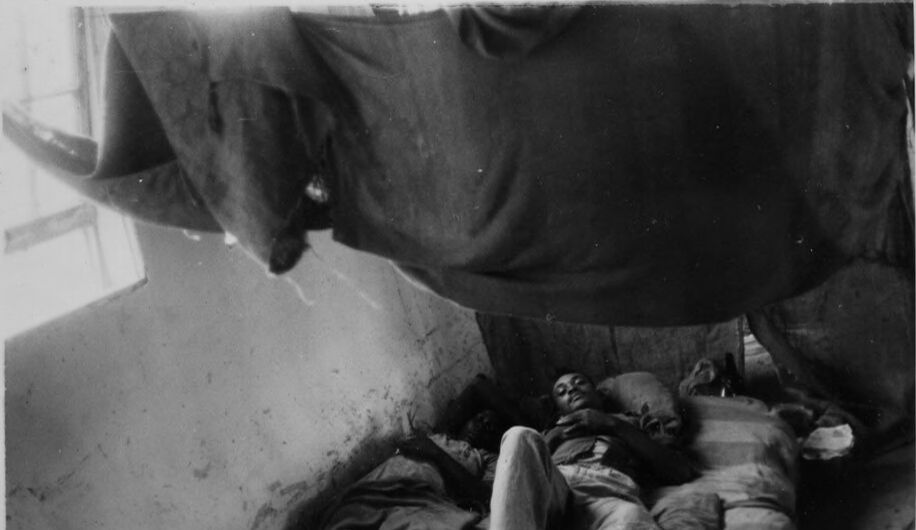

| Tag | Confidence (%) |
|---|---|
| Person | 97.7 |
| Architecture | 96.2 |
| Building | 96.2 |
| Outdoors | 96.2 |
| Shelter | 96.2 |
| Face | 89.4 |
| Head | 89.4 |
| Person | 83.5 |
| Adult | 83.5 |
| Male | 83.5 |
| Man | 83.5 |
| Bed | 79.5 |
| Furniture | 79.5 |
| Laundry | 61 |
| Back | 57.8 |
| Body Part | 57.8 |
| Hospital | 57.3 |
| Sleeping | 56.5 |
| Bedroom | 56.5 |
| Indoors | 56.5 |
| Room | 56.5 |
| Animal | 56.4 |
| Mammal | 56.4 |
| Slum | 55.7 |
| Bathing | 55.3 |
| Taking Cover | 55.2 |

Clarifai

created on 2018-05-10

Imagga

created on 2023-10-06

| Tag | Confidence (%) |
|---|---|
| cadaver | 45.1 |
| water | 24.7 |
| potter's wheel | 23.9 |
| sand | 21.7 |
| sculpture | 21.6 |
| wheel | 20.1 |
| wildlife | 18.7 |
| mammal | 18.7 |
| statue | 18.6 |
| travel | 18.3 |
| sea | 18 |
| sea lion | 16.4 |
| ocean | 15.8 |
| machine | 15.6 |
| rock | 15.6 |
| lion | 14.7 |
| art | 13.2 |
| stone | 12.7 |
| beach | 12.7 |
| tourism | 12.4 |
| park | 11.5 |
| marine | 11.4 |
| rocks | 11.3 |
| life | 10.9 |
| arctic | 10.2 |
| seal | 10.2 |
| hippopotamus | 10.2 |
| ecology | 10.1 |
| city | 10 |
| mechanical device | 9.7 |
| earth | 9.6 |
| animals | 9.3 |
| mountain | 8.9 |
| canyon | 8.7 |
| ancient | 8.6 |
| rest | 8.5 |
| outdoor | 8.4 |
| monument | 8.4 |
| figure | 8.3 |
| island | 8.2 |
| landscape | 8.2 |
| history | 8 |
| river | 8 |
| lion r n | 7.9 |
| scenic | 7.9 |
| polar | 7.9 |
| wild | 7.8 |
| sandstone | 7.8 |
| geology | 7.8 |
| turkey | 7.8 |
| rocky | 7.7 |
| outside | 7.7 |
| winter | 7.7 |
| trip | 7.5 |
| mechanism | 7.5 |
| stock | 7.5 |
| black | 7.4 |
| vacation | 7.4 |
| animal | 7.2 |

Google

created on 2018-05-10

| Tag | Confidence (%) |
|---|---|
| photograph | 95.7 |
| black | 95.4 |
| black and white | 95 |
| monochrome photography | 91.2 |
| snapshot | 81.8 |
| photography | 80.2 |
| monochrome | 76.4 |
| still life photography | 72.7 |
| stock photography | 63.4 |
| jaw | 50.1 |

Microsoft

created on 2018-05-10

| Tag | Confidence (%) |
|---|---|
| old | 44.6 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 23-33 |
| Gender | Male, 99.6% |
| Fear | 46.8% |
| Sad | 26.1% |
| Calm | 24% |
| Surprised | 12.8% |
| Happy | 3.3% |
| Angry | 2% |
| Disgusted | 1.5% |
| Confused | 1.2% |

Feature analysis

Categories

Imagga

| Category | Confidence |
|---|---|
| pets animals | 52.4% |
| nature landscape | 44.7% |
| paintings art | 1.9% |

Captions

Microsoft

created on 2018-05-10

| Caption | Confidence |
|---|---|
| an old photo of a man | 59.3% |