Machine Generated Data

Tags

Amazon

created on 2023-10-06

Clarifai

created on 2018-05-10

Imagga

created on 2023-10-06

| Tag | Confidence |
|---|---|
| car | 34.1 |
| toaster | 31.2 |
| kitchen appliance | 28.2 |
| vehicle | 25.4 |
| home appliance | 23.4 |
| automobile | 19.1 |
| driver | 18.4 |
| appliance | 16.3 |
| drive | 16.1 |
| window | 16 |
| happy | 15.6 |
| auto | 15.3 |
| person | 14.5 |
| man | 14.1 |
| smiling | 13.7 |
| smile | 13.5 |
| driving | 13.5 |
| male | 13.5 |
| transportation | 13.4 |
| old | 13.2 |
| sitting | 12.9 |
| case | 12.6 |
| furniture | 12.3 |
| screen | 12 |
| people | 11.7 |
| windowsill | 11.5 |
| passenger | 11.4 |
| travel | 11.3 |
| joy | 10.8 |
| black | 10.8 |
| adult | 10.3 |
| happiness | 10.2 |
| transport | 10 |
| road | 9.9 |
| portrait | 9.7 |
| outdoors | 9.7 |
| sill | 9.6 |
| looking | 9.6 |
| fun | 9 |
| china cabinet | 8.9 |
| new | 8.9 |
| home | 8.8 |
| cabinet | 8.7 |
| love | 8.7 |
| wood | 8.3 |
| one | 8.2 |
| business | 7.9 |
| furnishing | 7.9 |
| motor | 7.7 |
| pretty | 7.7 |
| protective covering | 7.7 |
| bride | 7.7 |
| device | 7.6 |
| building | 7.6 |
| frame | 7.6 |
| durables | 7.6 |
| sit | 7.6 |
| communication | 7.5 |
| child | 7.5 |
| trip | 7.5 |
| house | 7.5 |
| vintage | 7.4 |
| structural member | 7.4 |
| inside | 7.4 |
| cheerful | 7.3 |
| window screen | 7.3 |
| metal | 7.2 |
| hat | 7.1 |
| worker | 7.1 |
| work | 7.1 |

Google

created on 2018-05-10

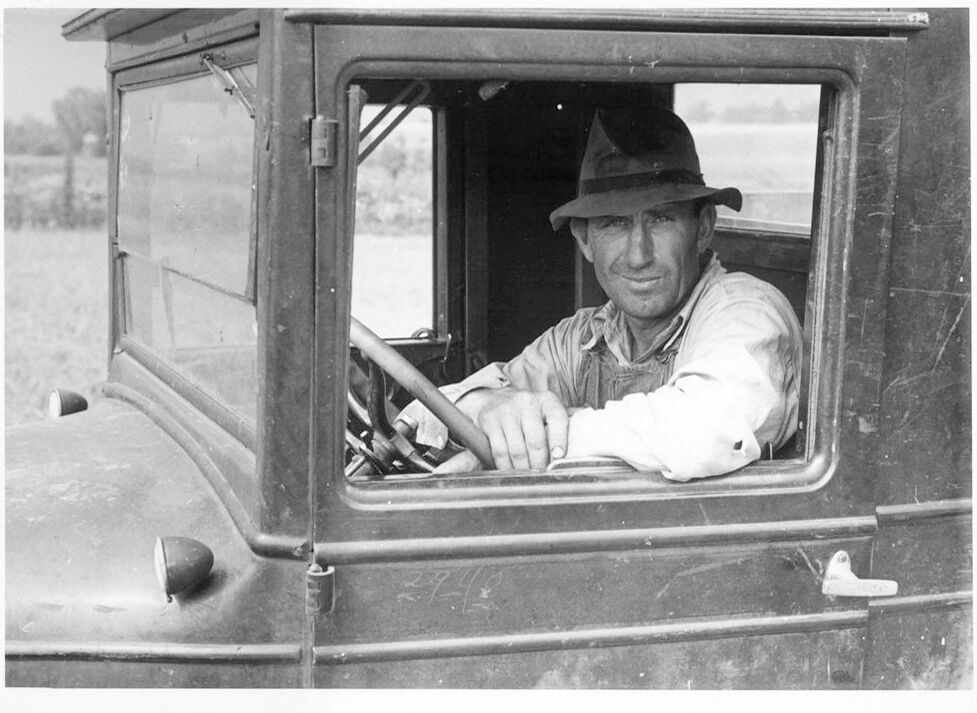

| Tag | Confidence |
|---|---|
| motor vehicle | 96.7 |
| photograph | 95.2 |
| black and white | 89.9 |
| car | 88.4 |
| snapshot | 81.8 |
| monochrome photography | 75.9 |
| window | 72.5 |
| vehicle | 69.4 |
| monochrome | 56.3 |
| automotive exterior | 54.1 |
| vintage clothing | 50.8 |

Color Analysis

Face analysis


AWS Rekognition

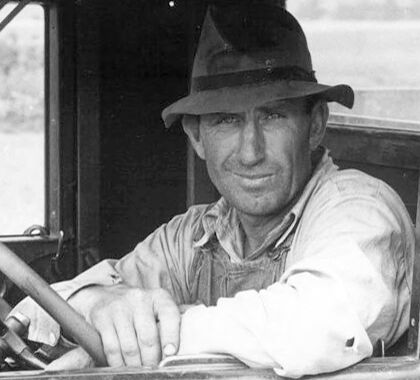

| Attribute | Value |
|---|---|
| Age | 45-51 |
| Gender | Male, 100% |
| Calm | 97.5% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Sad | 2.3% |
| Happy | 1% |
| Confused | 0.3% |
| Angry | 0.3% |
| Disgusted | 0.3% |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 43 |
| Gender | Male |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
|---|---|
| paintings art | 67.8% |
| people portraits | 21.5% |
| interior objects | 4.7% |
| streetview architecture | 3.8% |
| pets animals | 1% |

Captions

Microsoft

created on 2018-05-10

| Caption | Confidence |
|---|---|
| a black and white photo of a bus window | 84.9% |
| a vintage photo of a bus window | 84.8% |
| an old black and white photo of a bus window | 81.5% |