Machine Generated Data

Tags

Amazon

created on 2023-10-06

| Tag | Confidence |
| --- | --- |
| Adult | 97.5 |
| Bride | 97.5 |
| Female | 97.5 |
| Person | 97.5 |
| Wedding | 97.5 |
| Woman | 97.5 |
| Adult | 97.1 |
| Person | 97.1 |
| Male | 97.1 |
| Man | 97.1 |
| Person | 96.2 |
| Person | 94 |
| Person | 88.4 |
| Person | 83.4 |
| Architecture | 78.1 |
| Building | 78.1 |
| Face | 77.9 |
| Head | 77.9 |
| Person | 73.8 |
| Person | 70.9 |
| Shop | 68.5 |
| Text | 67.1 |
| Transportation | 62.3 |
| Vehicle | 62.3 |
| Symbol | 60.5 |
| Art | 56.8 |
| Painting | 56.8 |
| Terminal | 56.8 |
| Kiosk | 56.8 |
| Indoors | 56.6 |
| License Plate | 55.9 |
| Number | 55.6 |

Clarifai

created on 2018-05-10

Imagga

created on 2023-10-06

| Tag | Confidence |
| --- | --- |
| gas pump | 59.8 |
| pump | 52.4 |
| mechanical device | 35.3 |
| device | 29.9 |
| mechanism | 25.8 |
| equipment | 24.3 |
| door | 22 |
| old | 20.9 |
| architecture | 18 |
| machine | 17.4 |
| electronic equipment | 15.7 |
| building | 15.4 |
| technology | 14.8 |
| business | 14 |
| retro | 13.9 |
| metal | 12.9 |
| box | 12.7 |
| office | 12.4 |
| bank | 11.8 |
| slot machine | 11.7 |
| vintage | 11.6 |
| safe | 11.2 |
| window | 11.2 |
| wall | 11.1 |
| money | 11.1 |
| security | 11 |
| jukebox | 10.5 |
| station | 10.4 |
| industry | 10.2 |
| house | 10 |
| wealth | 9.9 |
| button | 9.7 |
| black | 9.6 |
| urban | 9.6 |
| design | 9.6 |
| storage | 9.5 |
| display | 9.4 |
| shop | 9.3 |
| town | 9.3 |
| network | 9.3 |
| communication | 9.2 |
| slot | 9.2 |
| history | 8.9 |
| digital | 8.9 |
| finance | 8.4 |
| record player | 8.4 |
| city | 8.3 |
| exterior | 8.3 |
| sign | 8.3 |
| aged | 8.1 |
| facade | 8 |
| receiver | 8 |
| tape player | 8 |
| dial | 7.9 |
| switch | 7.8 |
| system | 7.6 |
| brick | 7.5 |
| electronic | 7.5 |
| number | 7.5 |
| room | 7.4 |
| style | 7.4 |
| closeup | 7.4 |
| safety | 7.4 |
| banking | 7.3 |
| light | 7.3 |
| cash | 7.3 |
| computer | 7.2 |
| home | 7.2 |
| server | 7.1 |
| wooden | 7 |

Google

created on 2018-05-10

| Tag | Confidence |
| --- | --- |
| black and white | 91.6 |
| monochrome photography | 78.2 |
| monochrome | 70.1 |
| electronic device | 51.7 |

Microsoft

created on 2018-05-10

| Tag | Confidence |
| --- | --- |
| outdoor | 87.8 |
| black | 74.7 |
| white | 60.9 |
| store | 39.4 |
| entertainment center | 11.8 |

Color Analysis

Face analysis

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 21-29 |
| Gender | Male, 77.7% |
| Surprised | 97.4% |
| Fear | 16.2% |
| Sad | 3.9% |
| Confused | 2.8% |
| Angry | 0.9% |
| Happy | 0.8% |
| Disgusted | 0.5% |
| Calm | 0.3% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 20-28 |
| Gender | Female, 88.8% |
| Fear | 80.9% |
| Surprised | 24.7% |
| Angry | 6% |
| Sad | 4.6% |
| Happy | 2.8% |
| Calm | 2% |
| Confused | 1.4% |
| Disgusted | 0.9% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 6-12 |
| Gender | Female, 99.9% |
| Surprised | 41.5% |
| Confused | 38.7% |
| Sad | 12.4% |
| Fear | 8.7% |
| Happy | 7.4% |
| Calm | 3.7% |
| Angry | 1.7% |
| Disgusted | 1.5% |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 5 |
| Gender | Female |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 18 |
| Gender | Female |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| interior objects | 99.3% |

Captions

Microsoft

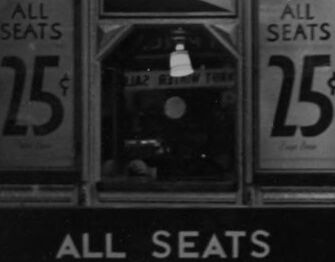

created on 2018-05-10

| Caption | Confidence |
| --- | --- |
| a vintage photo of a person | 75% |
| a vintage photo of a store | 70.9% |
| a black and white photo of a person | 68.1% |

Text analysis

Amazon

25

SEATS

ALL

ALL SEATS

WILL

14

25 C

Our 14

Our

OIL

C

OIL PATRICK

Q

Class

PATRICK

TIAZ

Zone

TIAZ RAILY TOST

RAILY TOST

ALL

SEATS

ALL

SEATS

ALL SEATS

ALL

SEATS