Machine-Generated Data

Face analysis

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 23-38 |
| Gender | Male, 54.7% |
| Surprised | 45.2% |
| Disgusted | 45.1% |
| Calm | 54% |
| Confused | 45.2% |
| Angry | 45.2% |
| Happy | 45.2% |
| Sad | 45.2% |

Feature analysis

AWS Rekognition

| Feature | Confidence |
|---|---|
| Person | 99.6% |

Categories

Imagga

Created on 2018-03-23

| Category | Confidence |
|---|---|
| paintings art | 42.6% |
| interior objects | 32.9% |
| food drinks | 8.6% |
| people portraits | 8.2% |
| streetview architecture | 4.8% |
| events parties | 1.9% |
| text visuals | 0.6% |
| cars vehicles | 0.2% |
| pets animals | 0.1% |
| nature landscape | 0.1% |

Captions

Microsoft

Created by unknown on 2018-03-23

| Caption | Confidence |
|---|---|
| a group of people holding a sign | 81.8% |
| a group of people standing next to a man holding a sign | 75.4% |
| a group of people standing in the street | 75.3% |

Clarifai

No captions written

Salesforce

Created by general-english-image-caption-blip on 2025-05-11

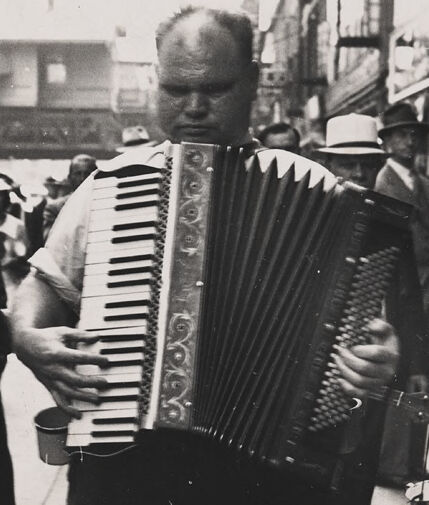

a photograph of a man playing an accordion in a city

Created by general-english-image-caption-blip-2 on 2025-06-27

a man playing an accordion on a street corner

OpenAI GPT

Created by gpt-4 on 2024-01-26

This is a black and white photograph depicting a scene on a busy street, with several people going about their activities. One individual stands out in the foreground, holding and possibly playing an accordion. In the background, there are signs for a business named "Liggett's Drugs" and other signs that appear to advertise candy and sundries. The attire of the individuals and the style of the signs suggest that this image might be from an earlier time period. The overall busy nature of the scene and the presence of the accordion player suggest a lively urban atmosphere.

Anthropic Claude

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-24

This is a black and white historical photograph showing street musicians playing accordions on a city sidewalk. The image appears to be from the early-to-mid 20th century, as evidenced by the clothing styles and the "Liggetts Drugs" store sign visible in the background. Several people are gathered on the street, with the accordion players being the focal point. The scene has an urban, possibly Depression-era feel to it, with the musicians likely performing for donations from passersby. The setting appears to be in an American city, with typical urban storefronts and architecture of that period visible.

Created by claude-3-haiku-48k-20240307 on 2024-03-29

The image appears to be a black and white photograph depicting a street scene in a city. The focal point is a man playing an accordion on the sidewalk, surrounded by a crowd of people. The man is wearing a hat and is concentrating on his instrument as he performs. In the background, there is a sign for a drugstore called "Liggett Drugs" and other buildings and signage visible, suggesting this is a bustling urban setting. The mood of the image conveys a sense of energy and activity on the city street.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-26

The image depicts a man playing an accordion on a bustling city street, surrounded by a crowd of people. The man is dressed in a light-colored shirt and dark pants, with his instrument held in front of him as he plays. He has short hair and appears to be middle-aged.

In the background, several men are standing around, some wearing suits and hats, while others are dressed more casually. A sign reading "Liggett's DRUGS" is visible above the man's head, indicating that the scene is set in front of a pharmacy or drugstore.

The overall atmosphere of the image suggests a lively and vibrant urban setting, with the sound of music filling the air and people going about their daily business. The presence of the accordion player adds a touch of culture and entertainment to the scene, creating a sense of community and connection among the people gathered around him.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-12-01

The image is a black-and-white photograph of a man playing an accordion in the middle of a street, surrounded by a crowd of people. The man is wearing a light-colored shirt and dark pants, and he is holding the accordion in front of him with both hands. He appears to be playing it with his fingers.

In the background, there are several buildings, including a sign that reads "Liggett's DRUGS" in white letters on a dark background. The sky above is overcast. The overall atmosphere of the image suggests a lively and festive scene, possibly from a street festival or celebration.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-09

The image is a black-and-white photograph depicting a scene on a street corner. In the center, a man is playing an accordion, holding it with both hands while standing on the sidewalk. He has a cup in his left hand, possibly for collecting tips. Around him, several people are gathered, some standing and others walking by. The background shows buildings with signs, one of which reads "Liggett's Drugs" and another with "CANDY" on it. The people are dressed in suits and hats, suggesting the scene might be from an earlier era.

Created by amazon.nova-pro-v1:0 on 2025-06-09

The black-and-white photo depicts a man playing an accordion on the street. He is standing on the sidewalk, facing a crowd of people. He is wearing a white shirt and black pants. The accordion has a strap that goes around his neck, and it is attached to a guitar. Some of the people in the crowd are wearing hats. Behind them, there are buildings and a sign that reads "Liggett's Drugs."

Google Gemini

Created by gemini-2.0-flash on 2025-06-04

Here is a description of the image:

The image is a vintage black and white photograph featuring a street musician playing an accordion in what appears to be a busy urban environment.

The main focus is the accordion player, who stands prominently in the foreground. The musician has a stocky build and wears a light-colored, short-sleeved shirt. Strapped to the accordion is a small can or container, presumably for collecting money.

Around the musician, there are several people, mostly men, dressed in suits and hats, giving the impression of a time period likely in the mid-20th century. Some individuals seem to be watching or listening to the musician.

In the background, there are buildings and storefronts. A sign that says "Liggett's DRUGS" is prominently visible, and beneath it, there is another sign that says "CANDY," suggesting a commercial area. The overall scene is reminiscent of a street performance or busking in a bustling city environment.

The photograph captures a slice of life from an earlier era, depicting the presence of street musicians and the style of clothing and architecture of that time.

Created by gemini-2.0-flash-lite on 2025-06-04

Here's a description of the image:

The photograph captures a street scene from what appears to be the early to mid-20th century. The central figure is a man playing an accordion. He is the focal point, positioned in the middle ground with a crowd of people surrounding him. The background reveals a bustling urban environment with shops and signs.

Here are more details:

- The Musician: The accordion player is a heavier-set man, with a mostly bald head. He is wearing a light-colored short-sleeved shirt. A small bucket is attached to the accordion, presumably to collect money. His face is focused as he plays.

- The Crowd: Several men are visible, and they seem to be gathered around, perhaps listening or watching the musician. They are dressed in suits and hats typical of the era.

- The Setting: The image is shot on a street, likely a commercial district, with signs for "Liggett's Drugs" and "Candy". Buildings with various architectural styles line the street, suggesting an urban setting.

- Style: The image is a black and white photograph, adding to the vintage feel. The tones of the image suggest it might be a vintage snapshot of a moment in time.

- Overall Impression: The photograph conveys a sense of a lively street scene, captured in a casual and candid style. It hints at the musical traditions of the past and the daily life of the era.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-05-31

The image is a black-and-white photograph depicting a street scene from what appears to be the mid-20th century. The focal point is a man playing an accordion. He is standing in the foreground, facing slightly to the right, and is dressed in a light-colored shirt. The accordion is prominently displayed, with its keys and buttons clearly visible.

Surrounding the musician are several people, mostly men, who appear to be either watching him play or engaging in conversation. They are dressed in attire typical of the period, including suits, hats, and overcoats. The background features a busy urban street with buildings and storefronts, one of which has a sign reading "Liggett's Drugs."

The atmosphere suggests a bustling, lively environment, possibly a marketplace or a busy city street. The expressions and body language of the people indicate curiosity and engagement, contributing to the overall sense of community and activity in the scene.

Qwen

No captions written