Machine Generated Data

Face analysis

AWS Rekognition

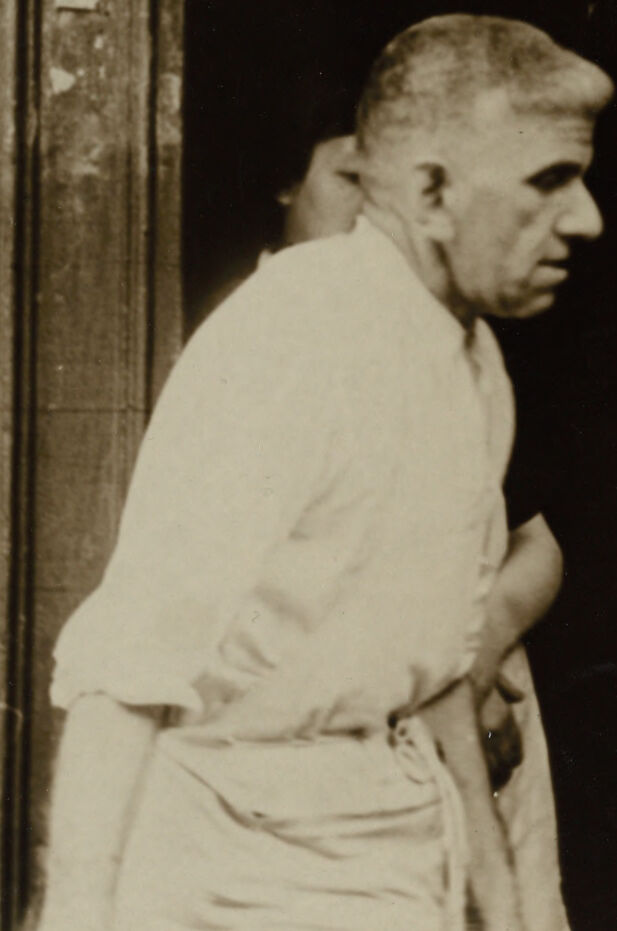

| Attribute | Value |
| --- | --- |
| Age | 35-52 |
| Gender | Male, 95.8% |
| Surprised | 4.5% |
| Calm | 59.6% |
| Confused | 9.6% |
| Happy | 4.3% |
| Sad | 11.3% |
| Disgusted | 5.8% |
| Angry | 5% |

Feature analysis

Amazon

| Feature | Confidence |
| --- | --- |
| Tie | 99.4% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| people portraits | 77.4% |
| paintings art | 20.2% |
| events parties | 1.4% |
| pets animals | 0.6% |
| food drinks | 0.2% |

Captions

Microsoft

Created on 2018-02-09

| Caption | Confidence |
| --- | --- |
| a man and a woman standing in front of a building | 92.8% |
| a man and a woman standing in front of a window | 88.3% |
| a person standing in front of a group of people posing for a photo | 88.2% |

OpenAI GPT

Created by gpt-4o-2024-05-13 on 2024-12-29

The image shows two individuals standing at the entrance of a building. One person is wearing a light-colored robe or coat, while the other person is dressed in a suit with a tie and a fedora hat. The doorway and surrounding structure have an old-fashioned appearance, suggesting that the photograph may have been taken in an earlier period. There is minimal detail about the interior of the building visible in the image.

Created by gpt-4 on 2024-11-28

The image appears to be a black and white photograph, seemingly from an earlier time given the clothing style and quality of the image. It depicts two people in front of what appears to be a shop or a business entrance. The person on the right is wearing a suit with a tie and a hat, while the other person is wearing a lighter colored outfit which might be an apron, suggesting they could be a worker or proprietor of the establishment. The entrance has a glass door with the number 13 visible and a transom window above. There are various items on the right that could be products or part of the window display. Due to the vintage nature of the photograph, it may have historical significance or be from a particular event or time period.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2024-12-26

This appears to be a vintage black and white photograph, likely from the early to mid-20th century. The image shows two men standing in what appears to be a doorway or entrance. One man is dressed in light-colored, casual attire, possibly work clothes, while the other is wearing more formal business attire including a suit, tie, and fedora hat. The setting appears urban, with what looks like a commercial or residential building entrance. The photograph has the grainy, contrasty quality typical of period news or documentary photography.

Created by claude-3-haiku-20240307 on 2024-11-27

The image shows two men standing outside a building. One man is wearing a white coat and the other is wearing a suit and hat. They appear to be in conversation or interaction with each other. The background includes some signs and structures that suggest an urban setting. The image has a grainy, vintage quality typical of black and white photography from an earlier time period.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-23

The image is a black-and-white photograph of two men standing in front of a doorway. The man on the left has short hair and is wearing a white shirt with rolled-up sleeves, while the man on the right is dressed in a dark suit, white shirt, and dark tie, and is also wearing a fedora hat. The background of the image appears to be a doorway or entrance to a building, with a curtain or awning above it. The overall atmosphere of the image suggests that it was taken in the early 20th century, possibly during the 1920s or 1930s.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-11-25

The image is a black-and-white photograph of two men standing in the doorway of a building. The man on the left has short hair and wears a white shirt, while the man on the right has a hat and a suit jacket, with his hands clasped in front of him. The background of the image shows the interior of the building, with a dark room beyond the doorway. The overall atmosphere of the image suggests that it was taken in the early 20th century, possibly during the 1920s or 1930s, based on the clothing and hairstyles of the men depicted.