Machine Generated Data

Tags

Color Analysis

Face analysis

Amazon

Microsoft

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 29-45 |
| Gender | Male, 93.2% |
| Confused | 0.8% |
| Happy | 4.5% |
| Sad | 2.1% |
| Angry | 1.2% |
| Disgusted | 1.3% |
| Surprised | 1.4% |
| Calm | 88.9% |

Feature analysis

Amazon

| Feature | Confidence |
| --- | --- |
| Person | 99.5% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| streetview architecture | 52.9% |
| interior objects | 31.2% |
| paintings art | 7.8% |
| cars vehicles | 1.9% |
| food drinks | 1.6% |
| events parties | 1.4% |
| people portraits | 1.3% |
| nature landscape | 0.7% |
| pets animals | 0.5% |
| text visuals | 0.3% |
| beaches seaside | 0.2% |
| macro flowers | 0.1% |

Captions

Microsoft

Created on 2018-03-23

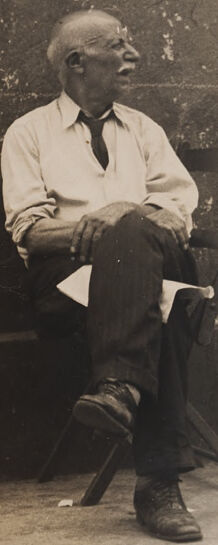

| Caption | Confidence |
| --- | --- |
| a vintage photo of a man | 91.9% |
| a black and white photo of a man | 89% |
| an old black and white photo of a man | 88.9% |

Anthropic Claude

Created by claude-3-haiku-20240307 on 2024-12-29

The image appears to show two elderly men sitting on chairs in what seems to be an outdoor setting. One man is wearing a hat and appears to be holding a newspaper or other documents, while the other man is sitting with his hands folded. The background is a weathered, textured wall or structure. The overall scene has an air of solitude and contemplation.

Created by claude-3-opus-20240229 on 2024-12-29

The black and white photograph shows two older men sitting on chairs outdoors against a worn, weathered wall. One man is sitting forward, arms crossed, wearing a hat, sweater and pants. The other man sits upright with his hands on his legs, wearing a light-colored button-up shirt and dark pants. In front of them is what appears to be a handwritten sign or piece of paper. The ground looks to be dirt or gravel. Overall, the vintage photo captures a candid, everyday moment of the two gentlemen taking a break or pause outside.

Created by claude-3-5-sonnet-20241022 on 2024-12-29

This is a vintage black and white photograph showing two men sitting on opposite ends of what appears to be a bench or seats against a weathered wall. The man on the left wears a fedora hat and what looks like a sweater or jacket, while the man on the right is in shirt sleeves and a tie. Between them is an empty wooden chair and what appears to be some papers or a newspaper. The setting appears informal, possibly outdoors, and the wall behind them shows signs of age with peeling or damaged surfaces. The photograph has the characteristic style and quality of early to mid-20th century photography.