Machine Generated Data

Face analysis

Amazon

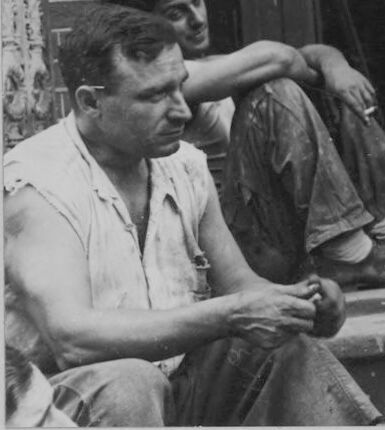

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 50-58 |
| Gender | Male, 100% |
| Calm | 92.7% |
| Surprised | 6.4% |
| Fear | 6% |
| Angry | 4.1% |
| Sad | 2.4% |
| Confused | 1.2% |
| Disgusted | 0.5% |
| Happy | 0.2% |

Feature analysis

Amazon

| Feature | Confidence |
| --- | --- |
| Adult | 99.6% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| people portraits | 46.6% |
| interior objects | 18.7% |
| paintings art | 9.9% |
| events parties | 8.7% |
| food drinks | 6.6% |
| pets animals | 5.6% |
| text visuals | 2.8% |

Captions

Microsoft

Created by unknown on 2018-05-10

| Caption | Confidence |
| --- | --- |
| a man sitting on a bench next to a window | 68.2% |
| a man sitting on a bench | 68.1% |
| a man sitting on a bench in front of a window | 68% |

Clarifai

Created by general-english-image-caption-blip on 2025-05-15

| Caption | Confidence |
| --- | --- |
| a photograph of a man in a tank top sitting on a step up to a staircase | -100% |

Google Gemini

Created by gemini-2.0-flash on 2025-05-21

Here is a description of the image:

The black and white photograph shows three men sitting on the front steps of a building, likely taking a break. The building appears to be made of brick. The man on the right is heavyset and wears a white tank top and paint-splattered overalls, and is holding a cigarette. He looks directly at the camera with a serious expression. To his left and slightly behind him, another man leans against the doorframe with his arms crossed, also holding a cigarette. The third man, on the left, sits with his hands clasped and looks down, perhaps contemplating something. He wears a button-down shirt with the sleeves rolled up and paint-splattered pants. Between the first two men are three dark glasses or cups on the step. The image has a candid, everyday feel, suggesting a slice-of-life moment captured in time.

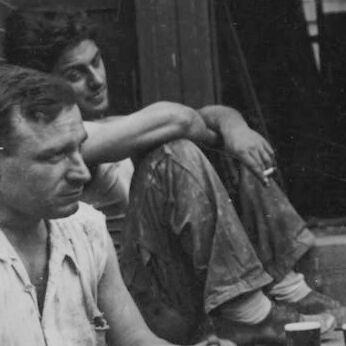

Created by gemini-2.0-flash-lite on 2025-05-21

Here's a description of the image:

Overall Impression: This is a vintage black and white photograph, likely from the mid-20th century, depicting three men taking a break on the steps of a building. The scene suggests a moment of rest during a workday.

The People:

- Main Subjects: Three men are the focal point. They appear to be working class individuals.

- Attire: They are all dressed in work clothes – likely jeans or overalls and sleeveless shirts (tank tops or similar). Their clothes are worn and stained, indicating they are in the middle of a physical labor task.

- Posture and Expression: The men have relaxed body language: they are slumped in their seats and smoking cigarettes, suggesting they are at ease and taking a break. Two of them are looking in different directions, while the one on the right is gazing directly at the camera.

Setting:

- Location: They are seated on the steps of a building. The backdrop suggests a building front with a brick façade.

- Details: There are two drinks (or cups) on the steps.

Mood and Atmosphere:

- Informal and Authentic: The photograph gives a candid and authentic feel, as if capturing a slice of everyday life.

- Work and Leisure: The photograph captures a moment of work interrupted by a moment of rest, allowing for a look into their routine.

- Nostalgic: The monochrome tones and classic composition contribute to a sense of nostalgia.

In conclusion, it's a simple yet evocative photograph of three men enjoying a break from their work. It provides a glimpse into a bygone era and captures the casual moments of life.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-02

The image is a black-and-white photograph depicting three men sitting on the steps of what appears to be a brick building. The men seem to be taking a break, possibly from manual labor, as they are dressed in work clothes and appear to be covered in dirt or paint.

- The man on the left is seated on the steps, looking downward with a somewhat pensive expression. He is wearing a light-colored shirt and dark pants.

- The man in the center is sitting on the steps with his arms resting on his knees. He is looking directly at the camera with a neutral expression and is wearing a dark shirt and pants.

- The man on the right is seated on the steps, looking to his left with a somewhat serious or contemplative expression. He is wearing a white tank top and dark pants, and his arms and face show signs of dirt or paint.

The setting appears to be an urban environment, possibly a residential area, given the brick building and the steps. The men's attire and the visible dirt or paint suggest they might be construction workers or painters. The overall mood of the photograph is one of rest and contemplation amidst a hard day's work.