Machine Generated Data

Tags

Color Analysis

Face analysis

Amazon

Microsoft

Imagga

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 26-43 |
| Gender | Male, 99.4% |
| Disgusted | 0.8% |
| Confused | 3.4% |
| Sad | 6% |
| Surprised | 3.9% |
| Calm | 48.1% |
| Angry | 1.8% |
| Happy | 36% |

Feature analysis

Amazon

| Feature | Confidence |
| --- | --- |
| Person | 99.6% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 62.7% |
| text visuals | 32.4% |
| interior objects | 2.9% |
| streetview architecture | 0.7% |
| food drinks | 0.7% |
| people portraits | 0.2% |
| events parties | 0.1% |

Captions

Microsoft

Created by unknown on 2018-03-23

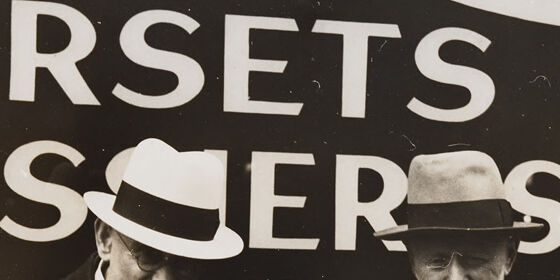

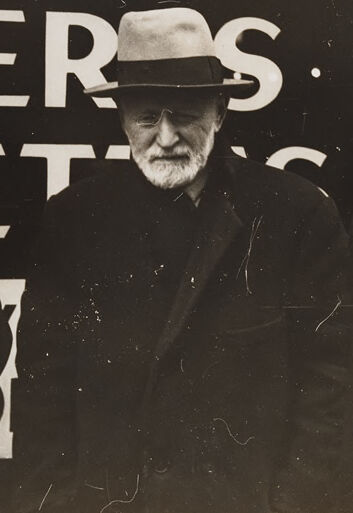

| Caption | Confidence |
| --- | --- |
| Robert Baden-Powell et al. posing for a photo | 87.3% |
| Robert Baden-Powell et al. posing for the camera | 87.2% |
| a vintage photo of Robert Baden-Powell | 87.1% |

Clarifai

Created by general-english-image-caption-blip on 2025-05-11

| Caption | Confidence |
| --- | --- |
| a photograph of a group of men standing next to each other | -100% |

Anthropic Claude

Created by claude-3-haiku-20240307 on 2024-12-29

The image depicts three elderly men standing in front of a sign that reads "MADE ORSETS" and "ASCERS ETS". The sign appears to be advertising some kind of product or service. The three men have distinctive appearances, with one wearing a hat and holding a pipe, another with a long beard, and the third seeming to be observing the scene. The image has a vintage, black and white aesthetic, suggesting it was taken some time in the past.

Created by claude-3-opus-20240229 on 2024-12-29

The black and white photograph depicts three older gentlemen standing in front of a sign that says "MADE CORSETS GARTERS". The men are dressed in suits and hats typical of the early 20th century. One man is smoking a pipe while the other two have long beards, giving them a distinguished appearance. The sign behind them suggests they may be corset and garter makers or sellers. The faded, scratched quality of the image indicates it is an antique photograph capturing a slice of life and fashion from a bygone era.

Created by claude-3-5-sonnet-20241022 on 2024-12-29

This is a black and white vintage photograph showing three elderly men. The image appears to be taken in front of a storefront or advertisement sign that partially shows the word "MADE" and "ORSETS" (likely "CORSETS"). The men are all wearing dark coats and hats in what appears to be early 20th century style. The man on the left is smoking a pipe, while the man on the right has a notable long white beard. The photograph has the grainy, slightly faded quality typical of early photography. The setting and clothing styles suggest this image was likely taken in an urban area, perhaps in the early 1900s.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-05-23

This image is a black-and-white photograph of three men standing in front of a sign. The man on the left wears a dark suit, white shirt, and striped tie, with a white fedora hat. He holds a cigar in his right hand. The middle man also wears a dark suit and a light-colored fedora hat. The man on the right has a long white beard and wears a dark suit and dark hat.

The sign behind them is partially visible, with the words "MADE" and "RSETS" legible. The overall atmosphere of the image suggests that it was taken in the early 20th century, possibly in the 1920s or 1930s.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-05-23

The image is a black-and-white photograph of three men standing in front of a sign. The man on the left is wearing a suit and hat, holding a cigar in his right hand. The man in the middle is wearing a dark coat and hat, with a beard and mustache. The man on the right is also wearing a dark coat and hat, with a long white beard.

The background of the image features a sign with large white letters that read "RSETS" and "MADE," but the rest of the text is illegible. The overall atmosphere of the image suggests a formal or professional setting, possibly from the early 20th century.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-05-23

A black-and-white photo shows three men standing in front of a signboard with text. The men are wearing hats and coats, and the man on the left is holding something in his hand. The man on the right has a beard and mustache. The man in the middle is wearing glasses. The photo has a white border, and the signboard has some words on it.

Created by amazon.nova-lite-v1:0 on 2025-05-23

Three men are standing together in a black-and-white photograph. They are dressed in formal attire, with hats and coats. The man on the left is holding a cigar in his mouth and has a cigarette in his hand. The man in the middle is wearing glasses and has a mustache. The man on the right has a beard and is looking directly at the camera. Behind them is a large sign with the word "Made" and "Dorsets" written on it.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-13

Here's a description of the image:

The photograph is a vintage black and white shot featuring three men standing in front of a storefront or business sign.

- Men: The men appear to be of advanced age. The man on the left is wearing a suit, a white hat with a black band, and glasses. He's holding something in his hand and appears to be eating. The middle man is wearing a coat and a fedora style hat. The man on the right has a long, white beard, a dark hat, and a dark coat.

- Sign: Behind the men is a sign with large white letters against a dark background. The word "MADE" is at the top and below are the words "CORSETS", "MASSER", "FETTIR", with only parts of the lettering.

- Overall Impression: The picture has a timeless quality, likely taken in the early to mid-20th century based on the clothing and style. The image feels like a candid moment, capturing a slice of everyday life.

Created by gemini-2.0-flash on 2025-05-13

Here is a description of the image:

This black and white photograph features three men in hats standing in front of a sign that says "CORSETS MADE." The man on the left is wearing a white fedora with a dark band, a dark double-breasted suit, and a tie. He is holding something in his hand and appears to be eating. The man in the center is wearing a light-colored fedora with a dark band and a dark coat. He has a white beard and glasses. The man on the right is wearing a dark fedora and a dark coat. He has a long white beard that covers his chest. The sign behind them is in large, bold letters. There is another sign, or perhaps a storefront, visible to the right of the men. The photograph has a vintage look, and there are some blemishes and imperfections on the surface of the print.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-05-24

The image is a black-and-white photograph featuring three elderly men standing together. They are all dressed in dark overcoats and hats, which suggests a formal or traditional style of dress typical of an earlier era.

- The man on the left is wearing a light-colored hat and appears to be smoking a cigarette. He has a mustache and is looking slightly to his left.

- The man in the center is wearing a dark hat and has a beard. He is looking directly at the camera.

- The man on the right also has a beard and is wearing a dark hat. He is looking slightly to his right.

In the background, there is a large sign with text that reads "HAND MADE CORSETS CORSETS CORSETS." The sign is partially visible and appears to be advertising handmade corsets. The men are positioned in front of this sign, which provides context to the setting, possibly a street or a marketplace. The overall atmosphere of the image suggests it might be from the early to mid-20th century.