Machine Generated Data

Tags

Amazon

created on 2023-10-05

Clarifai

created on 2018-05-10

Imagga

created on 2023-10-05

| man | 29.6 |
| person | 24.6 |
| male | 22.9 |
| world | 21.1 |
| people | 20.6 |
| tricycle | 20.2 |
| wheeled vehicle | 19.6 |
| wheelchair | 18.6 |
| portrait | 18.1 |
| adult | 18.1 |
| seat | 17.7 |
| electric chair | 16.4 |
| child | 16.2 |
| bench | 14.7 |
| vehicle | 14.3 |
| chair | 13.8 |
| youth | 13.6 |
| hat | 13.4 |
| black | 13.2 |
| instrument of execution | 13.2 |
| clothing | 12.5 |
| happy | 12.5 |
| family | 12.4 |
| street | 12 |
| city | 11.6 |
| park | 11.5 |
| outdoor | 11.5 |
| smile | 11.4 |
| fashion | 11.3 |
| boy | 11.3 |
| human | 10.5 |
| outdoors | 10.4 |
| men | 10.3 |
| women | 10.3 |
| wall | 10.3 |
| grandfather | 10.2 |
| instrument | 10.2 |
| conveyance | 9.8 |
| mother | 9.6 |
| uniform | 9.5 |
| walk | 9.5 |
| parent | 9.4 |
| cowboy hat | 9.3 |
| dad | 9.2 |
| teenager | 9.1 |
| furniture | 9.1 |
| device | 9.1 |
| couple | 8.7 |
| lifestyle | 8.7 |
| park bench | 8.5 |
| casual | 8.5 |
| teen | 8.3 |
| style | 8.2 |
| active | 8.1 |
| life | 8.1 |
| face | 7.8 |
| father | 7.8 |
| culture | 7.7 |
| legs | 7.5 |
| military uniform | 7.5 |
| sport | 7.4 |
| guy | 7.3 |
| girls | 7.3 |
| danger | 7.3 |
| smiling | 7.2 |
| sexy | 7.2 |
| dress | 7.2 |
| suit | 7.2 |
| looking | 7.2 |
| pedestrian | 7.1 |
| posing | 7.1 |
| love | 7.1 |
| work | 7.1 |
| happiness | 7 |
| modern | 7 |

Google

created on 2018-05-10

| photograph | 96.4 |
| white | 96 |
| black | 95.4 |
| man | 93.6 |
| standing | 93.5 |
| sitting | 91.9 |
| black and white | 89.7 |
| male | 82.6 |
| photography | 81.8 |
| snapshot | 81.8 |
| monochrome photography | 74.2 |
| vintage clothing | 73.9 |
| monochrome | 64 |
| human behavior | 55.8 |
| angle | 55.6 |
| recreation | 51.5 |

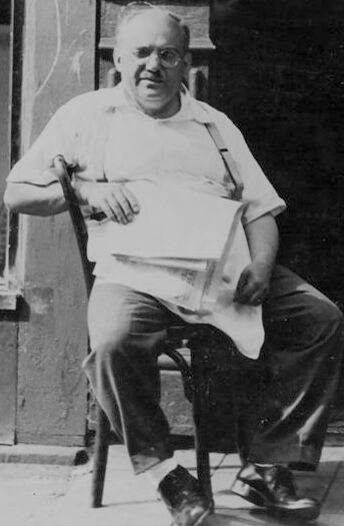

Color Analysis

Face analysis

AWS Rekognition

| Age | 50-58 |
| Gender | Male, 100% |
| Calm | 83.3% |
| Surprised | 7.1% |
| Confused | 6.4% |
| Fear | 6.2% |
| Sad | 3% |
| Happy | 2.5% |
| Angry | 1.6% |
| Disgusted | 1.4% |

Microsoft Cognitive Services

| Age | 60 |
| Gender | Male |

Google Vision

| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Possible |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| paintings art | 67.3% |
| people portraits | 28.6% |
| pets animals | 2.1% |

Captions

Microsoft

created on 2018-05-10

| a black and white photo of a man | 90.9% |
| an old black and white photo of a man | 90% |
| a vintage photo of a man | 89.9% |