Machine Generated Data

Tags

Amazon

created on 2023-10-05

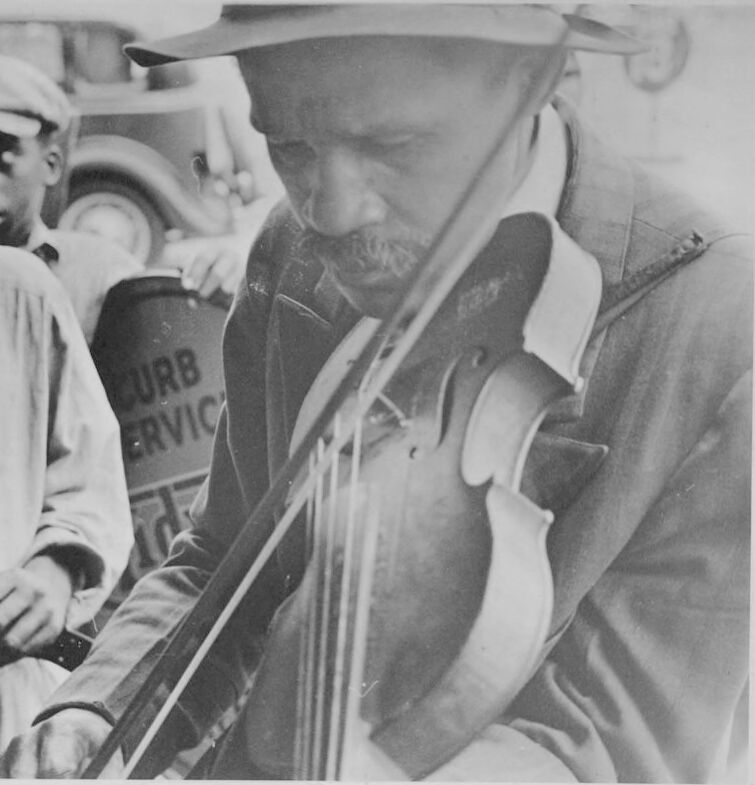

| Tag | Confidence (%) |
|---|---|
| Adult | 99.2 |
| Male | 99.2 |
| Man | 99.2 |
| Person | 99.2 |
| Adult | 99 |
| Male | 99 |
| Man | 99 |
| Person | 99 |
| Face | 92.6 |
| Head | 92.6 |
| Musical Instrument | 90.2 |
| Photography | 88.8 |
| Portrait | 88.8 |
| Clothing | 80.8 |
| Hat | 80.8 |
| Machine | 80.4 |
| Wheel | 80.4 |
| Hat | 79.3 |
| Violin | 76.5 |
| Guitar | 71.8 |
| Leisure Activities | 65.7 |
| Music | 65.7 |
| Musician | 65.7 |
| Performer | 65.7 |
| Guitarist | 55.6 |
| Group Performance | 55.6 |
| Music Band | 55.6 |
| Coat | 55.6 |
| Cello | 55.5 |

Clarifai

created on 2018-05-10

Imagga

created on 2023-10-05

| Tag | Confidence (%) |
|---|---|
| bass | 39.7 |
| man | 30.2 |
| person | 24.1 |
| people | 24 |
| male | 22.7 |
| work | 22 |
| car | 19.4 |
| vehicle | 17.1 |
| adult | 15.6 |
| face | 14.9 |
| tool | 14.1 |
| black | 13.8 |
| men | 13.7 |
| device | 13.6 |
| safety | 12.9 |
| equipment | 12.8 |
| driver | 12.6 |
| business | 12.1 |
| happy | 11.9 |
| portrait | 11.6 |
| automobile | 11.5 |
| bowed stringed instrument | 11.1 |
| transportation | 10.8 |
| worker | 10.7 |
| hand | 10.6 |
| looking | 10.4 |
| stringed instrument | 10.2 |
| fashion | 9.8 |
| hand tool | 9.8 |
| instrument | 9.7 |
| one | 9.7 |
| musical instrument | 9.5 |
| inside | 9.2 |
| human | 9 |
| technology | 8.9 |
| auto | 8.6 |
| sitting | 8.6 |
| wheel | 8.5 |
| attractive | 8.4 |
| health | 8.3 |
| plunger | 8.3 |
| industrial | 8.2 |
| music | 8.1 |
| job | 8 |
| working | 8 |
| medical | 7.9 |
| smile | 7.8 |
| model | 7.8 |
| professional | 7.7 |
| modern | 7.7 |
| casual | 7.6 |
| uniform | 7.6 |
| drive | 7.6 |
| head | 7.6 |
| power | 7.6 |
| close | 7.4 |
| transport | 7.3 |
| smiling | 7.2 |
| art | 7.2 |
| steel | 7.1 |
| look | 7 |

Google

created on 2018-05-10

| Tag | Confidence (%) |
|---|---|
| photograph | 95.4 |
| black and white | 92 |
| monochrome photography | 84.2 |
| monochrome | 69.3 |
| musical instrument accessory | 63.4 |
| human behavior | 60.5 |
| vintage clothing | 59.2 |
| stock photography | 52.5 |

Microsoft

created on 2018-05-10

| Tag | Confidence (%) |
|---|---|
| man | 99.3 |
| person | 98.8 |
| music | 93.2 |
| bowed instrument | 88 |
| old | 86.1 |
| bass fiddle | 22.2 |
| cello | 17.1 |

Color Analysis

Face analysis


AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 40-48 |
| Gender | Male, 99.7% |
| Sad | 100% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Calm | 0.7% |
| Angry | 0.1% |
| Disgusted | 0.1% |
| Happy | 0.1% |
| Confused | 0.1% |

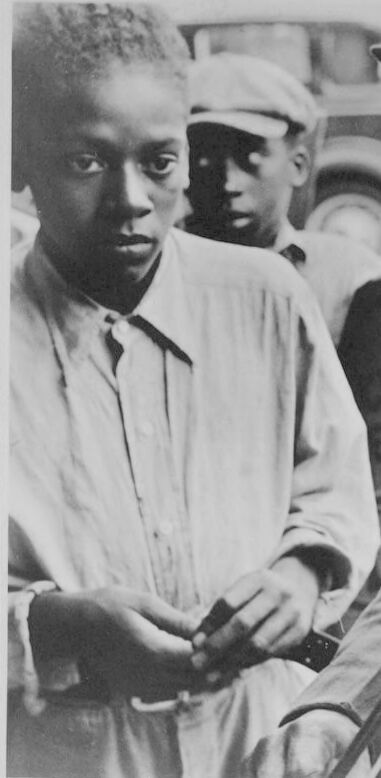

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 22-30 |
| Gender | Female, 99.9% |
| Sad | 85.8% |
| Calm | 56.9% |
| Surprised | 7% |
| Fear | 6.1% |
| Angry | 0.7% |
| Confused | 0.5% |
| Disgusted | 0.5% |
| Happy | 0.2% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 18-24 |
| Gender | Male, 99.9% |
| Calm | 73.8% |
| Surprised | 13.8% |
| Fear | 8.8% |
| Sad | 3.6% |
| Confused | 3% |
| Disgusted | 1% |
| Angry | 1% |
| Happy | 0.8% |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 58 |
| Gender | Male |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 31 |
| Gender | Male |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
|---|---|
| streetview architecture | 56.7% |
| paintings art | 35.2% |
| interior objects | 5.7% |
| cars vehicles | 1.2% |

Captions

Microsoft

created on 2018-05-10

| Caption | Confidence |
|---|---|
| a vintage photo of a man wearing a hat | 92.3% |
| a vintage photo of a man | 92.2% |
| an old photo of a man wearing a hat | 92.1% |

Text analysis

Amazon

CURB

ERVIC