Machine Generated Data

Tags

Amazon

created on 2023-10-06

Clarifai

created on 2018-05-10

Imagga

created on 2023-10-06

| Tag | Confidence |
|---|---|
| daily | 100 |
| money | 39.2 |
| currency | 35 |
| newspaper | 29.5 |
| dollar | 28.8 |
| business | 28 |
| finance | 27.9 |
| financial | 27.6 |
| banking | 27.6 |
| product | 26.9 |
| bank | 26 |
| cash | 25.6 |
| paper | 24.3 |
| wealth | 22.5 |
| hundred | 21.3 |
| creation | 20.9 |
| bills | 20.4 |
| dollars | 18.4 |
| savings | 17.7 |
| us | 16.4 |
| exchange | 16.2 |
| bill | 16.2 |
| sign | 15.8 |
| closeup | 14.8 |
| investment | 14.7 |
| grunge | 13.6 |
| banknote | 13.6 |
| pay | 13.4 |
| banknotes | 12.7 |
| finances | 12.5 |
| market | 12.4 |
| information | 12.4 |
| book | 12.4 |
| franklin | 11.8 |
| close | 11.4 |
| word | 11.3 |
| one | 11.2 |
| rich | 11.2 |
| old | 11.2 |
| note | 11 |
| packet | 10.6 |
| economy | 10.2 |
| symbol | 10.1 |
| funds | 9.8 |
| paying | 9.7 |
| text | 9.6 |
| loan | 9.6 |
| vintage | 9.1 |
| container | 9.1 |
| success | 8.9 |
| economic | 8.7 |
| states | 8.7 |
| debt | 8.7 |
| save | 8.5 |
| card | 8.5 |
| art | 8.5 |
| sale | 8.3 |
| package | 8.2 |
| billboard | 8 |
| creative | 7.9 |
| black | 7.8 |
| rate | 7.8 |
| capital | 7.6 |
| commercial | 7.5 |
| stock | 7.5 |
| number | 7.5 |
| document | 7.4 |
| design | 7.3 |
| world | 7.1 |
| conceptual | 7.1 |


Google

created on 2018-05-10

| Tag | Confidence |
|---|---|
| black and white | 89.8 |
| monochrome photography | 67.9 |
| font | 61.4 |
| history | 61.1 |
| monochrome | 59 |
| brand | 50.1 |


Color Analysis

Face analysis

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 19-27 |
| Gender | Female, 99.4% |
| Fear | 69.6% |
| Surprised | 16.9% |
| Confused | 10.8% |
| Calm | 9.7% |
| Sad | 4.8% |
| Angry | 3.1% |
| Disgusted | 2.5% |
| Happy | 1.6% |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 36 |
| Gender | Female |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

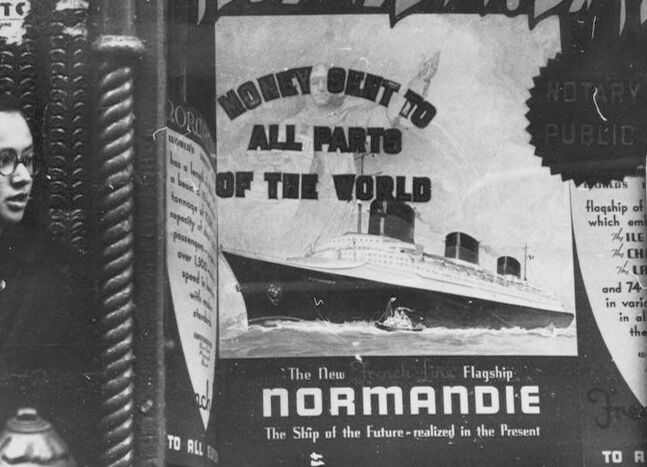

| Category | Confidence |
|---|---|
| text visuals | 92.6% |
| streetview architecture | 3.4% |
| paintings art | 2% |
| cars vehicles | 1.6% |


Captions

Microsoft

created on 2018-05-10

| Caption | Confidence |
|---|---|
| a black sign with white text | 88.1% |
| a white sign with black text | 86.3% |
| a black sign with white letters | 86.2% |

Text analysis

Amazon

ALL

the

Present

The

which

in

WORLD

of

and

The Ship of the Future-realized in the Present

Flagship

ALL PARTO

Ship

and 74

TO

TO ALL

74

ILE

PUBLIC

PARTO

Future-realized

CHI

BROKERAGE

in all

NOTARY

over

INSURANCE

LA

speed

all

LILL

NORMANDIE

in vario

em

flaqship of

vario

E

The LA

OF the WORLD

flaqship

over L300

New

capacity of E

MONEY

N

MONEY seet TO

TO RL

SPECIMEN INSURANCE BROKERAGE

OF

LILL ONE

WORLD'S

Free

b

: The

1

L300

& N

RORO

capacity

ED

speed when

N.!

: The New French-fina

ch

ENL

ENL BLLK

BLLK

b book ST

&

book

G.L

of ALL-KHID

RL

G.L UNLOKI

RX

KEEP

ONE

seet

stunders

>

N.CALERA.CO N.! L'LINL...!

ST

N.K.

UNLOKI

UNITED

access N.K.

éTIM

access

tonnony éTIM

N.CALERA.CO

French-fina

L'LINL...!

tonnony

ALL-KHID

when

SPECIMEN

87090

те

ALL PART

which em

in vari

The new

Flagship

ORMANDIE

TO RLL

The Ship of the Future- realized in the Present

TO R

те

ALL

PART

which

em

in

vari

The

new

Flagship

ORMANDIE

TO

RLL

Ship

of

the

Future-

realized

Present

R