Machine Generated Data

Tags

Amazon

created on 2023-10-05

| Tag | Confidence |
| --- | --- |
| Photography | 99.9 |
| Clothing | 99.8 |
| Adult | 99.5 |
| Male | 99.5 |
| Man | 99.5 |
| Person | 99.5 |
| Adult | 99.5 |
| Male | 99.5 |
| Man | 99.5 |
| Person | 99.5 |
| Male | 99.2 |
| Person | 99.2 |
| Boy | 99.2 |
| Child | 99.2 |
| Sitting | 99 |
| Face | 98.7 |
| Head | 98.7 |
| Portrait | 98.7 |
| Coat | 96 |
| Hat | 86.4 |
| Smoke | 82.4 |
| Body Part | 80.4 |
| Finger | 80.4 |
| Hand | 80.4 |
| People | 73.5 |
| Formal Wear | 73.3 |
| Footwear | 70 |
| Shoe | 70 |
| Sun Hat | 65.6 |
| Text | 65.2 |
| Shoe | 58.5 |
| Pants | 57.3 |
| Jacket | 57.1 |
| Suit | 57.1 |
| Accessories | 56.9 |
| Tie | 56.9 |
| Harmonica | 56.2 |
| Musical Instrument | 56.2 |
| Smoking | 55.5 |

Clarifai

created on 2018-05-10

Imagga

created on 2023-10-05

| Tag | Confidence |
| --- | --- |
| man | 30.9 |
| person | 27 |
| male | 26.2 |
| people | 21.7 |
| adult | 19.7 |
| business | 18.8 |
| businessman | 18.5 |
| suit | 18.4 |
| men | 15.4 |
| hat | 13.8 |
| scholar | 13.8 |
| building | 13.1 |
| sitting | 12.9 |
| silhouette | 12.4 |
| smile | 12.1 |
| work | 11.8 |
| newspaper | 11.7 |
| portrait | 11.6 |
| working | 11.5 |
| intellectual | 11 |
| outdoor | 10.7 |
| old | 10.4 |
| looking | 10.4 |
| professional | 9.8 |
| worker | 9.8 |
| human | 9.7 |
| product | 9.7 |
| clothing | 9.4 |
| happy | 9.4 |
| architecture | 9.4 |
| office | 9.2 |
| back | 9.2 |
| city | 9.1 |
| hand | 9.1 |
| black | 9 |
| success | 8.8 |
| computer | 8.8 |
| couple | 8.7 |
| love | 8.7 |
| industry | 8.5 |
| two | 8.5 |
| field | 8.4 |
| outdoors | 8.2 |
| industrial | 8.2 |
| executive | 8.1 |
| world | 8.1 |
| team | 8.1 |
| uniform | 8 |
| job | 8 |
| women | 7.9 |
| urban | 7.9 |
| soldier | 7.8 |
| creation | 7.8 |
| wall | 7.7 |
| sculpture | 7.6 |
| relax | 7.6 |
| manager | 7.4 |
| holding | 7.4 |
| symbol | 7.4 |
| occupation | 7.3 |
| alone | 7.3 |
| lady | 7.3 |
| laptop | 7.3 |
| dirty | 7.2 |
| active | 7.2 |
| grass | 7.1 |
| chair | 7.1 |
| modern | 7 |

Google

created on 2018-05-10

| Tag | Confidence |
| --- | --- |
| photograph | 95.6 |
| black and white | 88.8 |
| human behavior | 80.1 |
| male | 80 |
| gentleman | 75.5 |
| monochrome photography | 71.9 |
| vintage clothing | 63.9 |
| monochrome | 63.3 |

Color Analysis

Face analysis

AWS Rekognition

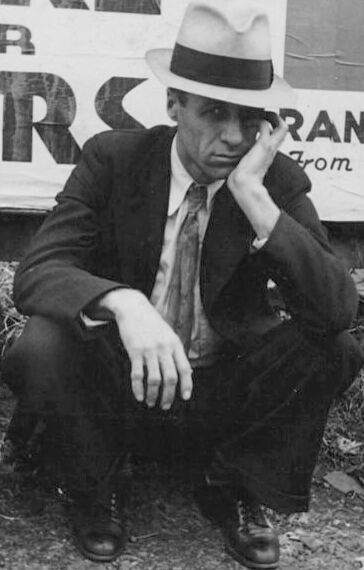

| Attribute | Value |
| --- | --- |
| Age | 42-50 |
| Gender | Male, 99.9% |
| Calm | 94.7% |
| Surprised | 6.4% |
| Fear | 5.9% |
| Sad | 4% |
| Angry | 0.3% |
| Confused | 0.2% |
| Disgusted | 0.1% |
| Happy | 0% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 35-43 |
| Gender | Male, 100% |
| Calm | 99.3% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Sad | 2.2% |
| Angry | 0.3% |
| Confused | 0.2% |
| Happy | 0.1% |
| Disgusted | 0.1% |

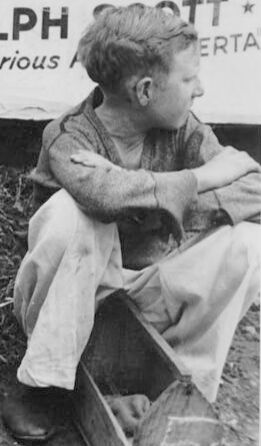

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 25-35 |
| Gender | Female, 62.6% |
| Calm | 97.6% |
| Surprised | 6.4% |
| Fear | 5.9% |
| Sad | 2.2% |
| Happy | 0.6% |
| Disgusted | 0.5% |
| Angry | 0.5% |
| Confused | 0.2% |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 46 |
| Gender | Male |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 27 |
| Gender | Male |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very likely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very likely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 91.2% |
| pets animals | 2% |
| food drinks | 1.4% |
| people portraits | 1.2% |
| streetview architecture | 1.2% |
| nature landscape | 1.2% |

Captions

Microsoft

created on 2018-05-10

| Caption | Confidence |
| --- | --- |
| a group of people sitting on a bench | 92.8% |
| a group of people that are sitting on a bench | 88.5% |
| a group of people sitting at a bench | 88.4% |

Text analysis

Amazon

the

Glorious

RANDOLPH

From the Glorious

ERTA

ERS

From

TT

A1RE

GER

AIRE

NGER

the

Glorious

AIRE

NGER

RANDOLPHA

RTA

rom the Glorious

RANDOLPHA

RTA

rom