Machine Generated Data

Face analysis

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 41-49 |
| Gender | Female, 98.9% |
| Angry | 53.4% |
| Calm | 38.2% |
| Surprised | 6.8% |
| Fear | 6% |
| Confused | 3.4% |
| Sad | 3% |
| Disgusted | 0.7% |
| Happy | 0.2% |

Feature analysis

AWS Rekognition

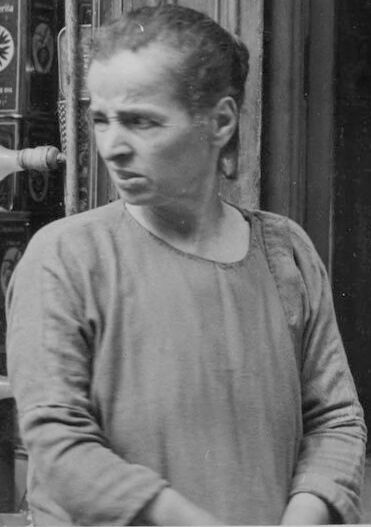

| Label | Confidence |
|---|---|
| Person | 99.8% |

Categories

Imagga

Created on 2023-10-07

| Category | Confidence |
|---|---|
| people portraits | 99.4% |

Captions

Microsoft

Created by unknown on 2018-05-10

| Caption | Confidence |
|---|---|
| a man standing in front of a window | 91.5% |
| a man standing in front of a store window | 87.9% |
| a man standing in front of a window posing for the camera | 85.6% |

Clarifai

No captions written

Salesforce

Created by general-english-image-caption-blip on 2025-05-28

a photograph of a young boy standing in front of a store

Created by general-english-image-caption-blip-2 on 2025-07-07

a woman standing in front of a store window

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-11

The image shows a person standing outside a storefront amidst stacked tins of olive oil and various canned goods. The containers display ornate designs, with labels bearing text such as "olio d'oliva" and "La Preferita," featuring olive-themed motifs. The storefront itself has a vintage appearance, with glass windows and a door partially visible in the background. Light bulbs attached to the exterior suggest signage or decoration.

Created by gpt-4o-2024-08-06 on 2025-06-11

The image shows a person standing in front of a shop or display area. Behind the person, there are numerous cylindrical and rectangular containers stacked closely together. These containers seem to be packaging for some type of product, possibly food items. The packaging has various designs and text on it, representing different brands or types. Specifically, there are labels with olive graphics and words indicating that the products are related to olive oil, with the text "olio d'oliva" and "Italia." The display looks to be in a storefront setting, suggesting that these are goods available for sale.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-15

The image shows a person, likely a man, standing in front of a store or shop display. The display appears to be filled with various canned or packaged goods, some of which have distinctive designs or logos on them. The person in the image has a serious expression on their face and is looking directly at the camera. The image is in black and white, giving it an older, historical feel.

Created by us.anthropic.claude-3-opus-20240229-v1:0 on 2025-06-15

The black and white image shows a woman standing in front of a storefront window filled with stacked cans and boxes of various products, likely food items. The woman has a serious expression and appears to be looking slightly off to the side. She is wearing a plain, long-sleeved shirt or sweater. The storefront has an old-fashioned, early 20th century appearance based on the style of the product packaging and window display.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-15

This is a black and white photograph that appears to be from an earlier era, showing someone standing in front of what appears to be a shop or store display. In the background, there are stacked boxes and tins with decorative patterns and logos visible. The person is wearing a simple long-sleeved garment and appears to be looking off to the side. The image has the quality and style typical of documentary photography from the early-to-mid 20th century. The display behind them includes what look like product boxes arranged on shelves, possibly in a storefront window or counter display.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-03

The image is a black-and-white photograph of a woman standing in front of a store window, surrounded by various items.

The woman has her hair pulled back and is wearing a long-sleeved shirt. She is looking to the left of the camera with a neutral expression. The background of the image shows a store window filled with boxes and cans stacked on top of each other. The boxes are all different sizes and have various labels on them, including "La Frederia" and "Olio d'Olio." The cans are also labeled, but the text is not legible. The overall atmosphere of the image suggests that it was taken in the early 20th century, possibly in Europe or the United States.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-03

This image depicts a black-and-white photograph of a woman standing in front of a storefront, surrounded by stacks of various products. The woman, positioned centrally, has her hair pulled back and wears a long-sleeved shirt. Her gaze is directed to the left side of the image.

Behind her, the store's shelves are filled with an assortment of items, including cans and boxes featuring circular logos. Some of these products have labels written in Italian, such as "La Preferita" and "Olio d'Oliva." A light bulb hangs from the ceiling above the products, adding to the store's ambiance.

In the background, the dark interior of the store provides a subtle contrast to the well-lit foreground. The overall atmosphere of the image suggests that it was taken during the daytime, with natural light illuminating the scene. The photograph appears to be an old, vintage image, capturing a moment in time from a bygone era.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-06-02

The black-and-white image depicts a woman standing in front of a shop. She is looking at something, and her mouth is slightly open. She is wearing a long-sleeve shirt with her hands on her waist. Behind her, there are several items displayed on the shelves. There are several canned goods, and some of them have labels. There are also some round objects that are probably bottles. There are two light bulbs hanging on the wall.

Created by amazon.nova-lite-v1:0 on 2025-06-02

The image shows a black-and-white photograph of a woman standing in front of a store. She is wearing a long-sleeved shirt and has her hands in her pockets. Behind her, there is a display of various products, including boxes and cans, arranged on shelves. The store has a glass door with a handle, and there is a reflection of a person in the glass. The photograph has a vintage look, with a slightly blurry and grainy quality.

Google Gemini

Created by gemini-2.0-flash on 2025-05-29

Here is a description of the image:

This vintage black-and-white photograph shows a woman standing by a shop window filled with food products. The woman is positioned slightly off-center, looking towards the left with a somewhat pensive expression. She has a simple hairstyle, with her hair pulled back. She wears a plain, long-sleeved shirt.

The shop window is packed with stacked cans and containers, mostly featuring the words "La Preferita" and "OLIO D'OLIVA," suggesting they contain olive oil. There are also labels that mention "ITALIA." Some of the cans are decorated with intricate patterns and symbols, while others have simpler designs.

Two doorknobs jut out from the shop window frame, adding a touch of depth to the composition. Behind the woman, there's a dark, indistinct space, possibly the interior of the shop, which creates a contrast with the brighter area around the window display and the woman.

The photograph has a classic, almost nostalgic quality, with its monochrome palette and the style of the products on display, giving the impression of being taken in a traditional European market setting.

Created by gemini-2.0-flash-lite on 2025-05-29

Here's a description of the image:

Overall Impression:

The image is a black and white photograph, likely from the early to mid-20th century, based on the style and subject matter. It seems to depict a woman standing in front of a shop window or doorway.

Details:

- Subject: A woman is the central figure. She is wearing a simple long-sleeved top. Her hair is pulled back. She has a serious, pensive expression, and appears to be looking off to the side.

- Setting: She's standing next to a display or shop window. The background is filled with stacked cans of olive oil and perhaps other Italian products. Some of the cans have the brand name "La Preferita".

- Style: The photograph has a documentary feel, with natural lighting.

Possible Interpretation:

The image might portray a shopkeeper or a customer in a shop that sells imported Italian products. The setting and the woman's expression hint at a moment of everyday life, possibly captured in a quiet, observant way. The image is likely taken outside the shop, based on the door behind the woman.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-01

The image is a black-and-white photograph depicting a young boy standing in front of a store window. The boy appears to be looking directly at the camera with a neutral expression. He is wearing a simple, long-sleeved shirt and has short hair.

Behind the boy, the store window displays a variety of canned goods. The cans are stacked neatly and prominently feature labels with the brand name "La Preferita." The labels have a distinctive design, including a circular emblem and text that suggests the cans contain olive oil. The window display also includes some round containers, possibly cheese wheels, placed at the bottom.

The setting appears to be an urban environment, likely a street scene from an earlier time period, given the style of the photograph and the products on display. The overall mood of the image is somewhat somber, reflecting the simplicity and everyday life of the era.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-11

The image is a black-and-white photograph that appears to be from the mid-20th century, judging by the style and the quality of the photograph. It features a person standing in front of a shop window. The shop window is filled with stacks of what seem to be containers of olive oil, as indicated by the labels that read "La Preferita" and "Olio d'Oliva." The person, wearing a plain long-sleeved shirt, has a serious or contemplative expression on their face. The overall tone of the photograph is somber and reflective.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-11

This black-and-white photograph appears to be a vintage image, likely from the mid-20th century. It features a woman standing in front of a shop window. The woman is wearing a simple, long-sleeved, light-colored shirt and has a solemn or pensive expression on her face. Her hair is pulled back, and she is looking to the side.

Behind her, inside the shop, there are numerous stacked boxes and containers, many of which are labeled with the brand "La Preferita," which is known for its products such as olive oil. The boxes and containers are neatly arranged, and the shop appears to be well-stocked. The window itself has a traditional design with a round doorknob visible in the center. The overall atmosphere of the image suggests a candid moment captured in a small, possibly family-run store.