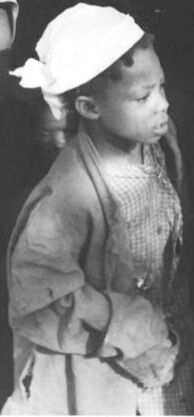

Machine Generated Data

Tags

Amazon

created on 2023-10-06

| Tag | Confidence |
|---|---|
| Clothing | 100 |
| Hat | 100 |
| Face | 99.9 |
| Head | 99.9 |
| Photography | 99.9 |
| Portrait | 99.9 |
| Person | 99.4 |
| Boy | 99.4 |
| Child | 99.4 |
| Male | 99.4 |
| Person | 98.9 |
| Baby | 98.9 |
| Cap | 97.8 |
| Outdoors | 93.3 |
| Bonnet | 84.3 |
| Sun Hat | 80.1 |
| Wood | 77 |
| Countryside | 75.6 |
| Nature | 75.6 |
| Door | 74.7 |
| People | 67.6 |
| Baseball Cap | 63.4 |
| Architecture | 57.7 |
| Building | 57.7 |
| Hut | 57.7 |
| Rural | 57.7 |
| Body Part | 55.2 |
| Neck | 55.2 |
| Dress | 55.1 |
| Lady | 55.1 |
| Housing | 55 |

Clarifai

created on 2018-05-10

Imagga

created on 2023-10-06

| Tag | Confidence |
|---|---|
| cowboy hat | 72.9 |
| hat | 62.1 |
| headdress | 44.6 |
| clothing | 30.4 |
| old | 19.5 |
| building | 19.1 |
| man | 17.5 |
| person | 17.3 |
| covering | 17 |
| wall | 16.2 |
| door | 15.9 |
| people | 15.6 |
| adult | 15.5 |
| consumer goods | 15.3 |
| portrait | 14.2 |
| house | 14.2 |
| window | 14.2 |
| male | 13.7 |
| fashion | 13.6 |
| attractive | 12.6 |
| ancient | 12.1 |
| sexy | 12 |
| happy | 11.9 |
| architecture | 11.7 |
| wood | 11.7 |
| posing | 11.5 |
| home | 11.2 |
| model | 10.9 |
| one | 10.4 |
| men | 10.3 |
| lifestyle | 10.1 |
| face | 9.9 |
| dirty | 9.9 |
| pretty | 9.8 |
| lady | 9.7 |
| hair | 9.5 |
| construction | 9.4 |
| city | 9.1 |
| alone | 9.1 |
| human | 9 |
| body | 8.8 |
| wooden | 8.8 |
| brick | 8.8 |
| urban | 8.7 |
| stone | 8.4 |
| black | 8.4 |
| outdoor | 8.4 |
| emotion | 8.3 |
| street | 8.3 |
| occupation | 8.2 |
| looking | 8 |
| entrance | 7.7 |
| elegant | 7.7 |
| dark | 7.5 |
| vintage | 7.4 |
| exterior | 7.4 |
| water | 7.3 |
| sensuality | 7.3 |
| industrial | 7.3 |
| metal | 7.2 |
| job | 7.1 |

Google

created on 2018-05-10

| Tag | Confidence |
|---|---|
| photograph | 96.2 |
| black and white | 90.4 |
| standing | 87.9 |
| snapshot | 81.8 |
| photography | 80.1 |
| male | 80.1 |
| monochrome photography | 78.6 |
| monochrome | 65.7 |
| vintage clothing | 65.5 |
| window | 52.8 |
| girl | 51.7 |

Color Analysis

Face analysis

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 6-12 |
| Gender | Male, 88.5% |
| Calm | 95.3% |
| Surprised | 6.5% |
| Fear | 6.1% |
| Sad | 2.9% |
| Confused | 0.6% |
| Disgusted | 0.3% |
| Angry | 0.2% |
| Happy | 0.2% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 21-29 |
| Gender | Male, 90.3% |
| Confused | 59.1% |
| Calm | 22.4% |
| Fear | 9.4% |
| Surprised | 8.3% |
| Sad | 4.2% |
| Angry | 0.9% |
| Happy | 0.8% |
| Disgusted | 0.7% |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 36 |
| Gender | Female |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 30 |
| Gender | Female |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Unlikely |
| Joy | Very unlikely |
| Headwear | Unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very likely |
| Blurred | Very unlikely |

Imagga

| Traits | no traits identified |

Imagga

| Traits | no traits identified |

Feature analysis

Categories

Imagga

| Category | Confidence |
|---|---|
| people portraits | 83.8% |
| paintings art | 8.7% |
| streetview architecture | 3.3% |
| pets animals | 2.5% |

Captions

Microsoft

created by unknown on 2018-05-10

| Caption | Confidence |
|---|---|
| a man and a woman posing for a photo | 45.6% |
| a person posing for a photo | 45.5% |
| a person posing for the camera | 45.4% |