Machine Generated Data

Face analysis

AWS Rekognition

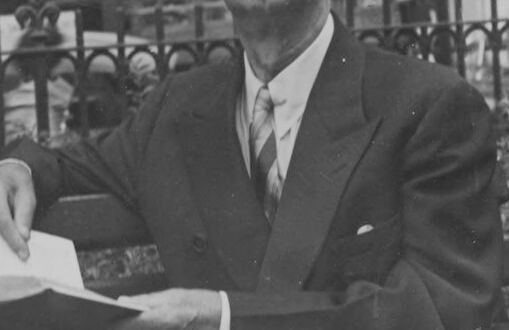

| Attribute | Value |
| --- | --- |
| Age | 62-72 |
| Gender | Male, 100% |
| Confused | 45.3% |
| Calm | 35.5% |
| Sad | 10.4% |
| Surprised | 8.3% |
| Fear | 6.3% |
| Angry | 1.3% |
| Disgusted | 1.2% |
| Happy | 0.3% |

Feature analysis

AWS Rekognition

| Feature | Confidence |
| --- | --- |
| Adult | 99.5% |

Categories

Imagga

Created on 2023-10-06

| Category | Confidence |
| --- | --- |
| people portraits | 45.7% |
| streetview architecture | 24% |
| interior objects | 11.8% |
| paintings art | 9.3% |
| pets animals | 4.9% |
| cars vehicles | 2.1% |
| food drinks | 1% |

Captions

Microsoft

Created by unknown on 2018-05-10

| Caption | Confidence |
| --- | --- |
| a black and white photo of a man in a suit and tie | 98.8% |
| a black and white photo of a man | 96.4% |
| an old photo of a man in a suit and tie | 96.3% |

Clarifai

No captions written

Salesforce

Created by general-english-image-caption-blip-2 on 2025-07-06

a man in a suit and hat sitting on a bench

Created by general-english-image-caption-blip on 2025-05-04

a photograph of a man in a suit and hat sitting on a bench

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-09

This image depicts a man seated on a bench in an urban setting, dressed in a suit and tie, complemented by a straw hat with a ribbon. He is holding an open book in his hand, suggesting he may be reading or pausing in contemplation. The background showcases a street scene with historic architectural details, including large windows, a storefront, railings, and a billboard. People are faintly visible in the photograph, contributing to the lively atmosphere of a public space.

Created by gpt-4o-2024-08-06 on 2025-06-09

The image depicts a person sitting on a bench outdoors, dressed in a suit with a tie and wearing a straw boater hat. The person is holding an open book in their right hand, which is resting on the arm of the bench. In the background, there is a street scene with a metal fence and several buildings, including one with large windows, one with an awning, and an advertisement on a wall. The setting appears to be an urban environment, possibly a park or plaza.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-13

The image shows a man wearing a hat and glasses, standing in front of a storefront. He appears to be an older gentleman, dressed in a suit and tie, and is holding some papers or documents in his hands. The background includes other buildings and storefronts, suggesting this is a city street scene. The image has a black and white, vintage aesthetic, indicating it was likely taken some time in the past.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-13

This is a black and white photograph that appears to be from an earlier era, likely the 1920s or 1930s. The image shows a person sitting on what appears to be a public bench, wearing a formal suit with a tie and a straw boater hat. They are wearing round spectacles and have a somewhat stern or serious expression. The person is holding what appears to be an open book or document. In the background, there is a city street scene with buildings, windows, and what looks like an iron fence or railing. The overall composition suggests this may have been taken in an urban setting, perhaps near a business district or public square.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-12

The image is a black-and-white photograph of a man sitting on a bench, reading a book. The man is wearing a suit, tie, and hat, and is holding the book in his left hand. He appears to be sitting in a park or other outdoor setting, with buildings visible in the background.

- The man is wearing a suit, tie, and hat.

- He is holding a book in his left hand.

- He appears to be sitting in a park or other outdoor setting.

- Buildings are visible in the background.

The image suggests that the man is taking a break from his daily activities to enjoy some reading time in a peaceful outdoor setting.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-12

This image is a black and white photograph of a man sitting on a bench, reading a book. The man is dressed in a suit, tie, and hat, with his right arm resting on the back of the bench and his left hand holding the book. He appears to be wearing glasses with large lenses.

In the background, there are buildings and a fence, suggesting that the scene is set in an urban environment. The overall atmosphere of the image is one of quiet contemplation, with the man lost in thought as he reads his book.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-06

The image is a black-and-white photograph of a man sitting on a bench. He is wearing a suit, a tie, and a hat with a wide brim. He has glasses on his face and is holding a book in his hands. Behind him, there is a fence with plants and a building with glass windows. The image has a vintage look, suggesting it was taken in the past.

Created by amazon.nova-pro-v1:0 on 2025-06-06

The black-and-white image shows a man sitting on a bench outside a building. He is wearing a suit, a tie, a hat, and glasses. He is holding a book in his left hand, while his right hand is resting on the bench. Behind him, there is a black fence with plants and bushes. The building has a signboard with some text, and there are some people walking behind the fence.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-02

Certainly! Here's a description of the image:

Overall Impression:

The image is a black and white photograph that appears to be from the mid-20th century. It portrays a man sitting on a park bench, holding an open book. The setting seems to be an urban environment with buildings visible in the background.

Details:

- The Man: He is the central focus. He's wearing a suit, a tie, a hat, and round glasses. His expression is serious, almost contemplative.

- The Setting: The backdrop reveals buildings, possibly commercial or office structures, with windows. In the background, there's also an advertisement for "Sunkist Oranges". A wrought-iron fence and foliage suggest that the man is in a park or a similar public space.

- Composition: The photographer likely used the rule of thirds, placing the man on the right side of the frame to showcase both the subject and the context. The lines of the bench and the buildings in the background offer depth.

- Mood/Atmosphere: The photo has a somewhat formal and perhaps introspective feel. The man's serious expression and the vintage aesthetic create a sense of history.

If you have another image, feel free to share it!

Created by gemini-2.0-flash on 2025-05-02

Here is a description of the image:

This is a black and white photograph of a man sitting on a bench in what appears to be a public park or urban setting. The man is wearing a suit and tie, a light-colored hat, and round eyeglasses. He is holding a book open in his hands, though he is looking directly at the camera with a slightly somber expression.

Behind the man, there is a low, decorative wrought iron fence and some greenery. In the background, there are buildings with windows and what looks like a store or business front with an awning. One of the signs in the background says "Sunkist Oranges." The background details suggest an urban environment, likely taken sometime in the mid-20th century based on the clothing and style of the buildings.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-03

The image is a black-and-white photograph depicting an older man sitting on a park bench in an urban setting. He is wearing a suit and tie, a light-colored hat, and distinctive round glasses. He appears to be reading a book or a newspaper. The background shows a city street with buildings, storefronts, and a few other people. The overall atmosphere suggests a scene from the mid-20th century, possibly in a busy downtown area. The man's expression is serious and focused, and the photograph captures a moment of quiet amidst the bustling city environment.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-05

The image is a black-and-white photograph depicting a man seated on a bench in what appears to be a public outdoor setting, likely a park or plaza. The man is dressed in formal attire, wearing a suit, tie, and a white hat with a dark band. He sits with a serious or contemplative expression, holding a book open, as if he is reading or reviewing something. The background shows a cityscape with buildings, windows, and a metal fence, suggesting an urban environment. The overall atmosphere of the image conveys a sense of quietude and introspection.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-05

This black-and-white photograph depicts an older man sitting on a park bench, dressed in formal attire. He is wearing a dark suit, a white shirt, a patterned tie, a white fedora hat, and round glasses. He appears to be holding a piece of paper or a book in his hands. The background shows a city street with buildings, a fence, and some people in the distance. The overall style of the image suggests it was taken in the early to mid-20th century.