Machine Generated Data

Tags

Amazon

created on 2023-10-05

| Tag | Confidence |
| --- | --- |
| Architecture | 100 |
| Building | 100 |
| Countryside | 100 |
| Hut | 100 |
| Nature | 100 |
| Outdoors | 100 |
| Rural | 100 |
| Shack | 99.9 |
| Adult | 99.6 |
| Bride | 99.6 |
| Female | 99.6 |
| Person | 99.6 |
| Wedding | 99.6 |
| Woman | 99.6 |
| Shelter | 99 |
| Person | 96.7 |
| Face | 96.3 |
| Head | 96.3 |
| Photography | 96.3 |
| Portrait | 96.3 |
| Housing | 95.5 |
| House | 87.1 |
| Cabin | 57.9 |
| Lady | 56.6 |
| Pottery | 56 |
| Brick | 55.8 |

Clarifai

created on 2018-05-10

Imagga

created on 2023-10-05

| Tag | Confidence |
| --- | --- |
| hut | 40 |
| structure | 35.7 |
| shelter | 35.1 |
| roof | 33.4 |
| building | 22.8 |
| house | 22.6 |
| old | 20.9 |
| architecture | 20.4 |
| yurt | 20.4 |
| thatch | 19.3 |
| protective covering | 18.9 |
| dwelling | 18.3 |
| travel | 16.2 |
| sky | 14.7 |
| window | 14.3 |
| brick | 14.1 |
| tourism | 14 |
| landscape | 12.6 |
| wall | 12.6 |
| housing | 12.4 |
| outdoor | 12.2 |
| covering | 11.7 |
| mountain | 11.6 |
| umbrella | 11.1 |
| historic | 11 |
| clouds | 11 |
| countryside | 11 |
| wood | 10.8 |
| village | 10.7 |
| hovel | 10.5 |
| ancient | 10.4 |
| home | 10.4 |
| summer | 10.3 |
| grass | 10.3 |
| stone | 10.1 |
| religion | 9.9 |
| trees | 9.8 |
| outdoors | 9.7 |
| rural | 9.7 |
| wooden | 9.7 |
| country | 9.7 |
| adult | 9.1 |
| tile | 8.8 |
| equipment | 8.7 |
| antique | 7.8 |
| scene | 7.8 |
| attractive | 7.7 |
| tree | 7.7 |
| door | 7.6 |
| roofing | 7.5 |
| canopy | 7.4 |
| vintage | 7.4 |
| church | 7.4 |
| water | 7.3 |
| weather | 7.3 |
| material | 7.2 |
| road | 7.2 |
| building material | 7.1 |

Google

created on 2018-05-10

| Tag | Confidence |
| --- | --- |
| photograph | 96.2 |
| black | 95 |
| black and white | 92.3 |
| monochrome photography | 85.6 |
| photography | 82 |
| snapshot | 81.8 |
| standing | 77.1 |
| history | 70.4 |
| house | 69.7 |
| monochrome | 68.3 |
| vintage clothing | 66.5 |
| stock photography | 55.3 |
| shack | 52 |

Microsoft

created on 2018-05-10

| Tag | Confidence |
| --- | --- |
| person | 93.5 |

Color Analysis

Face analysis

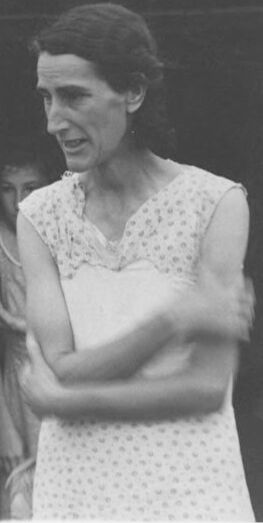

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 43-51 |
| Gender | Female, 100% |
| Sad | 95.3% |
| Confused | 25.5% |
| Calm | 13.1% |
| Angry | 9.2% |
| Surprised | 6.9% |
| Fear | 6.3% |
| Disgusted | 1.5% |
| Happy | 0.4% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 19-27 |
| Gender | Female, 97.4% |
| Calm | 74.3% |
| Disgusted | 10.2% |
| Surprised | 6.8% |
| Fear | 6.4% |
| Sad | 5.3% |
| Happy | 2.4% |
| Confused | 2.2% |
| Angry | 2% |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 44 |
| Gender | Male |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 42 |
| Gender | Female |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 55.5% |
| streetview architecture | 35.8% |
| people portraits | 5.1% |

Captions

Microsoft

created on 2018-05-10

| Caption | Confidence |
| --- | --- |
| a person sitting on a bench in front of a building | 56.6% |
| a person sitting in front of a building | 56.5% |
| a person sitting on a bench in front of a building | 56.4% |