Machine Generated Data

Tags

Amazon

created on 2023-10-06

Clarifai

created on 2018-05-10

Imagga

created on 2023-10-06

| Tag | Confidence |
|---|---|
| adult | 32.4 |
| person | 29.9 |
| sitting | 24.9 |
| people | 24 |
| happy | 21.9 |
| child | 20.7 |
| man | 20.4 |
| computer | 19.5 |
| lifestyle | 19.5 |
| male | 18.1 |
| laptop | 18 |
| casual | 16.1 |
| attractive | 16.1 |
| indoors | 15.8 |
| portrait | 15.5 |
| home | 15.2 |
| work | 14.9 |
| pretty | 14.7 |
| couple | 13.9 |
| sofa | 13.9 |
| couch | 13.5 |
| technology | 13.4 |
| smiling | 13 |
| alone | 12.8 |
| love | 12.6 |
| room | 12.4 |
| working | 11.5 |
| boy | 11.3 |
| human | 11.3 |
| women | 11.1 |
| youth | 11.1 |
| teen | 11 |
| world | 10.8 |
| cute | 10.8 |
| sadness | 10.7 |
| face | 10.7 |
| interior | 10.6 |
| lonely | 10.6 |
| newspaper | 10.5 |
| looking | 10.4 |
| business | 10.3 |
| smile | 10 |
| old | 9.8 |
| lady | 9.7 |
| outdoors | 9.7 |
| education | 9.5 |
| notebook | 9.5 |
| living | 9.5 |
| juvenile | 9.4 |
| two | 9.3 |
| relax | 9.3 |
| student | 9.2 |
| city | 9.1 |
| leisure | 9.1 |
| teenager | 9.1 |
| fashion | 9 |
| family | 8.9 |
| together | 8.8 |
| urban | 8.7 |
| hair | 8.7 |
| sad | 8.7 |
| happiness | 8.6 |
| jeans | 8.6 |
| senior | 8.4 |
| relaxation | 8.4 |
| product | 8.2 |
| cheerful | 8.1 |
| kid | 8 |
| look | 7.9 |
| rest | 7.9 |
| grief | 7.9 |
| keyboard | 7.7 |
| expression | 7.7 |
| fun | 7.5 |
| one | 7.5 |
| street | 7.4 |
| indoor | 7.3 |
| relaxing | 7.3 |
| mother | 7.3 |
| building | 7.2 |

Google

created on 2018-05-10

| Tag | Confidence |
|---|---|
| photograph | 96 |
| black | 95.5 |
| black and white | 91 |
| snapshot | 81.8 |
| monochrome photography | 80.2 |
| sitting | 79.2 |
| human behavior | 73.9 |
| human | 72.6 |
| monochrome | 68.1 |
| vintage clothing | 59 |
| stock photography | 57.1 |

Microsoft

created on 2018-05-10

| Tag | Confidence |
|---|---|
| person | 88.6 |

Color Analysis

Face analysis

AWS Rekognition

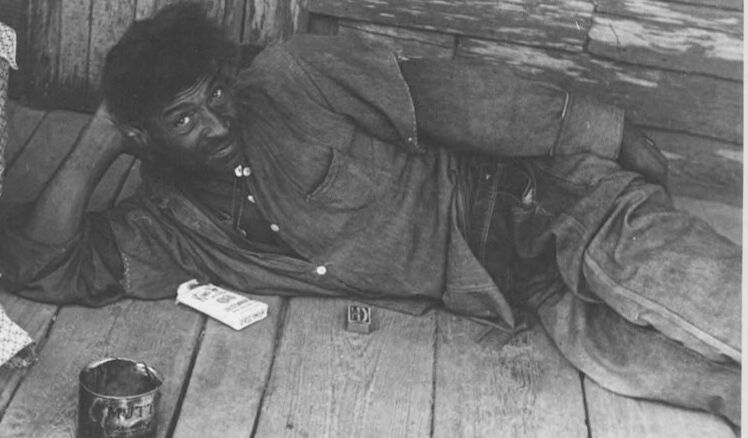

| Attribute | Value |
|---|---|
| Age | 19-27 |
| Gender | Female, 100% |
| Calm | 68.9% |
| Sad | 53.2% |
| Surprised | 6.6% |
| Fear | 6.2% |
| Angry | 0.8% |
| Confused | 0.7% |
| Disgusted | 0.3% |
| Happy | 0.2% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 31-41 |
| Gender | Male, 99.9% |
| Fear | 16.8% |
| Happy | 16.6% |
| Disgusted | 15.5% |
| Angry | 15.1% |
| Confused | 13.9% |
| Calm | 11% |
| Surprised | 7.8% |
| Sad | 6.2% |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 31 |
| Gender | Female |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
|---|---|
| paintings art | 49.9% |
| people portraits | 23.9% |
| streetview architecture | 6.8% |
| text visuals | 6.6% |
| interior objects | 3% |
| beaches seaside | 2.9% |
| cars vehicles | 1.9% |
| nature landscape | 1.8% |
| pets animals | 1.3% |

Captions

Microsoft

created on 2018-05-10

| Caption | Confidence |
|---|---|
| a person sitting on a bench | 86.1% |
| a man and a woman sitting on a bench | 60% |
| a person sitting on a bench looking at the camera | 59.9% |